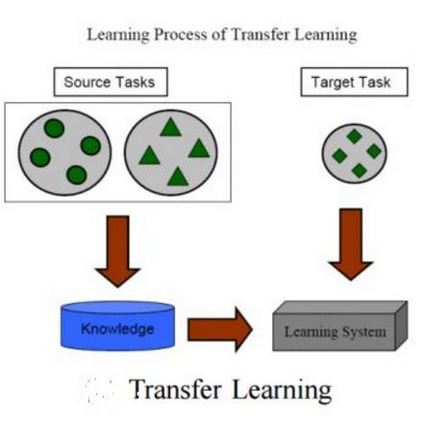

One desired capability for machines is the ability to transfer their knowledge of one domain to another where data is (usually) scarce. Despite the wide adoption of transfer learning in various deep learning applications, we do not yet understand what enables a successful transfer and which part of the network is responsible for it. In this paper, we provide new tools and analyses to address these fundamental questions. Through a series of analyses on transferring to block-shuffled images, we separate the effect of feature reuse from that of learning low-level statistics of the data, and show that some of the benefit of transfer learning comes from the latter. We show that when training from pre-trained weights, the model stays in the same basin of the loss landscape, and that different instances of such a model are similar in feature space and close in parameter space.
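To make "block-shuffled images" concrete, here is a minimal NumPy sketch of one way to produce them: the image is cut into equal square blocks, which are then randomly permuted, so that large-scale visual features are disrupted while low-level pixel statistics are preserved. The function name `block_shuffle`, its parameters, and the requirement that the image sides be divisible by the block size are assumptions made for illustration. The abstract does not specify the exact procedure.

```python
import numpy as np


def block_shuffle(image, block_size, rng=None):
    """Randomly permute the square blocks of an H x W (x C) image.

    Hypothetical helper for illustration; not taken from the paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    if h % block_size or w % block_size:
        raise ValueError("image sides must be divisible by block_size")
    rows, cols = h // block_size, w // block_size
    extra = image.shape[2:]  # channel axis, if present

    # Cut the image into rows * cols blocks of block_size x block_size pixels.
    blocks = (
        image.reshape(rows, block_size, cols, block_size, *extra)
        .swapaxes(1, 2)
        .reshape(rows * cols, block_size, block_size, *extra)
    )

    # Shuffle the order of the blocks; pixels inside each block are untouched.
    blocks = blocks[rng.permutation(rows * cols)]

    # Reassemble the shuffled blocks into an image of the original shape.
    return (
        blocks.reshape(rows, cols, block_size, block_size, *extra)
        .swapaxes(1, 2)
        .reshape(image.shape)
    )
```

Smaller values of `block_size` destroy more of the image's spatial structure, while a block size equal to the image size leaves the image unchanged. Sweeping this parameter is one way to probe how much a downstream model relies on reusable visual features rather than on low-level statistics.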
