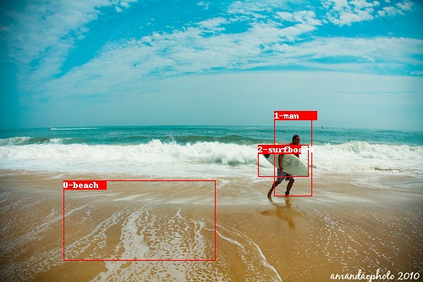

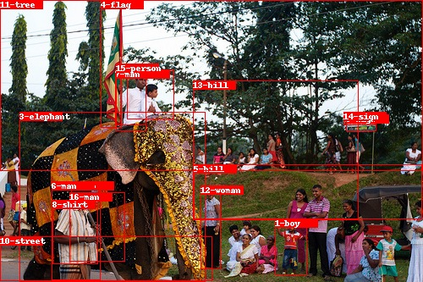

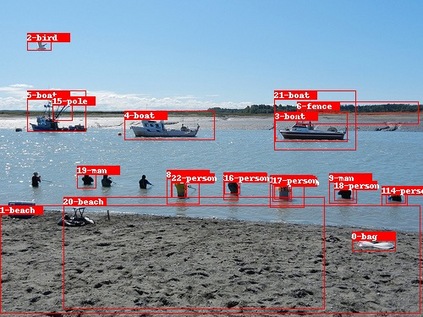

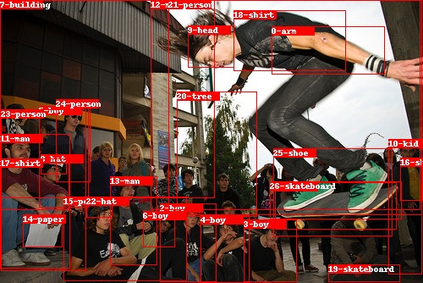

Scene graph generation (SGG) aims to capture a wide variety of interactions between pairs of objects, which is essential for full scene understanding. Existing SGG methods trained on the entire set of relations fail to acquire complex reasoning about visual and textual correlations because of various biases in the training data. Learning from trivial relations that indicate a generic spatial configuration, such as 'on', instead of informative relations, such as 'parked on', does not enforce this complex reasoning and harms generalization. To address this problem, we propose a novel framework for SGG training that exploits relation labels based on their informativeness. Our model-agnostic training procedure imputes missing informative relations for less informative samples in the training data and trains an SGG model on the imputed labels along with the existing annotations. We show that this approach can be used successfully in conjunction with state-of-the-art SGG methods and significantly improves their performance on multiple metrics on the standard Visual Genome benchmark. Furthermore, we obtain considerable improvements for unseen triplets in a more challenging zero-shot setting.
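
The abstract describes the imputation step only at a high level. Below is a minimal, hypothetical sketch of informativeness-based label imputation, not the authors' implementation. It assumes that informativeness is the inverse relative frequency of a predicate in the training annotations, that an already-trained SGG model supplies per-predicate scores (`scores`) for each subject-object pair, and that an imputed label is kept only if it is strictly more informative than the annotated one and scores at least `min_score`. The names `Sample`, `informativeness`, `impute`, `training_targets`, `generic_cutoff`, and `min_score` are all illustrative.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Sample:
    subj: str
    obj: str
    predicate: str               # annotated relation label, e.g. "on"
    scores: dict[str, float]     # hypothetical model scores over predicates
    imputed: list[str] = field(default_factory=list)


def informativeness(samples):
    """Assumption: informativeness = inverse relative frequency of a predicate."""
    counts = Counter(s.predicate for s in samples)
    total = sum(counts.values())
    return {p: total / c for p, c in counts.items()}


def impute(samples, generic_cutoff, min_score=0.5):
    """Add one more informative label to samples whose label is generic (in place)."""
    info = informativeness(samples)
    for s in samples:
        # Labels above the cutoff are already informative; leave them unchanged.
        if info[s.predicate] > generic_cutoff:
            continue
        # Keep only predicates strictly more informative than the annotated one;
        # predicates never seen in the annotations get 0.0 and are excluded.
        candidates = {p: sc for p, sc in s.scores.items()
                      if info.get(p, 0.0) > info[s.predicate]}
        if not candidates:
            continue
        best = max(candidates, key=candidates.get)
        if candidates[best] >= min_score:
            s.imputed.append(best)
    return samples


def training_targets(samples):
    """Train on the existing annotation together with any imputed labels."""
    return [(s.subj, s.obj, [s.predicate] + s.imputed) for s in samples]


# Usage with made-up toy data: three generic 'on' labels, two informative ones.
samples = [
    Sample("car", "street", "on", {"on": 0.4, "parked on": 0.7}),
    Sample("cup", "table", "on", {"on": 0.8, "parked on": 0.1}),
    Sample("man", "bench", "on", {"on": 0.5, "sitting on": 0.6}),
    Sample("car", "road", "parked on", {"parked on": 0.9}),
    Sample("woman", "chair", "sitting on", {"sitting on": 0.8}),
]
targets = training_targets(impute(samples, generic_cutoff=2.0))
for subj, obj, labels in targets:
    print(subj, obj, labels)
```

In this toy run, the first and third 'on' samples gain 'parked on' and 'sitting on' respectively, while the 'cup on table' sample keeps only 'on' because no more informative predicate clears the score threshold. Keeping the original label alongside the imputed one mirrors the abstract's statement that the model is trained on imputed labels together with the existing annotations.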
