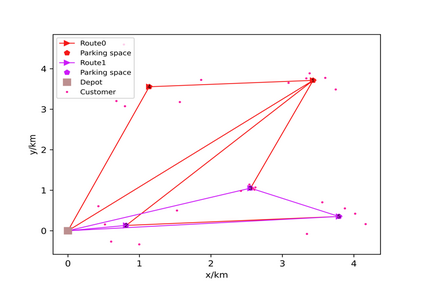

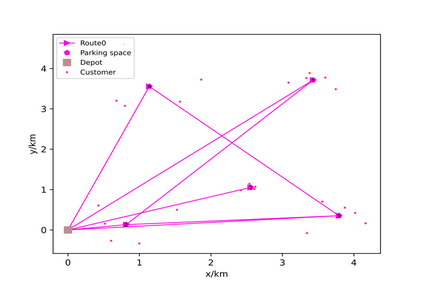

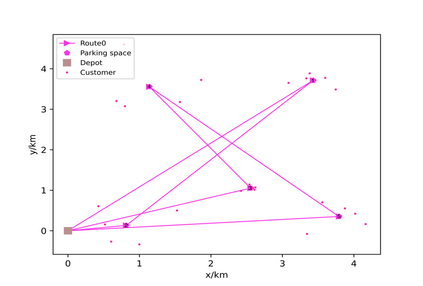

Mobile parcel lockers (MPLs) have recently been proposed by logistics operators as a technology that could help reduce traffic congestion and operational costs in urban freight distribution. Because they can relocate within their deployment area, MPLs have the potential to improve customer accessibility and convenience. In this study, we formulate the Mobile Parcel Locker Problem (MPLP), a special case of the Location-Routing Problem (LRP) that determines the optimal stopover locations of MPLs throughout the day and plans the corresponding delivery routes. A Hybrid Q-Learning-Network-based Method (HQM) is developed to cope with the computational complexity of the resulting large problem instances while escaping local optima. In addition, HQM is integrated with global and local search mechanisms to resolve the exploration-exploitation dilemma faced by classic reinforcement learning (RL) methods. We examine the performance of HQM under different problem sizes (up to 200 nodes) and benchmark it against a Genetic Algorithm (GA). Our results indicate that the average reward obtained by HQM is 1.96 times greater than that obtained by GA, which demonstrates that HQM has a stronger optimisation ability. Finally, we identify critical factors that contribute to fleet size requirements, travel distances, and service delays. Our findings indicate that the efficiency of MPLs depends mainly on the length of the time windows and the deployment of MPL stopovers.
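The exploration-exploitation dilemma of classic RL can be illustrated with a minimal sketch. This is not the authors' HQM, which combines a Q-learning network with global and local search; it assumes plain tabular Q-learning with epsilon-greedy exploration on a hypothetical toy instance: one MPL, three time periods, four candidate stopovers, and a per-period cost equal to the relocation distance plus the total customer-to-stopover distance. All coordinates and the names `period_cost`, `q_learning`, `greedy_route`, and `route_cost` are illustrative assumptions, and the learned stopover plan is checked against brute-force enumeration.

```python
import itertools
import math
import random

# Hypothetical toy instance (assumption, not from the paper): one MPL, three periods.
DEPOT = (0.0, 0.0)
CANDIDATES = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0), (5.0, 5.0)]  # possible stopovers
CUSTOMERS = [                                                   # customers active per period
    [(1.0, 1.0), (0.0, 2.0)],
    [(4.0, 1.0), (6.0, 0.0)],
    [(5.0, 4.0), (4.0, 6.0)],
]
T = len(CUSTOMERS)
N = len(CANDIDATES)


def period_cost(prev_xy, stop_idx, t):
    """Relocation distance plus total customer-to-stopover distance in period t."""
    stop = CANDIDATES[stop_idx]
    return math.dist(prev_xy, stop) + sum(math.dist(stop, c) for c in CUSTOMERS[t])


def location(loc):
    """Coordinates for a state location; -1 encodes the depot."""
    return DEPOT if loc == -1 else CANDIDATES[loc]


def q_learning(episodes=20000, alpha=0.5, gamma=1.0, epsilon=0.3, seed=0):
    """Tabular Q-learning; gamma=1.0 (undiscounted) because the horizon is finite."""
    rng = random.Random(seed)
    # State = (period, current location index); one Q-value per candidate stopover.
    q = {(t, loc): [0.0] * N for t in range(T) for loc in range(-1, N)}
    for _ in range(episodes):
        loc = -1  # every episode starts at the depot
        for t in range(T):
            s = (t, loc)
            # Epsilon-greedy: explore a random stopover, otherwise exploit the best one.
            if rng.random() < epsilon:
                a = rng.randrange(N)
            else:
                a = max(range(N), key=lambda i: q[s][i])
            r = -period_cost(location(loc), a, t)  # reward = negative cost
            # No bootstrap term after the last period (terminal state).
            future = 0.0 if t == T - 1 else max(q[(t + 1, a)])
            q[s][a] += alpha * (r + gamma * future - q[s][a])
            loc = a
    return q


def greedy_route(q):
    """Follow the greedy policy implied by the learned Q-table."""
    loc, route = -1, []
    for t in range(T):
        a = max(range(N), key=lambda i: q[(t, loc)][i])
        route.append(a)
        loc = a
    return route


def route_cost(route):
    """Total cost of visiting the given stopover indices, one per period."""
    prev, total = DEPOT, 0.0
    for t, a in enumerate(route):
        total += period_cost(prev, a, t)
        prev = CANDIDATES[a]
    return total


best = min(itertools.product(range(N), repeat=T), key=route_cost)
learned = greedy_route(q_learning())
print("Q-learning:", learned, round(route_cost(learned), 3))
print("brute force:", list(best), round(route_cost(best), 3))
```

On an instance this small, brute force is trivially cheap; the sketch only shows how epsilon-greedy action selection trades random exploration against greedy exploitation inside the Q-learning update loop, which is the dilemma the abstract says HQM addresses with additional global and local search at scale.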
