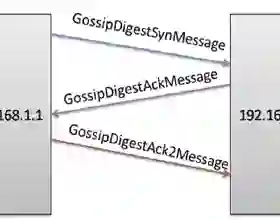

Decentralized optimization is increasingly popular in machine learning for its scalability and efficiency. Intuitively, it should also provide better privacy guarantees, as nodes only observe the messages sent by their neighbors in the network graph. But formalizing and quantifying this gain is challenging: existing results are typically limited to Local Differential Privacy (LDP) guarantees that overlook the advantages of decentralization. In this work, we introduce pairwise network differential privacy, a relaxation of LDP that captures the fact that the privacy leakage from a node $u$ to a node $v$ may depend on their relative position in the graph. We then analyze the combination of local noise injection with (simple or randomized) gossip averaging protocols on fixed and random communication graphs. We also derive a differentially private decentralized optimization algorithm that alternates between local gradient descent steps and gossip averaging. Our results show that these algorithms amplify privacy guarantees as a function of the distance between nodes in the graph, matching the privacy-utility trade-off of the trusted curator setting up to factors that explicitly depend on the graph topology. Finally, we illustrate our privacy gains with experiments on synthetic and real-world datasets.
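The two mechanisms mentioned above, local noise injection followed by gossip averaging, and decentralized optimization alternating local gradient steps with gossip, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume scalar private values, a fixed undirected graph, Metropolis-Hastings gossip weights (a common symmetric, doubly stochastic choice; the paper's gossip matrix may differ), and Gaussian noise with a single standard deviation `sigma`. All function names are hypothetical.

```python
import random


def metropolis_weights(n, edges):
    """Symmetric, doubly stochastic gossip matrix W for an undirected edge list.
    Assumption: Metropolis-Hastings weights, one common choice among many."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    W = [[0.0] * n for _ in range(n)]
    for u, v in edges:
        w = 1.0 / (1 + max(deg[u], deg[v]))
        W[u][v] = w
        W[v][u] = w
    for u in range(n):
        # Self-weight makes each row (and, by symmetry, each column) sum to 1.
        W[u][u] = 1.0 - sum(W[u][v] for v in range(n) if v != u)
    return W


def gossip_step(W, x):
    """One synchronous gossip round x <- W x: each node mixes with its neighbors."""
    n = len(x)
    return [sum(W[u][v] * x[v] for v in range(n)) for u in range(n)]


def noisy_gossip_average(values, edges, sigma, rounds, seed=0):
    """Hypothetical sketch: each node perturbs its private value once with
    Gaussian noise (local noise injection), then the network runs `rounds`
    gossip steps on the noisy values."""
    rng = random.Random(seed)
    W = metropolis_weights(len(values), edges)
    x = [v + rng.gauss(0.0, sigma) for v in values]
    for _ in range(rounds):
        x = gossip_step(W, x)
    return x


def decentralized_noisy_gd(grads, theta0, edges, lr, sigma, steps, gossip_rounds, seed=0):
    """Hypothetical sketch: at every step, each node u takes a gradient step on
    its own loss with Gaussian noise added to the gradient, then the network
    runs `gossip_rounds` gossip rounds on the resulting iterates."""
    rng = random.Random(seed)
    W = metropolis_weights(len(theta0), edges)
    theta = list(theta0)
    for _ in range(steps):
        # Local step: node u only queries its own gradient oracle grads[u].
        theta = [t - lr * (g(t) + rng.gauss(0.0, sigma)) for t, g in zip(theta, grads)]
        # Communication: gossip mixes the noisy iterates across the graph.
        for _ in range(gossip_rounds):
            theta = gossip_step(W, theta)
    return theta


if __name__ == "__main__":
    ring = [(i, (i + 1) % 5) for i in range(5)]  # 5-node cycle graph
    print(noisy_gossip_average([0.0, 1.0, 2.0, 3.0, 4.0], ring, sigma=1.0, rounds=20))
```

Because the gossip matrix is doubly stochastic, every gossip round preserves the network-wide sum, so the nodes converge toward the average of the *noisy* values. Intuitively, a node far from $u$ only receives $u$'s noisy contribution after it has been mixed with the noise of many intermediate nodes, which is the kind of distance-dependent effect the abstract refers to. The sketch does not compute any privacy guarantee.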

翻译:分散化优化在机器学习中越来越受欢迎,以了解其可缩放性和效率。 直观地说,它也应该提供更好的隐私保障, 因为节点只观察其邻居在网络图中发送的信息。 但是,正式化和量化这一收益具有挑战性: 现有结果通常局限于地方差异隐私(LDP)的保障,忽视了权力下放的好处。 在这项工作中,我们引入了双向网络差异隐私,LDP的放松反映了从节点美元到节点美元的隐私渗漏可能取决于其在图表中的相对位置。 然后我们分析了固定和随机通信图中本地噪音注入(简单或随机化的)八卦平均协议的组合。 我们还得出了一种差异化的私人分散化优化算法,在本地梯度下降步骤和八卦平均之间互换。 我们的结果显示,我们的算法扩大了隐私保障作为图表节点之间的距离函数的功能,与信任的馆长点的隐私使用权交易相匹配, 以及明确取决于图表表层的因素。 最后,我们用合成和真实世界数据设置的实验来说明我们的隐私成果。