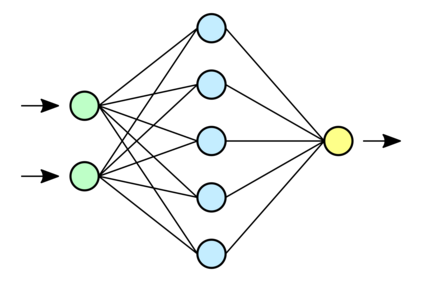

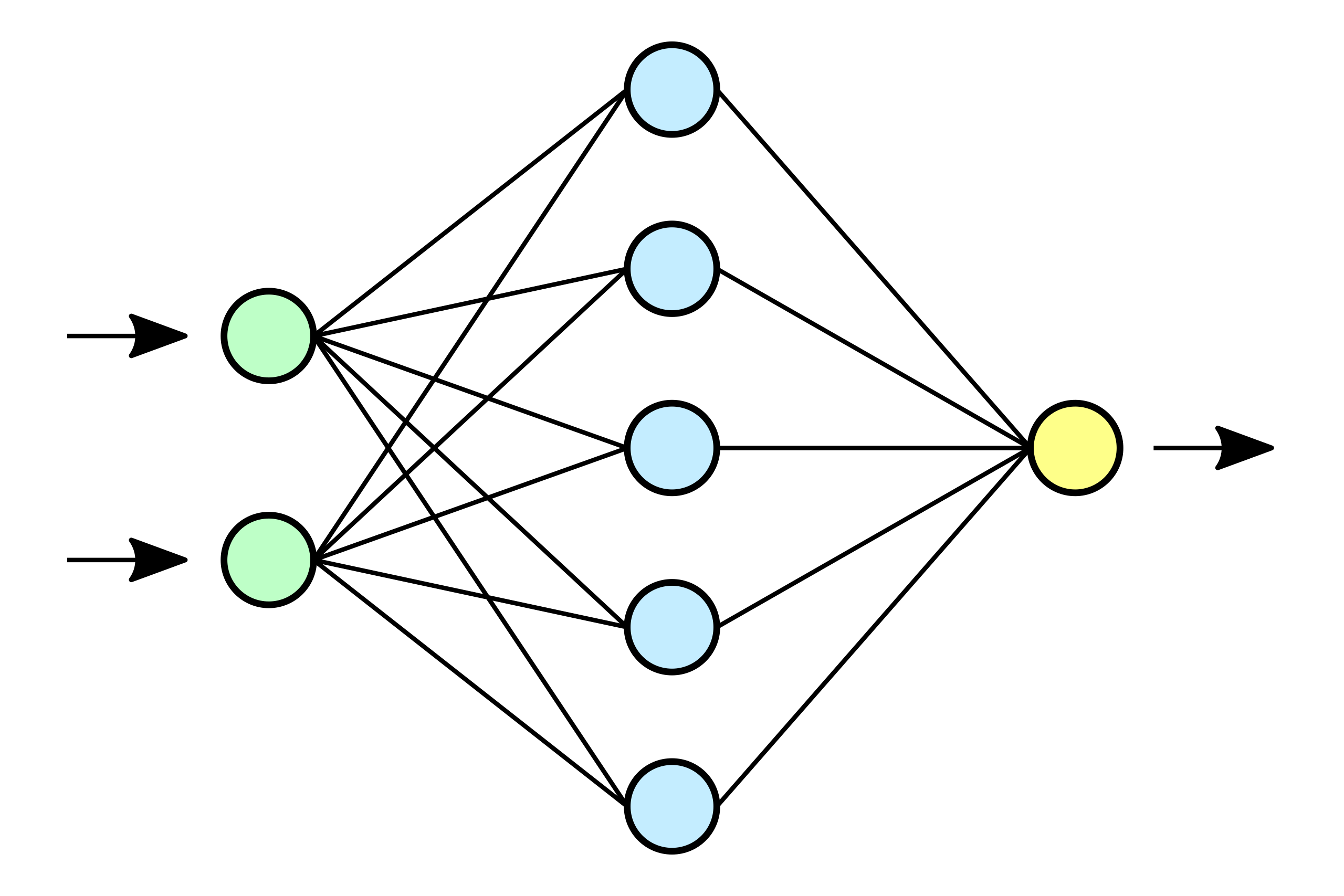

Datasets scraped from the internet have been critical to the successes of large-scale machine learning. Yet this very success puts the utility of future internet-derived datasets at potential risk, as model outputs begin to replace human annotations as a source of supervision. In this work, we first formalize a system in which interactions with a model are recorded as history and later scraped as training data. We then analyze the system's stability over time by tracking changes to a test-time bias statistic (e.g., the gender bias of model predictions). We find that the degree of bias amplification is closely linked to whether the model's outputs behave like samples from its training distribution, a property we characterize and define as consistent calibration. Experiments in three conditional prediction scenarios (image classification, visual role-labeling, and language generation) demonstrate that models exhibiting sampling-like behavior are more calibrated and thus more stable. Based on this insight, we propose an intervention to help calibrate and stabilize unstable feedback systems. Code is available at https://github.com/rtaori/data_feedback.
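To make the feedback loop concrete, here is a minimal toy simulation. It is not the authors' code (their implementation is in the linked repository), and it is only loosely in the spirit of their setup. It assumes a hypothetical "model" that does nothing but estimate the label frequency P(y=1) from its training set, a human label rate of 0.7, and that 80% of each round's newly scraped examples are model-annotated. All names and numbers (`simulate`, `model_fraction`, `human_rate`, and so on) are illustrative assumptions. The sketch contrasts an argmax predictor with one that samples from its predicted distribution, using the expected sampling outcome so that the result is deterministic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Dataset:
    positives: float  # (expected) number of examples labeled 1
    total: float      # total number of examples

    @property
    def rate(self) -> float:
        return self.positives / self.total


def model_positive_rate(train: Dataset, mode: str) -> float:
    """Fraction of test-time predictions that a hypothetical frequency model labels 1.

    "argmax": always output the most likely label.
    "sample": draw a label from the predicted distribution; we return the
              expected rate instead of sampling, to keep the sketch deterministic.
    """
    p = train.rate
    if mode == "argmax":
        return 1.0 if p >= 0.5 else 0.0
    if mode == "sample":
        return p
    raise ValueError(f"unknown mode: {mode}")


def simulate(mode: str, rounds: int = 10, human_rate: float = 0.7,
             n_initial: int = 1000, n_per_round: int = 1000,
             model_fraction: float = 0.8) -> list[tuple[float, float]]:
    """Return (training-data rate, prediction rate) at the start of each round."""
    data = Dataset(positives=human_rate * n_initial, total=n_initial)
    history = []
    for _ in range(rounds):
        # Train on the current (partly model-generated) dataset and record
        # the test-time bias statistic: the model's positive-prediction rate.
        pred_rate = model_positive_rate(data, mode)
        history.append((data.rate, pred_rate))
        # Scrape new data: some annotated by humans, the rest by the model.
        n_model = model_fraction * n_per_round
        n_human = n_per_round - n_model
        data = Dataset(
            positives=data.positives + human_rate * n_human + pred_rate * n_model,
            total=data.total + n_per_round,
        )
    return history


if __name__ == "__main__":
    for mode in ("argmax", "sample"):
        data_rate, pred_rate = simulate(mode)[-1]
        print(f"{mode}: training-data rate {data_rate:.3f}, prediction rate {pred_rate:.3f}")
```

Under these assumptions the argmax model labels every input as 1 from the first round on (a prediction rate of 1.0 versus 0.7 in the human data), and its scraped outputs pull the training-data rate toward 0.2 × 0.7 + 0.8 × 1.0 = 0.94. The sampling model's predictions match its training distribution, so both statistics stay at 0.7. This is a deliberately simplified illustration of why sampling-like behavior keeps the loop stable; the paper's formal definition of consistent calibration and its experiments are more general.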
