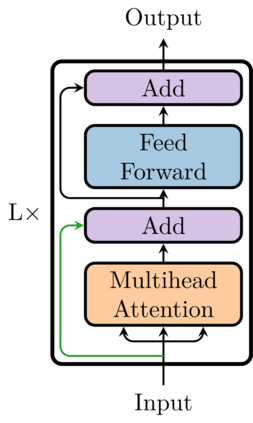

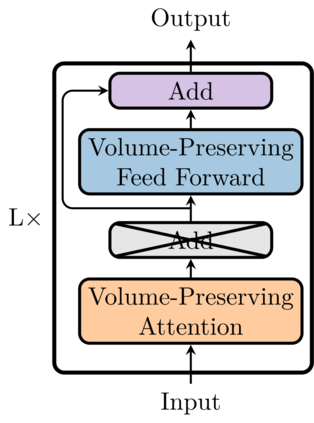

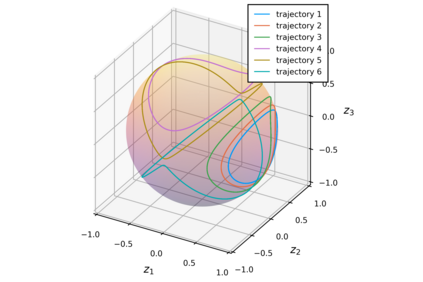

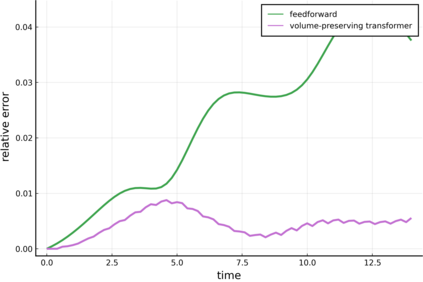

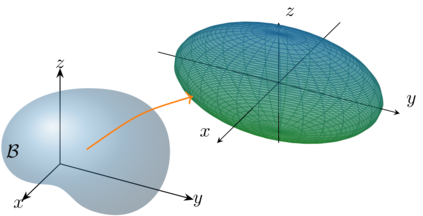

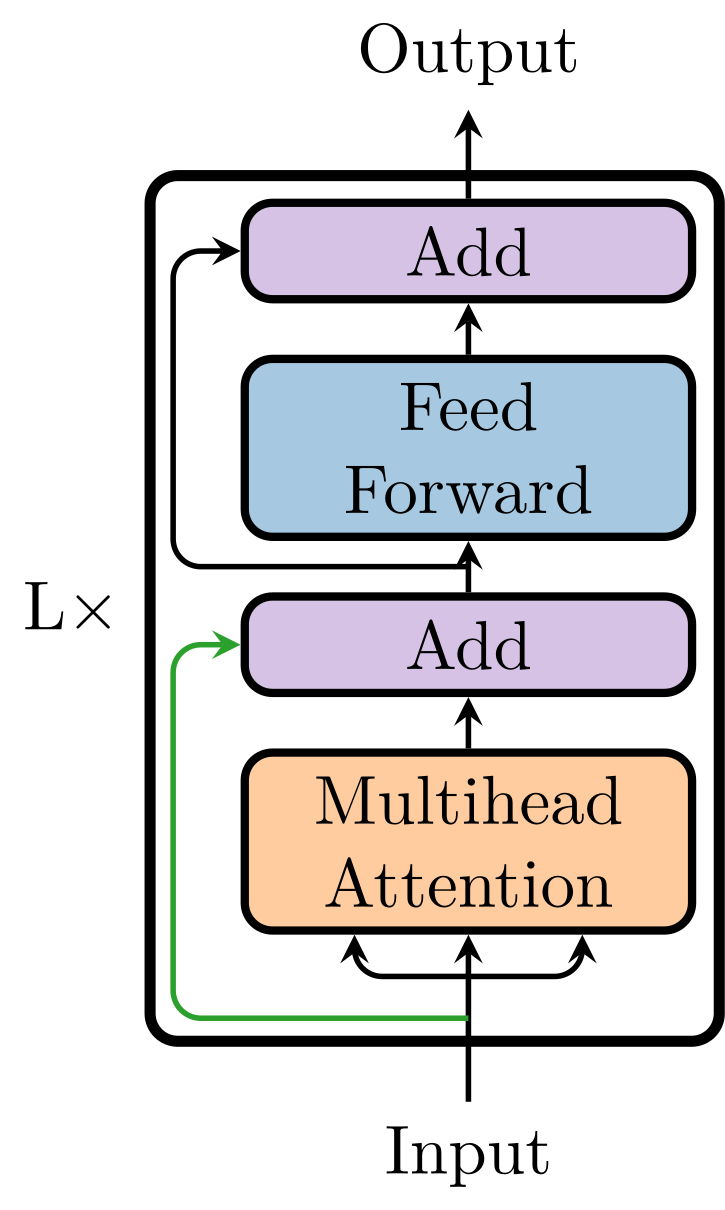

Two of the many trends in neural network research of the past few years have been (i) the learning of dynamical systems, especially with recurrent neural networks such as long short-term memory networks (LSTMs) and (ii) the introduction of transformer neural networks for natural language processing (NLP) tasks. Both of these trends have created enormous amounts of traction, particularly the second one: transformer networks now dominate the field of NLP. Even though some work has been performed on the intersection of these two trends, those efforts was largely limited to using the vanilla transformer directly without adjusting its architecture for the setting of a physical system. In this work we use a transformer-inspired neural network to learn a dynamical system and furthermore (for the first time) imbue it with structure-preserving properties to improve long-term stability. This is shown to be of great advantage when applying the neural network to real world applications.

翻译:暂无翻译