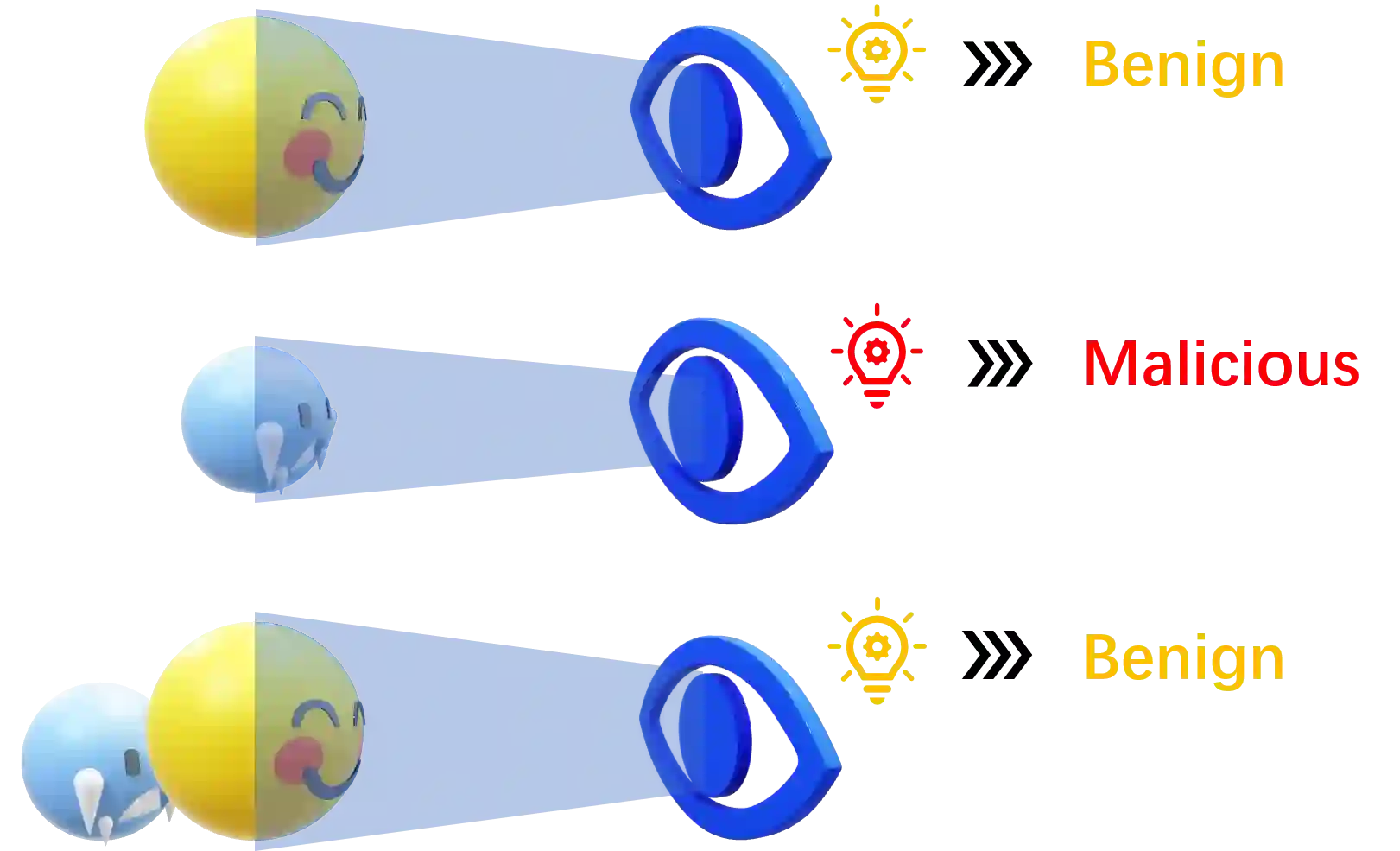

Outsourced deep neural networks have been shown to be vulnerable to patch-based trojan attacks, in which an adversary poisons the training set to inject a backdoor into the resulting model: regular inputs are still labeled correctly, while inputs carrying a specific trigger are misclassified as a target label. Because of the severity of such attacks, many backdoor detection and containment systems have recently been proposed for deep neural networks. One major category among them is model inspection schemes, which aim to detect backdoors before models from untrusted third parties are deployed. In this paper, we show that such state-of-the-art schemes can be defeated by a so-called Scapegoat Backdoor Attack, which introduces a benign scapegoat trigger during data poisoning to prevent the defender from reverse-engineering the real, abnormal trigger. In addition, during training it confines the values of the network parameters to the same variances as those of a clean model, which makes it significantly harder for the defender to learn the differences between legitimate and backdoored models through machine-learning approaches. Our experiments on three popular datasets show that the attack escapes detection by all five state-of-the-art model inspection schemes. Moreover, compared with the original patch-based trojan attacks, it has almost no side effects on attack effectiveness and preserves the universality of the trigger.
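
To make the threat model concrete, the following is a minimal sketch of the generic patch-based data poisoning described in the first sentence of the abstract, not the Scapegoat Backdoor Attack itself. The function names (`stamp_patch`, `poison_dataset`), the random toy data, the 4×4 white patch, the 10% poisoning rate, and the target label 7 are all illustrative assumptions rather than details taken from the paper.

```python
import numpy as np


def stamp_patch(images, patch, top, left):
    """Return a copy of `images` (N, H, W, C) with `patch` (h, w, C) pasted at (top, left)."""
    out = images.copy()
    h, w = patch.shape[:2]
    out[:, top:top + h, left:left + w, :] = patch
    return out


def poison_dataset(images, labels, patch, target_label, rate, rng, top=0, left=0):
    """Stamp the trigger on a random fraction `rate` of samples and relabel them.

    Hypothetical helper for illustration: returns the poisoned images, the
    poisoned labels, and the indices of the samples that were modified.
    """
    n = len(images)
    n_poison = int(round(rate * n))
    idx = rng.choice(n, size=n_poison, replace=False)
    x = images.copy()
    y = labels.copy()
    x[idx] = stamp_patch(images[idx], patch, top, left)
    y[idx] = target_label  # triggered samples are assigned the attacker's target label
    return x, y, idx


# Toy data standing in for a real image dataset (assumption: 32x32 RGB, 10 classes).
rng = np.random.default_rng(0)
images = rng.random((100, 32, 32, 3), dtype=np.float32)
labels = rng.integers(0, 10, size=100)

# Assumed trigger: a 4x4 white square in the bottom-right corner.
patch = np.ones((4, 4, 3), dtype=np.float32)
x_p, y_p, idx = poison_dataset(
    images, labels, patch, target_label=7, rate=0.1, rng=rng, top=28, left=28
)
```

A model trained on `(x_p, y_p)` would be expected to behave normally on clean inputs while associating the patch with the target label. The scapegoat trigger and the parameter-variance constraint described in the abstract are additional components that this sketch does not attempt to reproduce.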
