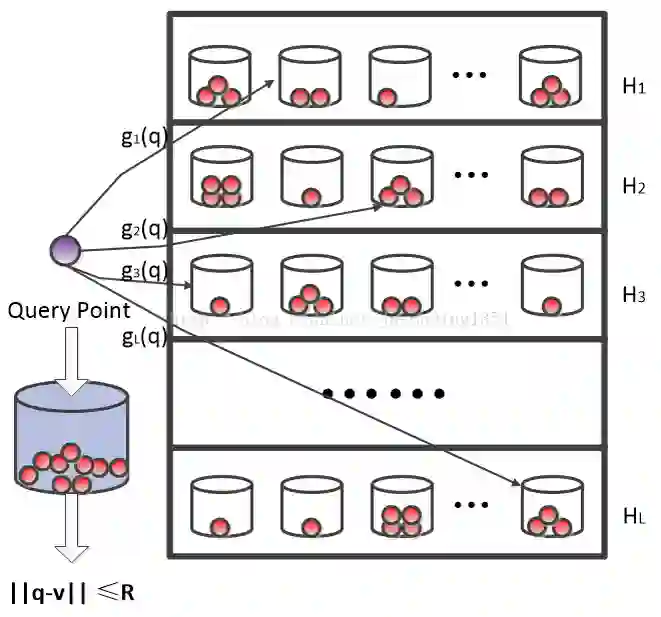

Owing to its powerful feature learning capability and high efficiency, deep hashing has achieved great success in large-scale image retrieval. Meanwhile, extensive work has demonstrated that deep neural networks (DNNs) are susceptible to adversarial examples, and adversarial attacks against deep hashing have attracted considerable research effort. Nevertheless, the backdoor attack, another well-known threat to DNNs, has not yet been studied for deep hashing. Although various backdoor attacks have been proposed for image classification, existing approaches fail to realize a truly imperceptible backdoor attack that combines invisible triggers with a clean-label setting, and they cannot meet the intrinsic demands of backdoor attacks on image retrieval. In this paper, we propose BadHash, the first generative-based imperceptible backdoor attack against deep hashing, which can effectively generate invisible, input-specific poisoned images with clean labels. Specifically, we first propose a new conditional generative adversarial network (cGAN) pipeline to generate poisoned samples: for any given benign image, it seeks to generate a natural-looking poisoned counterpart with a unique invisible trigger. To improve attack effectiveness, we introduce a label-based contrastive learning network, LabCLN, to exploit the semantic characteristics of different labels, which are subsequently used to confuse and mislead the target model into learning the embedded trigger. Finally, we explore the mechanism of backdoor attacks on image retrieval in the hash space. Extensive experiments on multiple benchmark datasets verify that BadHash can generate imperceptible poisoned samples with strong attack ability and transferability across state-of-the-art deep hashing schemes.

Primary Subject Area: [Engagement] Multimedia Search and Recommendation
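To make the retrieval setting concrete, the following minimal Python sketch shows how hashing-based retrieval ranks database items by Hamming distance between binary codes, and what a successful backdoor would look like in the hash space. It is an illustration only, not BadHash itself: the 8-bit codes, the names `hamming` and `retrieve`, and the assumption that a triggered query's code lands near the target label's codes are all hypothetical.

```python
from typing import List, Sequence


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions where two equal-length binary codes differ."""
    if len(a) != len(b):
        raise ValueError("hash codes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def retrieve(query: Sequence[int], database: List[Sequence[int]], top_k: int = 2) -> List[int]:
    """Return the indices of the top_k database codes closest to the query."""
    order = sorted(range(len(database)), key=lambda i: hamming(query, database[i]))
    return order[:top_k]


# Toy 8-bit hash codes, made up for illustration (not produced by any model).
database = [
    [1, 1, 1, 1, 0, 0, 0, 0],  # index 0: image of the query's true label
    [1, 1, 1, 0, 0, 0, 0, 0],  # index 1: image of the query's true label
    [0, 0, 0, 0, 1, 1, 1, 1],  # index 2: image of the attacker's target label
    [0, 0, 0, 1, 1, 1, 1, 1],  # index 3: image of the attacker's target label
]

# Code of a benign query: close to the codes of its own label.
clean_query = [1, 1, 1, 1, 0, 0, 0, 1]

# Assumed effect of a backdoor: the same image with a trigger is hashed
# close to the target label's codes instead.
triggered_query = [0, 0, 0, 0, 1, 1, 1, 0]

clean_result = retrieve(clean_query, database)
triggered_result = retrieve(triggered_query, database)
print(clean_result, triggered_result)
```

In this toy setup the benign query retrieves items of its own label, while the triggered query retrieves items of the target label, which is the behaviour a retrieval backdoor aims for.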

