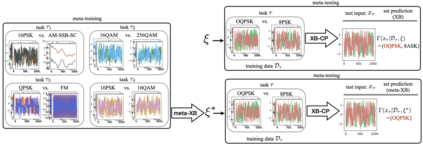

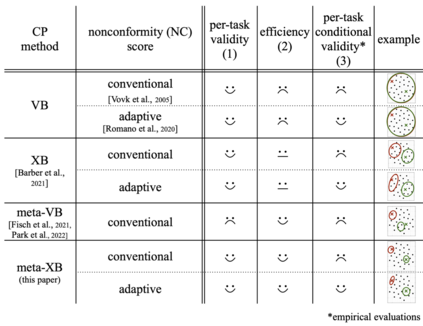

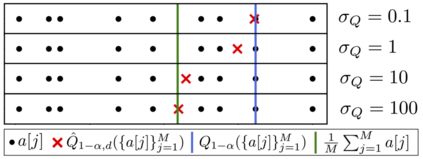

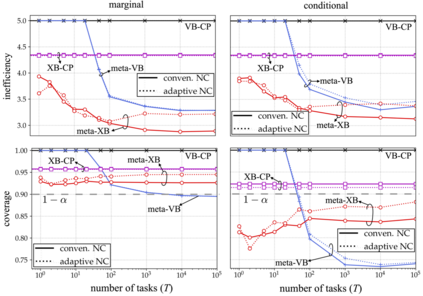

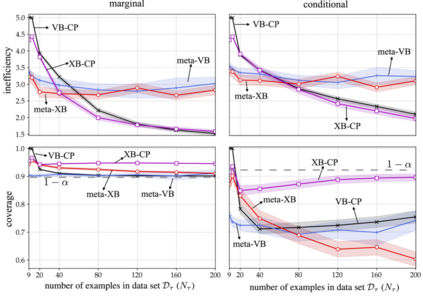

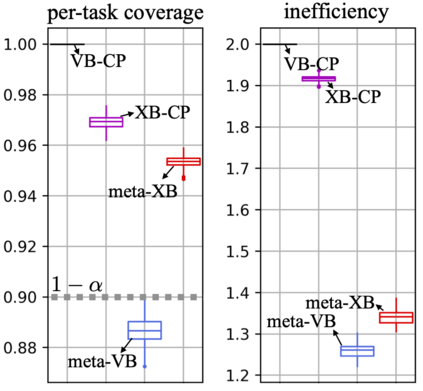

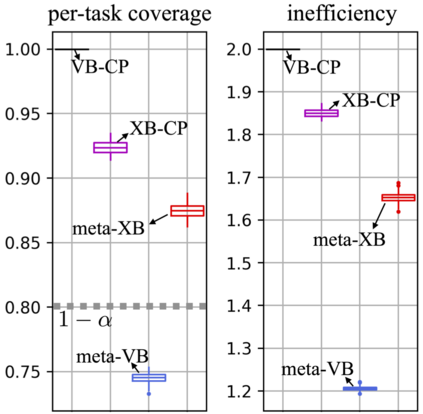

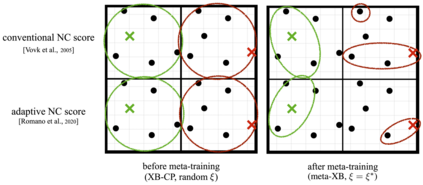

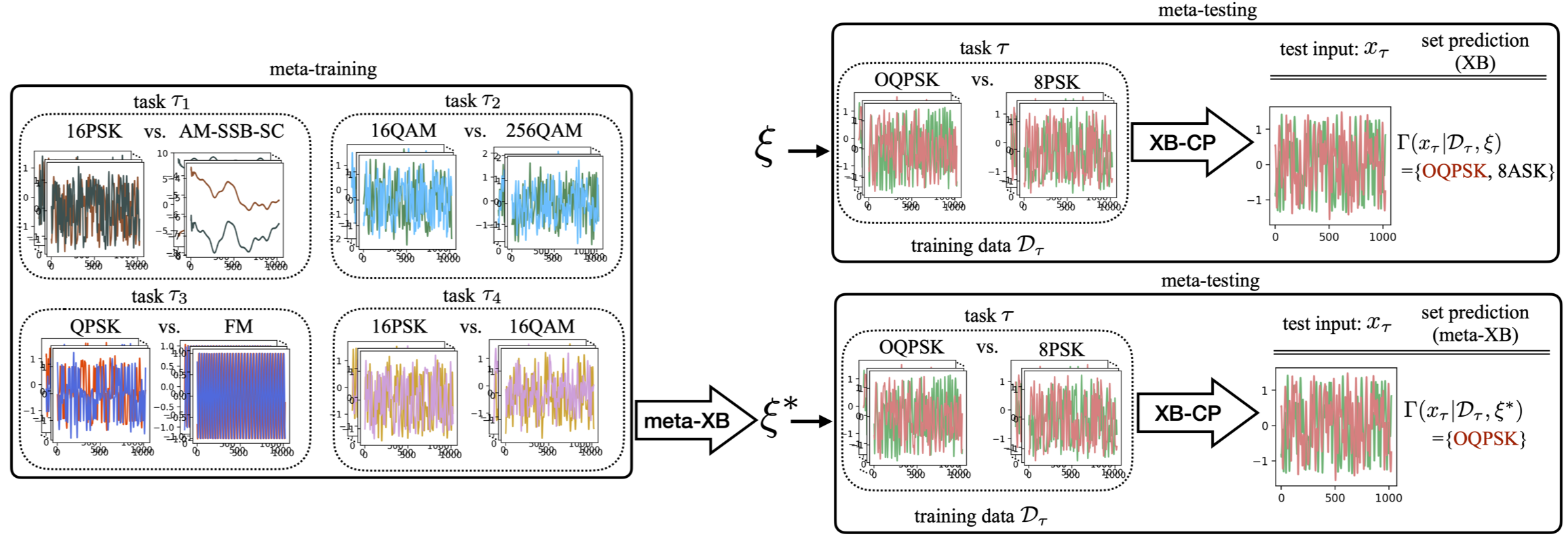

Conventional frequentist learning is known to yield poorly calibrated models that fail to reliably quantify the uncertainty of their decisions. Bayesian learning can improve calibration, but its formal guarantees apply only under restrictive assumptions of correct model specification. Conformal prediction (CP) offers a general framework for the design of set predictors with calibration guarantees that hold regardless of the underlying data-generation mechanism, as long as the data are exchangeable. However, when training data are limited, CP tends to produce large, and hence uninformative, predicted sets. This paper introduces a novel meta-learning solution that aims to reduce the size of the predicted sets. Unlike prior work, the proposed meta-learning scheme, referred to as meta-XB, (i) builds on cross-validation-based CP, rather than the less efficient validation-based CP; and (ii) preserves formal per-task calibration guarantees, rather than the less stringent task-marginal guarantees. Finally, meta-XB is extended to adaptive nonconformity scores, which are shown empirically to further enhance marginal per-input calibration.
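To make the contrast in point (i) concrete, the following is a minimal sketch of the two base set predictors that meta-XB builds on: validation-based (split) CP and a CV+-style cross-validation-based CP. It does not implement meta-XB's meta-learning step. The toy nearest-centroid classifier, the nonconformity score 1 − p(y|x), the round-robin fold assignment, and all function names (`fit_centroids`, `score`, `split_cp_set`, `cv_cp_set`) are illustrative assumptions, not taken from the paper. Split CP uses part of the data only for calibration and, under exchangeability, covers the true label with probability at least 1 − α. The cross-validation variant reuses every example for both training and calibration, at the cost of a looser bound (roughly 1 − 2α for CV+-style sets).

```python
import math

def fit_centroids(data, num_classes):
    """Hypothetical toy model: per-class mean of scalar features."""
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for x, y in data:
        sums[y] += x
        counts[y] += 1
    # Classes missing from the training subset fall back to a centroid at 0.0.
    return [sums[c] / counts[c] if counts[c] else 0.0 for c in range(num_classes)]

def score(centroids, x, y):
    """Assumed nonconformity score 1 - p(y | x), with p a softmax over -(x - centroid)^2."""
    logits = [-(x - m) ** 2 for m in centroids]
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    # Summing the other labels' mass avoids cancellation in 1 - p when p is near 1.
    return sum(e for c, e in enumerate(exps) if c != y) / sum(exps)

def split_cp_set(train, cal, x, alpha, num_classes):
    """Validation-based (split) CP: train on `train`, calibrate on held-out `cal`."""
    model = fit_centroids(train, num_classes)
    cal_scores = sorted(score(model, xi, yi) for xi, yi in cal)
    n = len(cal_scores)
    k = math.ceil((1 - alpha) * (n + 1))
    if k > n:
        # Too few calibration points for this alpha: the threshold is +inf.
        return list(range(num_classes))
    threshold = cal_scores[k - 1]
    return [y for y in range(num_classes) if score(model, x, y) <= threshold]

def cv_cp_set(data, x, alpha, num_classes, folds):
    """CV+-style CP: every example is used for both training and calibration."""
    n = len(data)
    fold_of = [i % folds for i in range(n)]
    # One model per fold, each trained with that fold left out.
    models = [
        fit_centroids([d for j, d in enumerate(data) if fold_of[j] != k], num_classes)
        for k in range(folds)
    ]
    # Out-of-fold score of each example under the model that did not see it.
    oof = [score(models[fold_of[i]], xi, yi) for i, (xi, yi) in enumerate(data)]
    pred = []
    for y in range(num_classes):
        # Count examples that conform better than the candidate (x, y) under the same model.
        n_better = sum(score(models[fold_of[i]], x, y) > oof[i] for i in range(n))
        if n_better < (1 - alpha) * (n + 1):
            pred.append(y)
    return pred

# Toy data: three well-separated classes centred at 0, 5 and 10.
data = [(5 * c + d, c) for c in range(3) for d in (-1.0, -0.5, 0.0, 0.5, 1.0)]
train, cal = data[0::2], data[1::2]

print(split_cp_set(train, cal, 0.0, 0.2, 3))   # → [0]
print(cv_cp_set(data, 0.0, 0.2, 3, folds=3))    # → [0]
print(split_cp_set(train, cal, 0.0, 0.01, 3))  # → [0, 1, 2]
print(cv_cp_set(data, 0.0, 0.01, 3, folds=3))   # → [0, 1, 2]
```

With only 15 examples and α = 0.01, both predictors return every label. This is the uninformative behavior on limited data described above. At α = 0.2, both shrink to a single label on this well-separated toy data.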

翻译:据了解,常规常年学习会产生错误的模型,无法可靠地量化其决定的不确定性。贝叶斯学习可以改进校准,但正式的保证只有在对正确模型规格的限制性假设下才能适用。非正式预测(CP)为设计成套的预测器提供了一个总框架,无论基本数据生成机制如何,都具有校准保证。然而,当培训数据有限时,CP往往产生大数,从而产生非信息化的预测数据集。本文件引入了一个新的元学习解决方案,目的是减少设定的预测规模。与以前的工作不同,拟议的元学习方案(称为元预算外)是建立在基于交叉校准的CP的基础上,而不是建立在效率较低的验证基CP上;以及(二) 保留正式的每个任务校准保证,而不是不太严格的任务边际保证。最后,元预算外扩大到适应性非常规评分,从经验上显示,以进一步加强边际的人均投入校准。