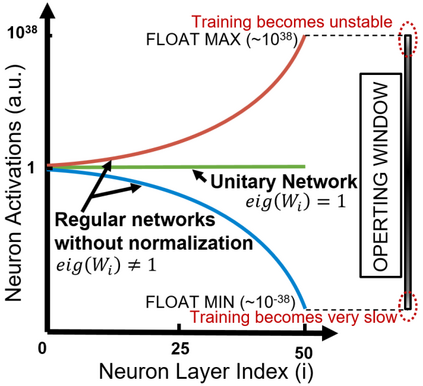

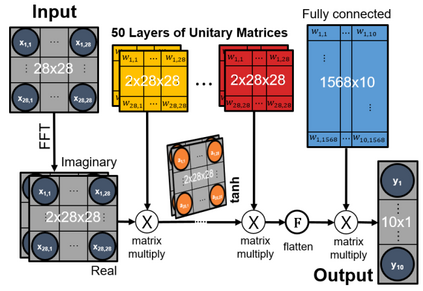

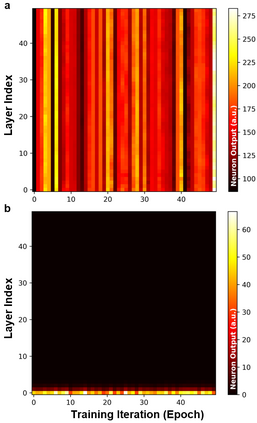

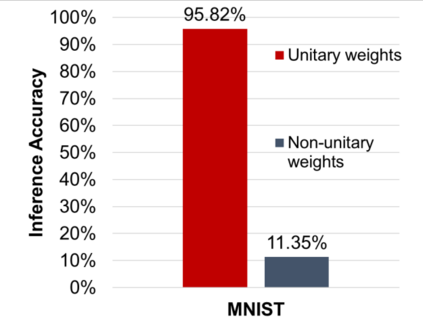

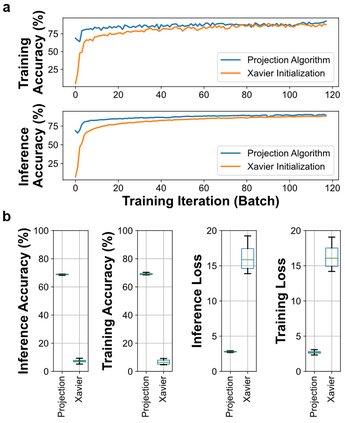

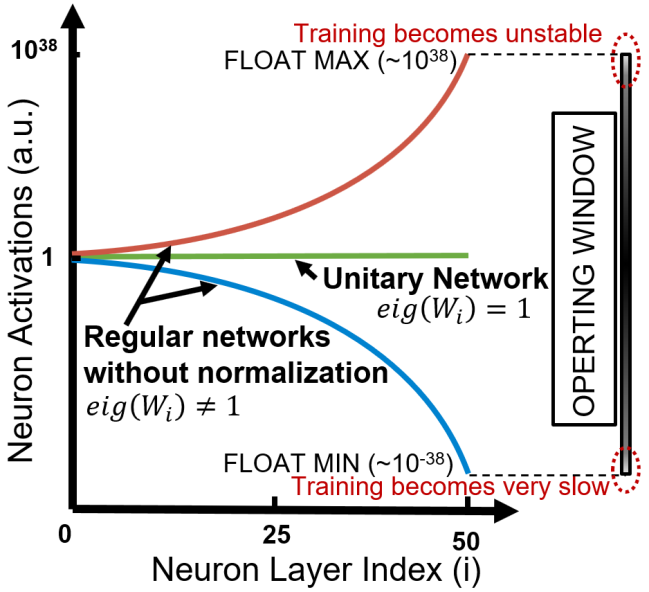

Unitary neural networks are a promising alternative for addressing the exploding and vanishing activation/gradient problem without explicit normalization, which reduces inference speed. However, they often require longer training times because of the additional unitary constraints on their weight matrices. Here we present a novel algorithm that uses a backpropagation technique based on Lie algebra to compute approximate unitary weights from their pre-trained, non-unitary counterparts. Unitary networks initialized with these approximations reach the desired accuracies much faster, mitigating the training-time penalty while maintaining the inference speedup. Our approach will be instrumental in the adoption of unitary networks, especially for neural architectures where pre-trained weights are freely available.
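To make the idea concrete, the following is a minimal JAX sketch of one way such an approximation could be computed. It is not the paper's algorithm. It assumes a Frobenius-norm objective, a skew-Hermitian (Lie algebra) parameterization mapped to the unitary group by the matrix exponential, and plain gradient descent. The names `to_unitary`, `loss_fn`, and `fit_unitary`, the step count, and the learning rate are hypothetical choices for illustration, and the "pre-trained" matrix `W` is a random stand-in.

```python
import jax
import jax.numpy as jnp
from jax.scipy.linalg import expm

# Use double precision so the unitarity check below is tight.
jax.config.update("jax_enable_x64", True)


def to_unitary(params):
    """Map two real n x n matrices to a unitary matrix via the exponential map."""
    P, Q = params
    # A is skew-Hermitian (A^H = -A), i.e. an element of the Lie algebra u(n).
    A = (P - P.T) + 1j * (Q + Q.T)
    return expm(A)


def loss_fn(params, W):
    """Squared Frobenius distance between exp(A) and the target matrix W."""
    D = to_unitary(params) - W
    return jnp.sum((D * D.conj()).real)


def fit_unitary(W, steps=300, lr=0.05):
    """Gradient descent on the Lie algebra parameters (assumed settings)."""
    n = W.shape[0]
    params = (jnp.zeros((n, n)), jnp.zeros((n, n)))  # start at U = identity
    grad_fn = jax.jit(jax.grad(loss_fn))  # backpropagates through expm
    for _ in range(steps):
        grads = grad_fn(params, W)
        params = tuple(p - lr * g for p, g in zip(params, grads))
    return to_unitary(params), params


# Hypothetical stand-in for a pre-trained, non-unitary weight matrix.
n = 4
key_re, key_im = jax.random.split(jax.random.PRNGKey(0))
noise = jax.random.normal(key_re, (n, n)) + 1j * jax.random.normal(key_im, (n, n))
W = jnp.eye(n) + 0.2 * noise

U, fitted_params = fit_unitary(W)
initial_loss = loss_fn((jnp.zeros((n, n)), jnp.zeros((n, n))), W)
final_loss = loss_fn(fitted_params, W)
unitarity_error = jnp.max(jnp.abs(U.conj().T @ U - jnp.eye(n)))
```

Because every iterate is the exponential of a skew-Hermitian matrix, `U` stays unitary throughout the optimization, so no projection or re-normalization step is needed. In this sketch the resulting `U` would then serve as the initial weight of the corresponding unitary layer.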
