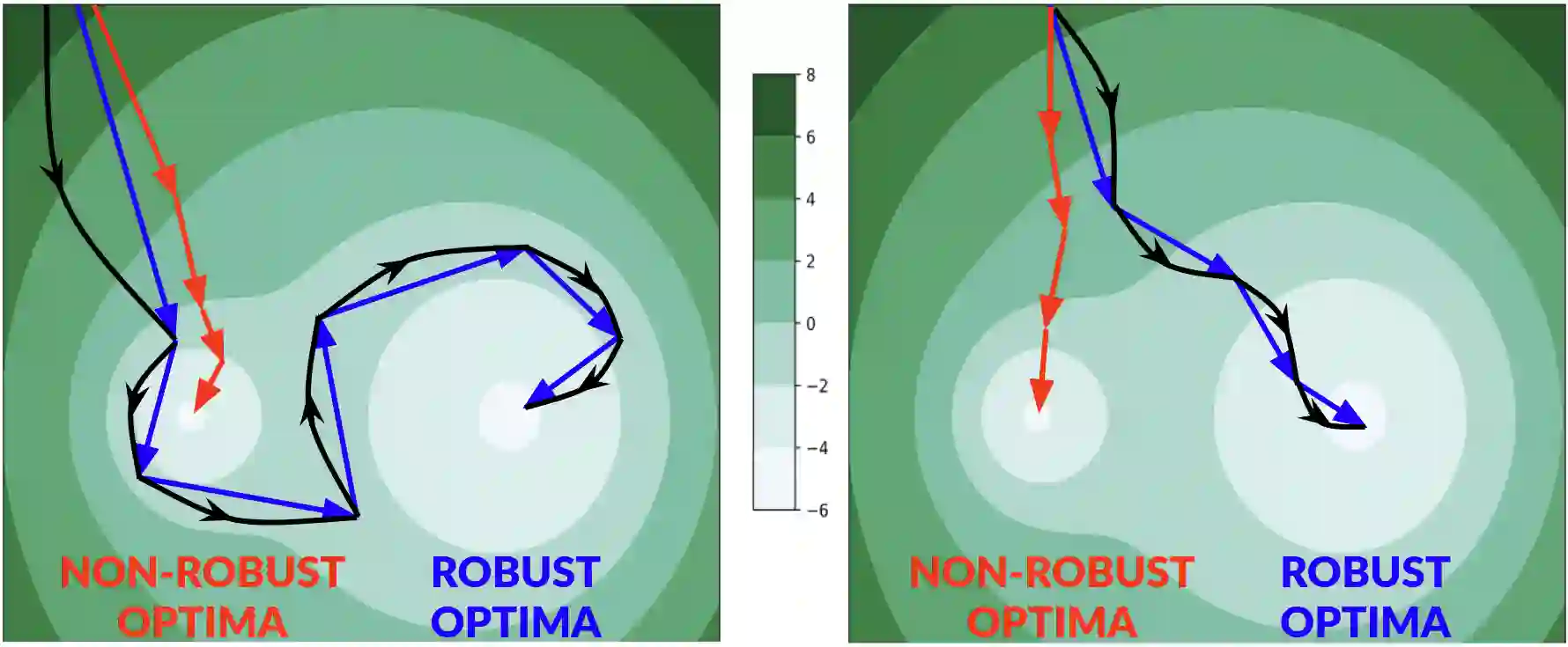

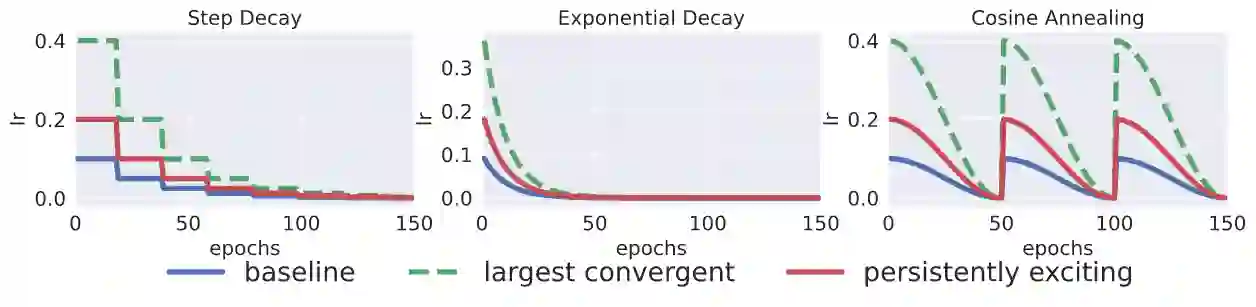

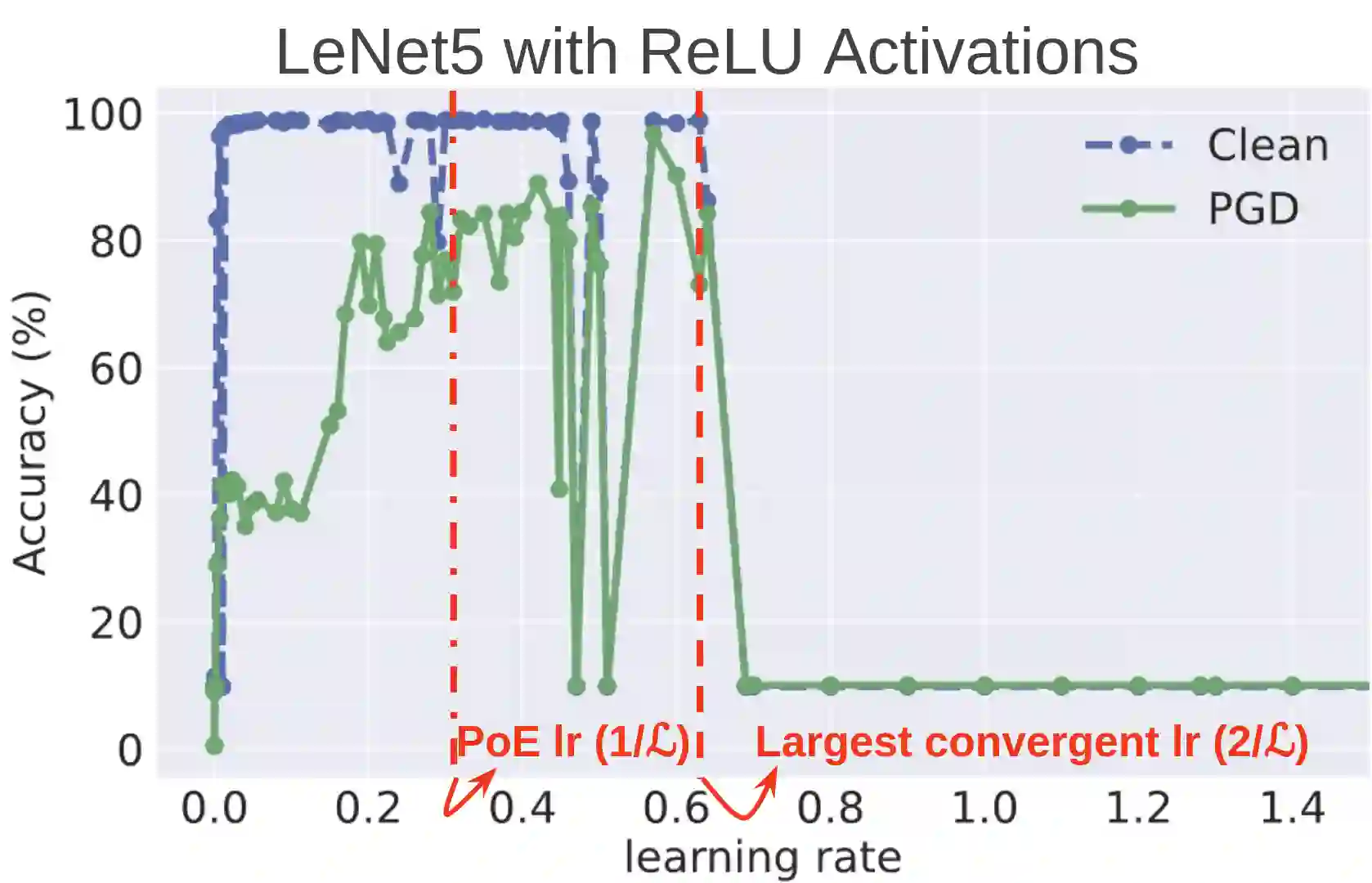

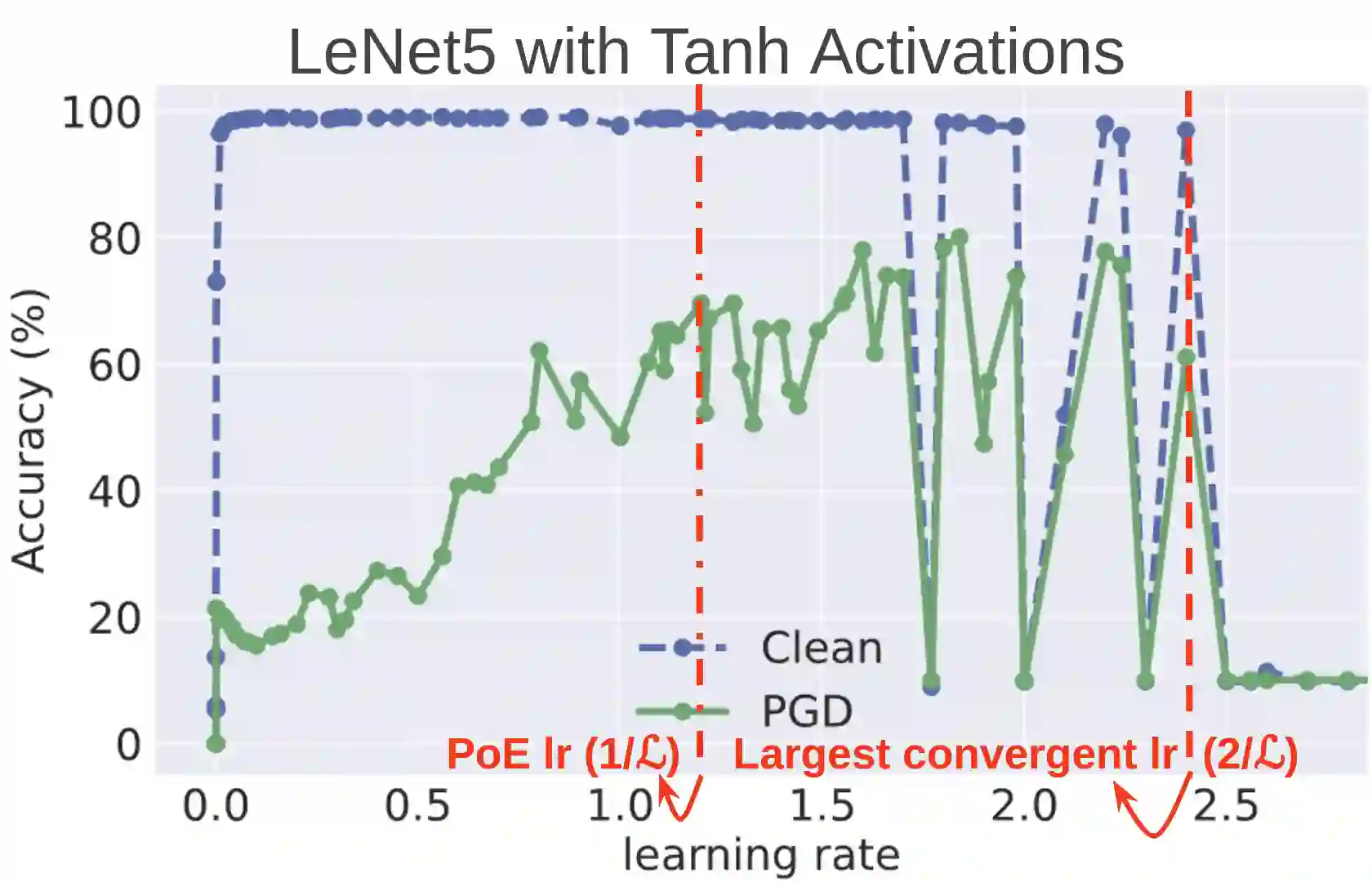

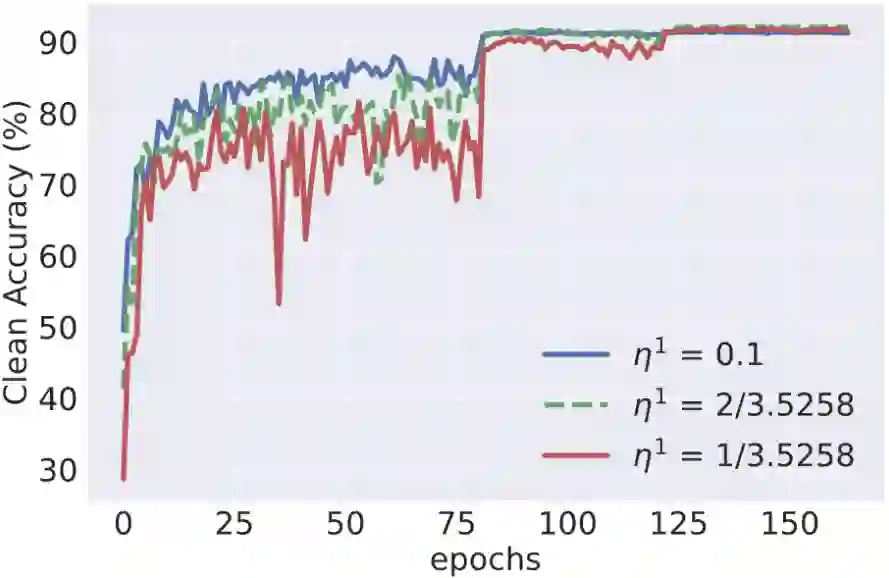

Improving the adversarial robustness of neural networks remains a major challenge. Fundamentally, training a network is a parameter estimation problem. In adaptive control theory, maintaining persistency of excitation (PoE) is integral to ensuring that parameter estimates in dynamical systems converge to their robust optima. In this work, we show that network training using gradient descent is equivalent to a dynamical system parameter estimation problem. Leveraging this relationship, we prove that a sufficient condition for PoE of gradient descent is that the learning rate be less than the inverse of the Lipschitz constant of the gradient of the loss function. We provide an efficient technique for estimating the corresponding Lipschitz constant using extreme value theory, and demonstrate that by only scaling the learning rate schedule we can increase adversarial accuracy by up to 15 percentage points on benchmark datasets. Our approach also universally increases adversarial accuracy by 0.1 to 0.3 percentage points across various state-of-the-art adversarially trained models on the AutoAttack benchmark, where every small margin of improvement is significant.
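The core recipe described above has three parts: estimate the Lipschitz constant $L$ of the loss gradient, keep the learning rate below $1/L$, and reach that bound by rescaling an existing schedule. The sketch below shows these steps under loudly stated assumptions; it is not the authors' implementation. It uses a hypothetical toy quadratic loss whose true gradient Lipschitz constant is known (3.0). The estimator keeps the maximum of each batch of sampled gradient-difference ratios and returns the largest one. This is a simplified stand-in: a full extreme-value-theory approach would fit a reverse Weibull distribution to those batch maxima and use its location parameter. The safety margin of 0.9 and the step-decay base schedule are also hypothetical choices.

```python
import math
import random

# Hypothetical toy loss f(w) = 0.5 * w^T A w. Its gradient is A w, so the true
# Lipschitz constant of the gradient is the largest eigenvalue of A (3.0 here).
A = [[3.0, 0.0],
     [0.0, 0.5]]


def grad(w):
    """Gradient of the toy quadratic loss, i.e. the product A @ w."""
    return [sum(A[i][j] * w[j] for j in range(len(w))) for i in range(len(A))]


def norm(v):
    """Euclidean norm of a vector given as a list."""
    return math.sqrt(sum(x * x for x in v))


def estimate_grad_lipschitz(n_batches=50, batch_size=100, radius=1.0, seed=0):
    """Estimate L = sup ||grad(x) - grad(y)|| / ||x - y|| from random pairs.

    The largest ratio in each batch is kept and the largest batch maximum is
    returned. Sampled ratios can only under-estimate the supremum; an EVT
    approach would instead fit a reverse Weibull distribution to the batch
    maxima and use its location parameter as the estimate.
    """
    rng = random.Random(seed)
    batch_maxima = []
    for _ in range(n_batches):
        best = 0.0
        for _ in range(batch_size):
            x = [rng.uniform(-radius, radius) for _ in range(2)]
            y = [rng.uniform(-radius, radius) for _ in range(2)]
            dist = norm([a - b for a, b in zip(x, y)])
            if dist == 0.0:
                continue  # skip degenerate pairs
            gdiff = norm([a - b for a, b in zip(grad(x), grad(y))])
            best = max(best, gdiff / dist)
        batch_maxima.append(best)
    return max(batch_maxima)


def scale_schedule(schedule, L_hat, margin=0.9):
    """Rescale a learning-rate schedule so its peak equals margin / L_hat.

    margin < 1 (a hypothetical choice) leaves room for the fact that L_hat
    under-estimates the true constant.
    """
    factor = (margin / L_hat) / max(schedule)
    return [lr * factor for lr in schedule]


def gradient_descent(lrs, w0=(1.0, 1.0)):
    """Plain gradient descent that uses lrs[t] as the step size at step t."""
    w = list(w0)
    for lr in lrs:
        w = [wi - lr * gi for wi, gi in zip(w, grad(w))]
    return w


L_hat = estimate_grad_lipschitz()
base_schedule = [1.0] * 100 + [0.1] * 100  # hypothetical step-decay schedule
lrs = scale_schedule(base_schedule, L_hat)
w_final = gradient_descent(lrs)
print(f"L_hat={L_hat:.4f}  peak lr={max(lrs):.4f}  ||w_final||={norm(w_final):.2e}")
```

Only the overall scale of the schedule changes. Its shape (here, a tenfold decay halfway through) is preserved, which mirrors the abstract's claim that scaling the learning rate schedule is the only intervention.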
