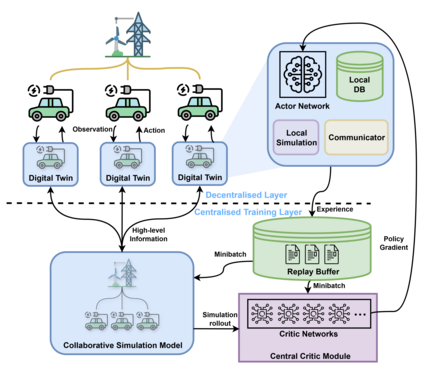

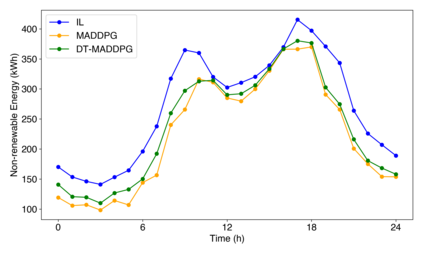

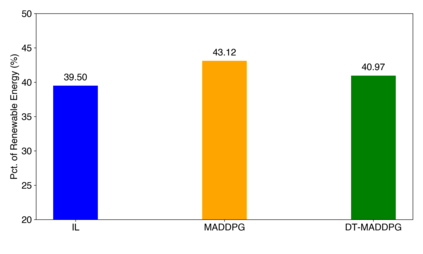

The coordination of large-scale, decentralised systems, such as a fleet of Electric Vehicles (EVs) in a Vehicle-to-Grid (V2G) network, presents a significant challenge for modern control systems. While collaborative Digital Twins (DTs) have been proposed as a way to manage such systems without compromising the privacy of individual agents, deriving globally optimal control policies from the high-level information they share remains an open problem. This paper introduces the Digital Twin Assisted Multi-Agent Deep Deterministic Policy Gradient (DT-MADDPG) algorithm, a novel hybrid architecture that integrates a multi-agent reinforcement learning framework with a collaborative DT network. Our core contribution is a simulation-assisted learning algorithm in which the centralised critic is enhanced by a predictive global model built collaboratively from the privacy-preserving data shared by individual DTs. This approach removes the need to collect sensitive raw data at a centralised entity, a requirement of traditional multi-agent learning algorithms. Experimental results in a simulated V2G environment demonstrate that DT-MADDPG achieves coordination performance comparable to the standard MADDPG algorithm while offering significant advantages in data privacy and architectural decentralisation. This work presents a practical and robust framework for deploying intelligent, learning-based coordination in complex, real-world cyber-physical systems.
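To make the data flow described above concrete, the following is a minimal sketch of how a centralised critic might consume DT-shared summaries together with a global-model prediction, rather than raw agent states. The abstract does not specify the summary features, the global model, or the critic architecture, so every name here (`dt_summary`, `global_model_predict`, `critic_input`, `critic_q`), the feature choices, and the linear critic are hypothetical placeholders, not the paper's method.

```python
import numpy as np

def dt_summary(raw_state: np.ndarray) -> np.ndarray:
    """Hypothetical DT-side summary: only coarse features leave the agent."""
    soc, demand = raw_state[0], raw_state[1]
    # Assumed features: rounded state of charge and a binary demand flag.
    return np.array([np.round(soc, 1), float(demand > 0.5)])

def global_model_predict(summaries: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Toy stand-in for the predictive global model built from DT summaries."""
    # Assumed outputs: net fleet power and mean (coarse) state of charge.
    return np.array([actions.sum(), summaries[:, 0].mean()])

def critic_input(summaries: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Concatenate shared summaries, joint actions, and the global prediction."""
    prediction = global_model_predict(summaries, actions)
    return np.concatenate([summaries.ravel(), actions.ravel(), prediction])

def critic_q(x: np.ndarray, w: np.ndarray) -> float:
    """Linear critic used purely for illustration (a real critic would be a network)."""
    return float(x @ w)

rng = np.random.default_rng(0)
n_agents = 3
raw_states = rng.random((n_agents, 4))       # stays local to each agent
actions = rng.uniform(-1, 1, size=n_agents)  # one charge/discharge action per EV

summaries = np.stack([dt_summary(s) for s in raw_states])
x = critic_input(summaries, actions)
w = np.zeros_like(x)                         # untrained weights
q = critic_q(x, w)
```

In this sketch the critic never receives `raw_states`; it sees only the two-feature summary per agent, the joint action, and the global model's forecast, which is the privacy property the abstract attributes to the approach.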
