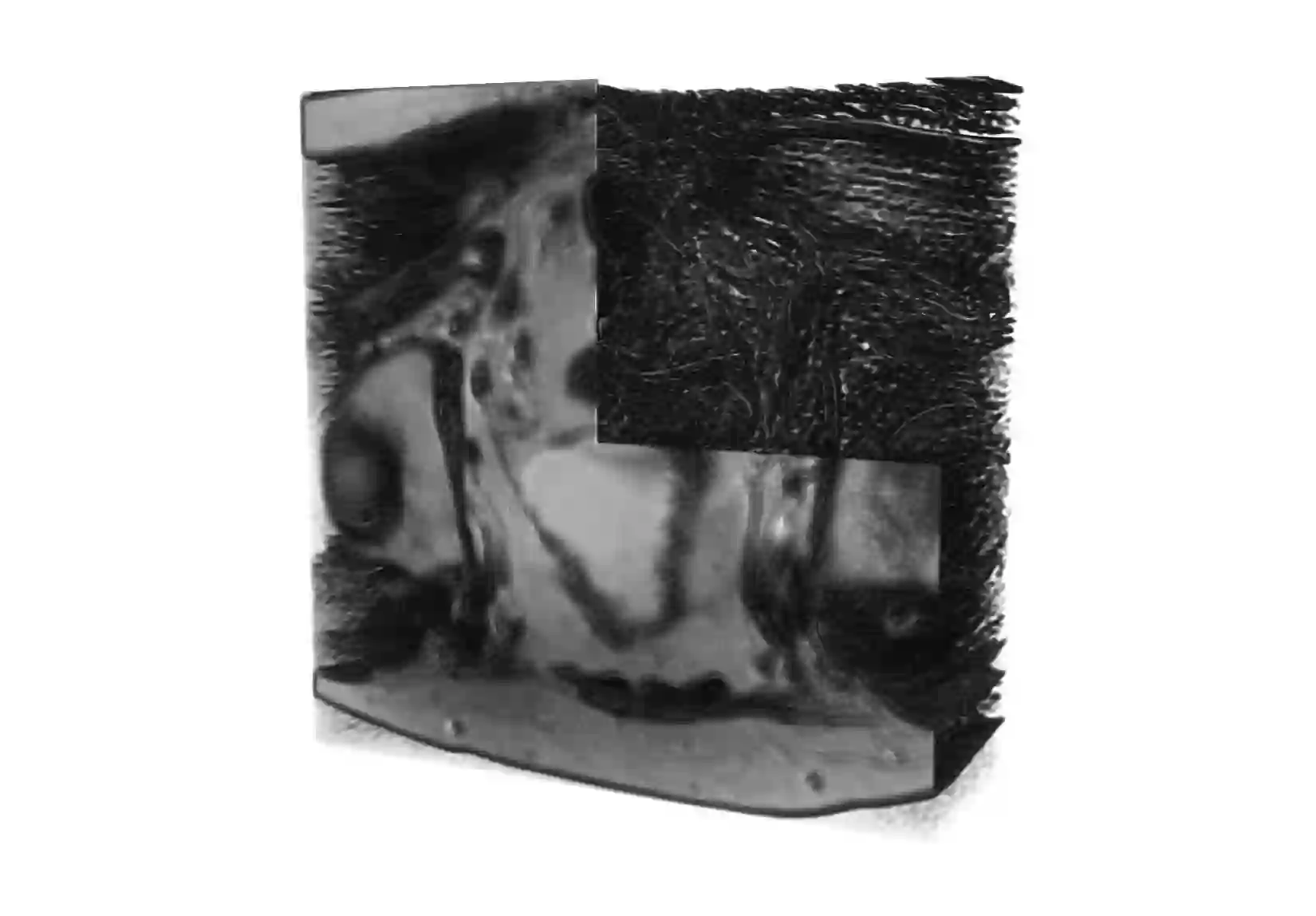

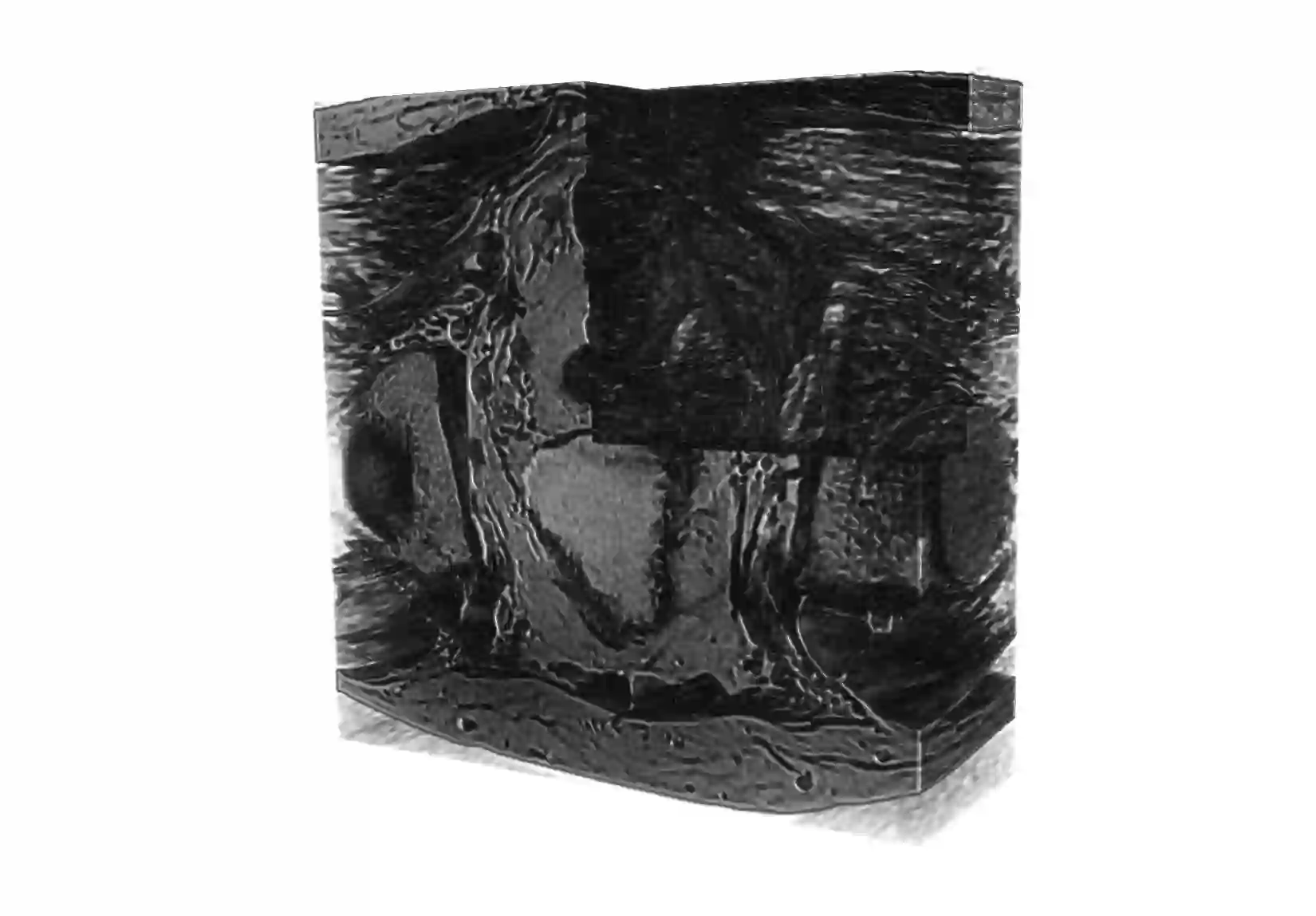

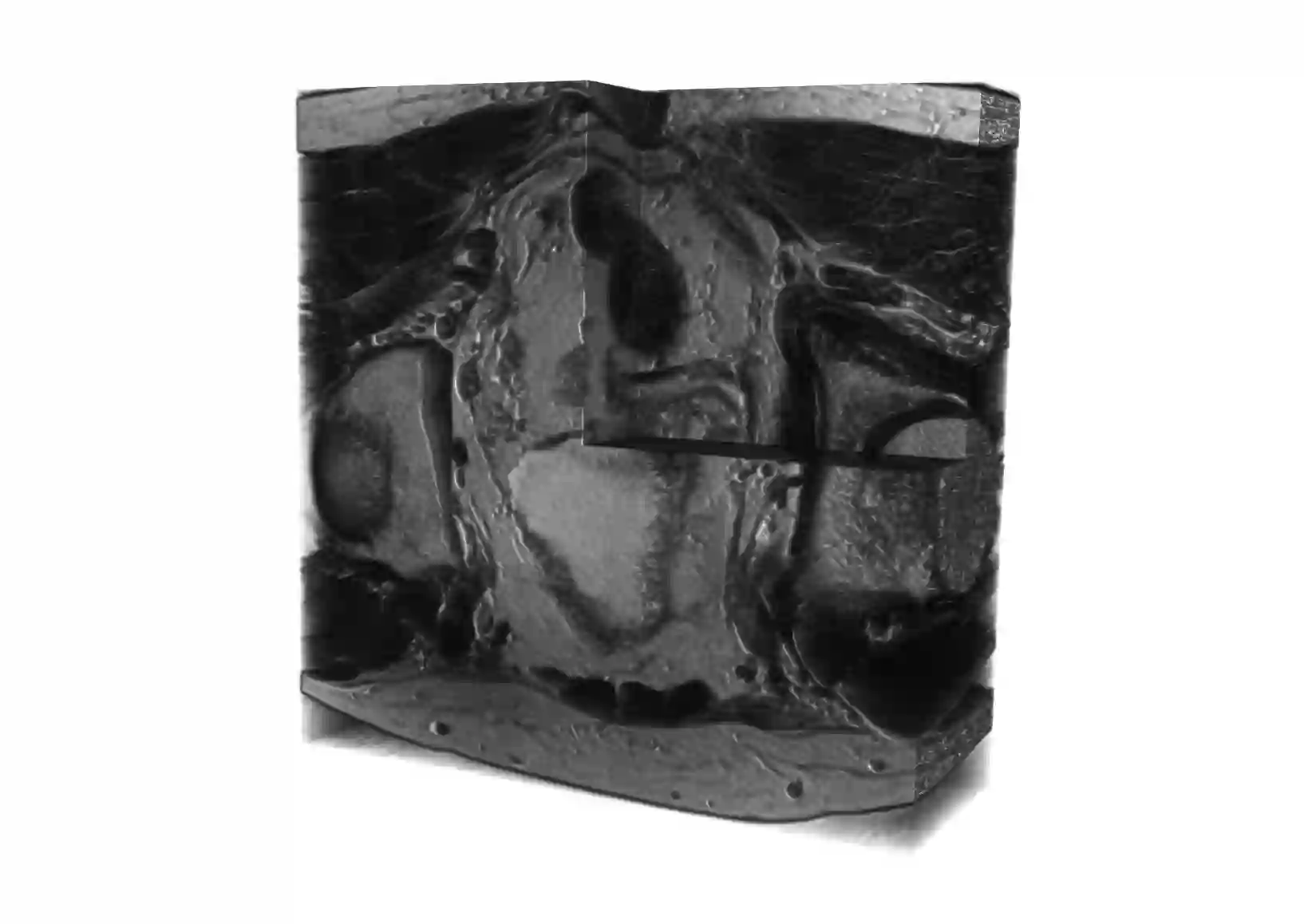

Despite the great success of convolutional neural networks (CNNs) in 3D medical image segmentation, current methods are still not robust enough to the different protocols used by different scanners, or to the variety of image properties and artefacts those scanners produce. To this end, we introduce OOCS-enhanced networks, a novel architecture inspired by the innate nature of visual processing in vertebrates. Taking different 3D U-Net variants as the base, we add two 3D residual components to the second encoder block: on and off center-surround (OOCS) pathways, which generalise the ganglion pathways of the retina to a 3D setting. Using 2D-OOCS in any standard CNN complements the feedforward framework with sharp edge-detection inductive biases. Likewise, 3D-OOCS helps 3D U-Nets scrutinise and delineate the anatomical structures present in 3D images with increased accuracy. We compared state-of-the-art 3D U-Nets with their 3D-OOCS extensions and showed the superior accuracy and robustness of the latter in automatic prostate segmentation from 3D Magnetic Resonance Images (MRIs). For a fair comparison, we trained and tested all the investigated 3D U-Nets with the same pipeline, including automatic hyperparameter optimisation and data augmentation.
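To make the on and off center-surround idea more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a difference-of-Gaussians kernel, which the abstract does not specify; the kernel size, both sigma values, the ReLU on each pathway, and the function names `dog_kernel_3d`, `conv3d_same` and `oocs_responses` are all hypothetical choices made for illustration.

```python
import numpy as np


def dog_kernel_3d(size=5, sigma_center=1.0, sigma_surround=2.0):
    """Hypothetical 3D on-centre kernel: narrow Gaussian minus wide Gaussian.

    Assumption: a difference-of-Gaussians form; the abstract gives no formula.
    """
    assert size % 2 == 1, "kernel size must be odd"
    r = size // 2
    ax = np.arange(-r, r + 1, dtype=np.float64)
    z, y, x = np.meshgrid(ax, ax, ax, indexing="ij")
    d2 = x**2 + y**2 + z**2
    center = np.exp(-d2 / (2.0 * sigma_center**2))
    surround = np.exp(-d2 / (2.0 * sigma_surround**2))
    # Normalise each Gaussian so the difference sums to (almost) zero:
    # uniform regions then give no response, edges and blobs do.
    center /= center.sum()
    surround /= surround.sum()
    return center - surround


def conv3d_same(volume, kernel):
    """Naive zero-padded 'same' 3D filtering (kernel is symmetric here)."""
    k = kernel.shape[0]
    r = k // 2
    depth, height, width = volume.shape
    padded = np.pad(volume, r, mode="constant")
    out = np.zeros(volume.shape, dtype=np.float64)
    for dz in range(k):
        for dy in range(k):
            for dx in range(k):
                out += kernel[dz, dy, dx] * padded[
                    dz:dz + depth, dy:dy + height, dx:dx + width
                ]
    return out


def oocs_responses(features, on_kernel):
    """Return rectified on- and off-pathway responses (assumed design)."""
    on = np.maximum(conv3d_same(features, on_kernel), 0.0)
    # The off kernel is taken to be the sign-flipped on kernel.
    off = np.maximum(conv3d_same(features, -on_kernel), 0.0)
    return on, off


if __name__ == "__main__":
    kernel = dog_kernel_3d()
    volume = np.zeros((9, 9, 9))
    volume[4, 4, 4] = 1.0  # a single bright voxel on a dark background
    on, off = oocs_responses(volume, kernel)
    print("on response at the bright voxel:", on[4, 4, 4])
    print("off response at the bright voxel:", off[4, 4, 4])
```

In this sketch the on pathway responds to a bright voxel surrounded by darker ones, and the off pathway to the reverse. How the two responses are merged back into the second encoder block as residual components is not described in the abstract and is left out here.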
