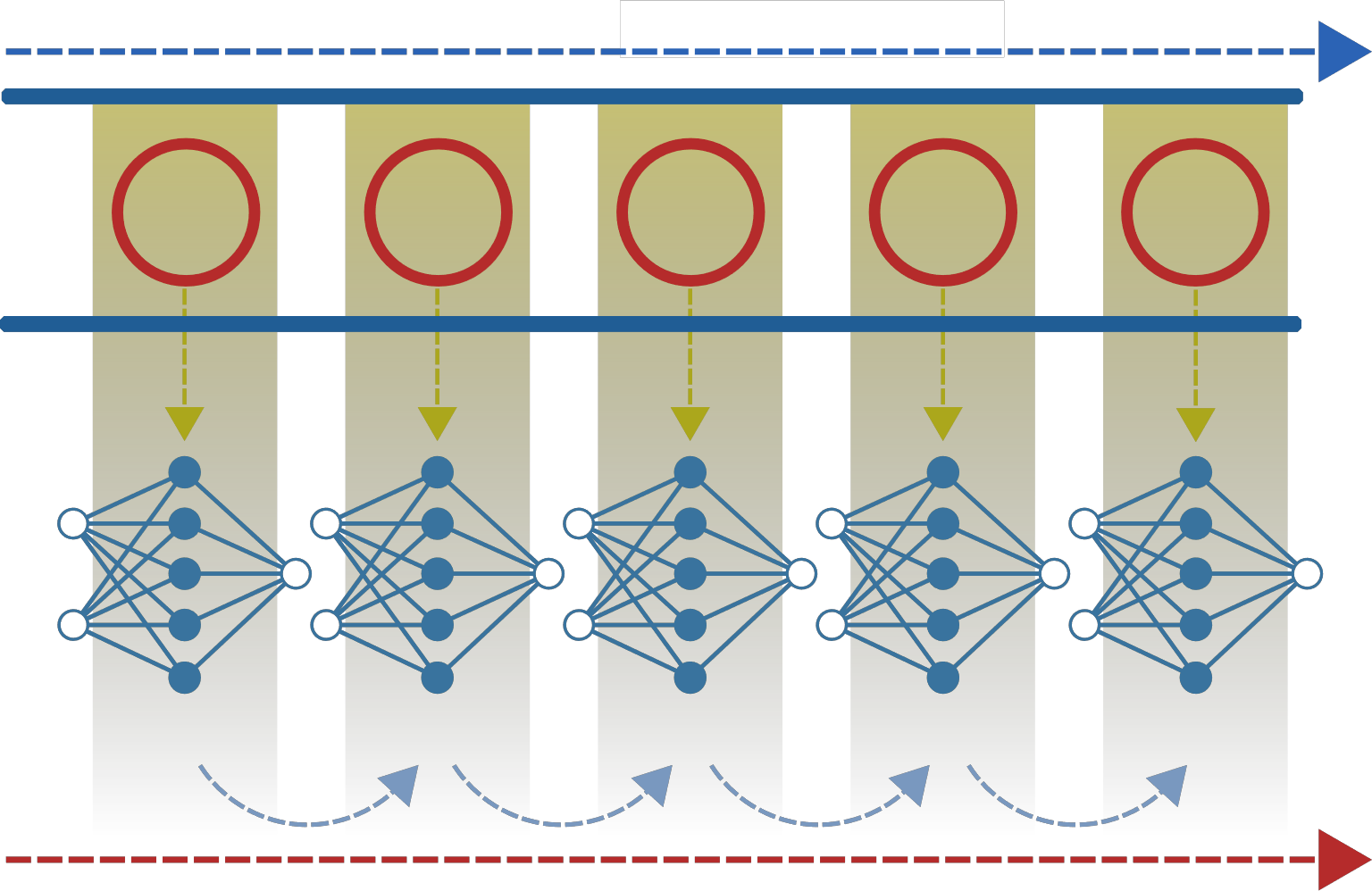

Online continual learning in the wild is a hard problem in machine learning: non-stationary data streams can cause catastrophic forgetting in neural networks. Online continual learning for autonomous driving on the SODA10M dataset adds two further difficulties, an extremely long-tailed class distribution and continuous distribution shift. To address these problems, we propose multiple deep metric representation learning, combining contrastive and supervised contrastive learning with soft-label distillation, to improve model generalization. We also use a modified class-balanced focal loss whose penalty is sensitive to both class imbalance and the difference between hard and easy samples. For rehearsal, we store a subset of samples selected under the guidance of an uncertainty metric, and we perform both online and periodic memory updates. Our proposed method achieves an average mean class accuracy (AMCA) of 64.01% on the validation set and 64.53% on the test set.
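The abstract does not spell out how the focal loss is modified, so the following is only a minimal sketch of the standard class-balanced focal loss (effective-number class weights combined with a focal term), not the authors' variant. The function names, the `beta` and `gamma` defaults, and the example class counts are all assumptions chosen for illustration.

```python
import math


def class_balanced_weights(class_counts, beta=0.999):
    """Per-class weights from the 'effective number' of samples (assumed form)."""
    # Effective number for a class with n samples: (1 - beta**n) / (1 - beta).
    # The weight is its inverse, so rare classes receive larger weights.
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in class_counts]
    # Normalize so the weights sum to the number of classes.
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cb_focal_loss(logits, target, weights, gamma=2.0):
    """Class-balanced focal loss for a single sample (softmax variant)."""
    p_t = softmax(logits)[target]
    # (1 - p_t)**gamma down-weights easy samples; weights[target] up-weights rare classes.
    return -weights[target] * (1.0 - p_t) ** gamma * math.log(p_t)


# Hypothetical long-tailed class counts, for illustration only.
counts = [5000, 300, 12]
weights = class_balanced_weights(counts)

# A confidently correct sample from the common class versus a misclassified rare-class sample.
easy_common = cb_focal_loss([4.0, 0.0, 0.0], 0, weights)
hard_rare = cb_focal_loss([4.0, 0.0, 0.0], 2, weights)
```

With these assumed counts, the rarest class gets the largest weight, and a misclassified rare-class sample contributes far more to the loss than a well-classified common-class sample. Setting `gamma=0` with uniform weights reduces the expression to ordinary cross-entropy.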

