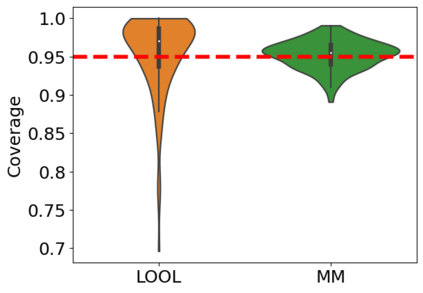

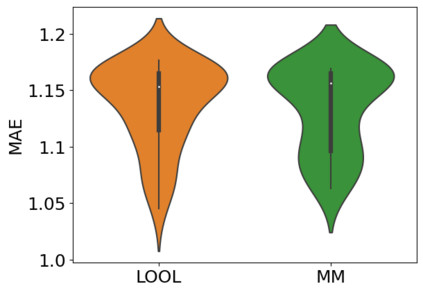

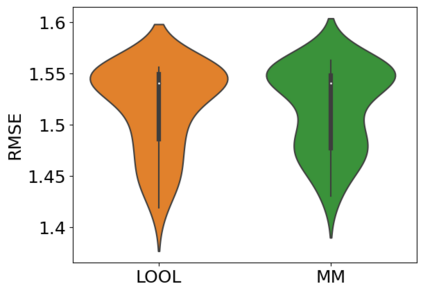

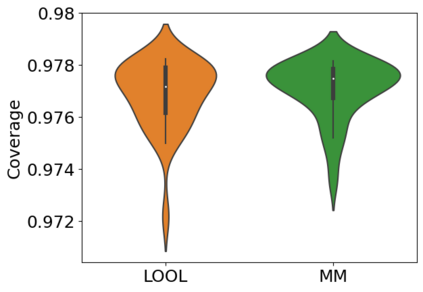

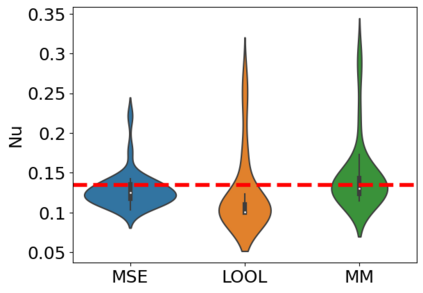

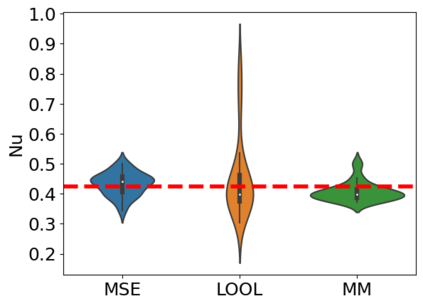

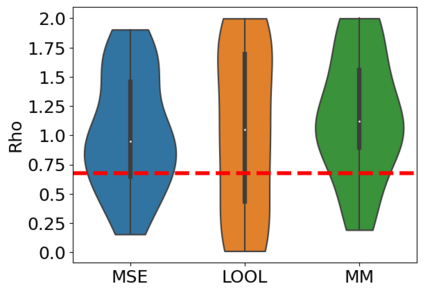

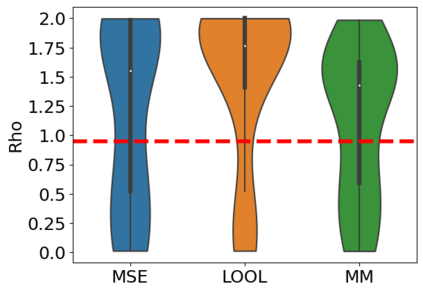

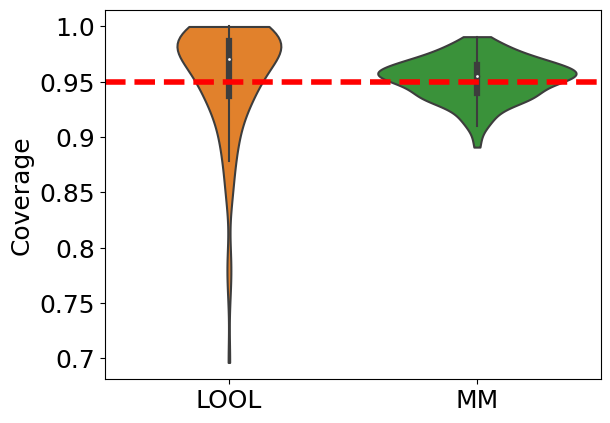

Gaussian processes (GPs) are Bayesian non-parametric models that are popular in a variety of applications because of their accuracy and native uncertainty quantification (UQ). Tuning GP hyperparameters is critical to ensuring valid prediction accuracy and uncertainty. Uniquely estimating multiple hyperparameters, e.g., in the Matern kernel, can also be a significant challenge. Moreover, training GPs on large-scale datasets is a highly active area of research: traditional maximum likelihood hyperparameter training requires memory quadratic in the number of training points to form the covariance matrix and has cubic training complexity. To address the scalable hyperparameter tuning problem, we present a novel algorithm that estimates the smoothness and length-scale parameters of the Matern kernel in order to improve the robustness of the resulting prediction uncertainties. We use novel loss functions, similar to those in conformal prediction algorithms, within the computational framework provided by the hyperparameter estimation algorithm MuyGPs. As numerical experiments demonstrate, this achieves improved UQ over leave-one-out likelihood maximization while maintaining a high degree of scalability.
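The scalability contrast can be made concrete. Exact maximum likelihood works with the full n x n covariance matrix (O(n^2) memory, O(n^3) time). A nearest-neighbor approach in the spirit of MuyGPs instead conditions each prediction on only k neighbors, so each leave-one-out prediction costs O(k^3). The Python/NumPy sketch below is illustrative only and is neither the MuyGPs library API nor the authors' loss. Several pieces are hypothetical stand-ins chosen for illustration: the function names, the grid search, the closed-form variance scale, and `coverage_loss`, which stands in for a conformal-style criterion. The Matern kernel is restricted to the half-integer smoothness values nu in {0.5, 1.5, 2.5}, which have closed forms.

```python
import numpy as np

def matern(dist, nu, length_scale):
    """Matern correlation in closed form for half-integer nu in {0.5, 1.5, 2.5}."""
    r = np.asarray(dist, dtype=float) / length_scale
    if nu == 0.5:
        return np.exp(-r)
    if nu == 1.5:
        s = np.sqrt(3.0) * r
        return (1.0 + s) * np.exp(-s)
    if nu == 2.5:
        s = np.sqrt(5.0) * r
        return (1.0 + s + s**2 / 3.0) * np.exp(-s)
    raise ValueError("this sketch only supports nu in {0.5, 1.5, 2.5}")

def loo_neighbor_predictions(X, y, nu, length_scale, k=10, nugget=1e-4):
    """Leave-one-out predictions, each conditioned on the point's k nearest neighbors.

    Each prediction solves k x k systems, so the cost is O(n k^3) instead of O(n^3),
    and no n x n covariance matrix is formed (distances are computed one row at a time).
    """
    n = X.shape[0]
    means, variances, quad = np.empty(n), np.empty(n), np.empty(n)
    for i in range(n):
        d = np.linalg.norm(X - X[i], axis=1)
        d[i] = np.inf  # exclude the point itself (leave-one-out)
        nbrs = np.argsort(d)[:k]
        Xn, yn = X[nbrs], y[nbrs]
        D_nn = np.linalg.norm(Xn[:, None, :] - Xn[None, :, :], axis=-1)
        K_nn = matern(D_nn, nu, length_scale) + nugget * np.eye(k)
        k_n = matern(d[nbrs], nu, length_scale)
        alpha = np.linalg.solve(K_nn, k_n)
        means[i] = alpha @ yn
        # Predictive variance of a noisy observation (unit prior variance plus nugget).
        variances[i] = 1.0 + nugget - alpha @ k_n
        quad[i] = yn @ np.linalg.solve(K_nn, yn)
    # Assumed closed-form variance scale: average of y_N^T K_NN^{-1} y_N / k.
    sigma_sq = quad.mean() / k
    return means, sigma_sq * np.maximum(variances, 1e-12)

def coverage_loss(y, means, variances, target=0.95, z=1.96, lam=10.0):
    """Hypothetical loss: LOO mean squared error plus a penalty on the gap between
    empirical interval coverage and the target level (a conformal-style criterion)."""
    resid = np.abs(y - means)
    coverage = np.mean(resid <= z * np.sqrt(variances))
    return np.mean(resid**2) + lam * (coverage - target) ** 2

def tune(X, y, nus=(0.5, 1.5, 2.5), length_scales=(0.1, 0.2, 0.5, 1.0), k=10):
    """Grid search over smoothness nu and length scale; returns (loss, nu, length_scale)."""
    best = None
    for nu in nus:
        for ell in length_scales:
            mu, var = loo_neighbor_predictions(X, y, nu, ell, k=k)
            loss = coverage_loss(y, mu, var)
            if best is None or loss < best[0]:
                best = (loss, nu, ell)
    return best

# Usage on synthetic data (illustrative only).
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(200, 2))
y = np.sin(6.0 * X[:, 0]) * np.cos(4.0 * X[:, 1]) + 0.05 * rng.standard_normal(200)
loss, nu, ell = tune(X, y)
print(f"selected nu={nu}, length_scale={ell}, loss={loss:.4f}")
```

Restricting nu to a few half-integer values keeps the sketch dependency-free. A general nu would need the modified Bessel function of the second kind (e.g., `scipy.special.kv`), and a gradient-based optimizer could replace the grid search.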
