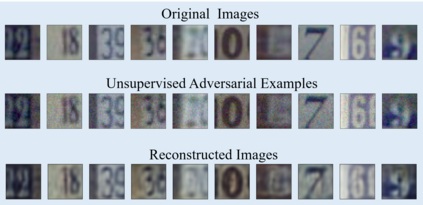

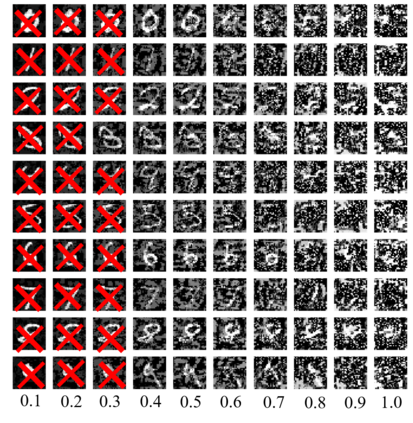

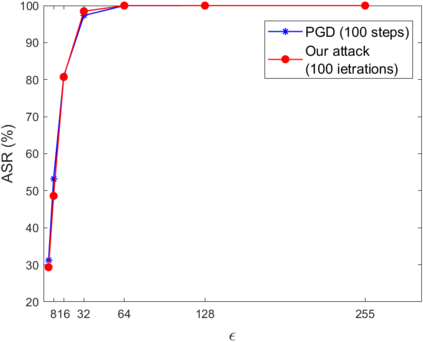

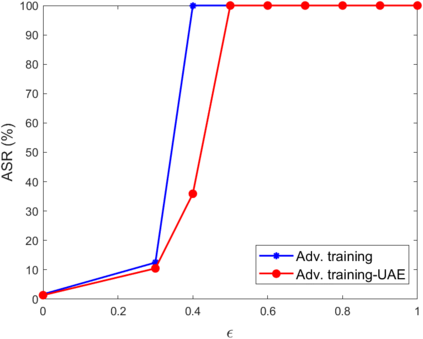

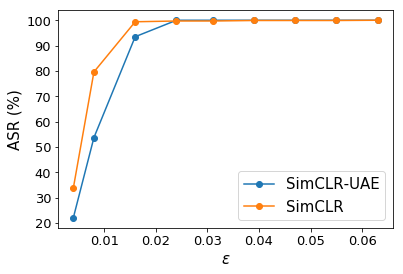

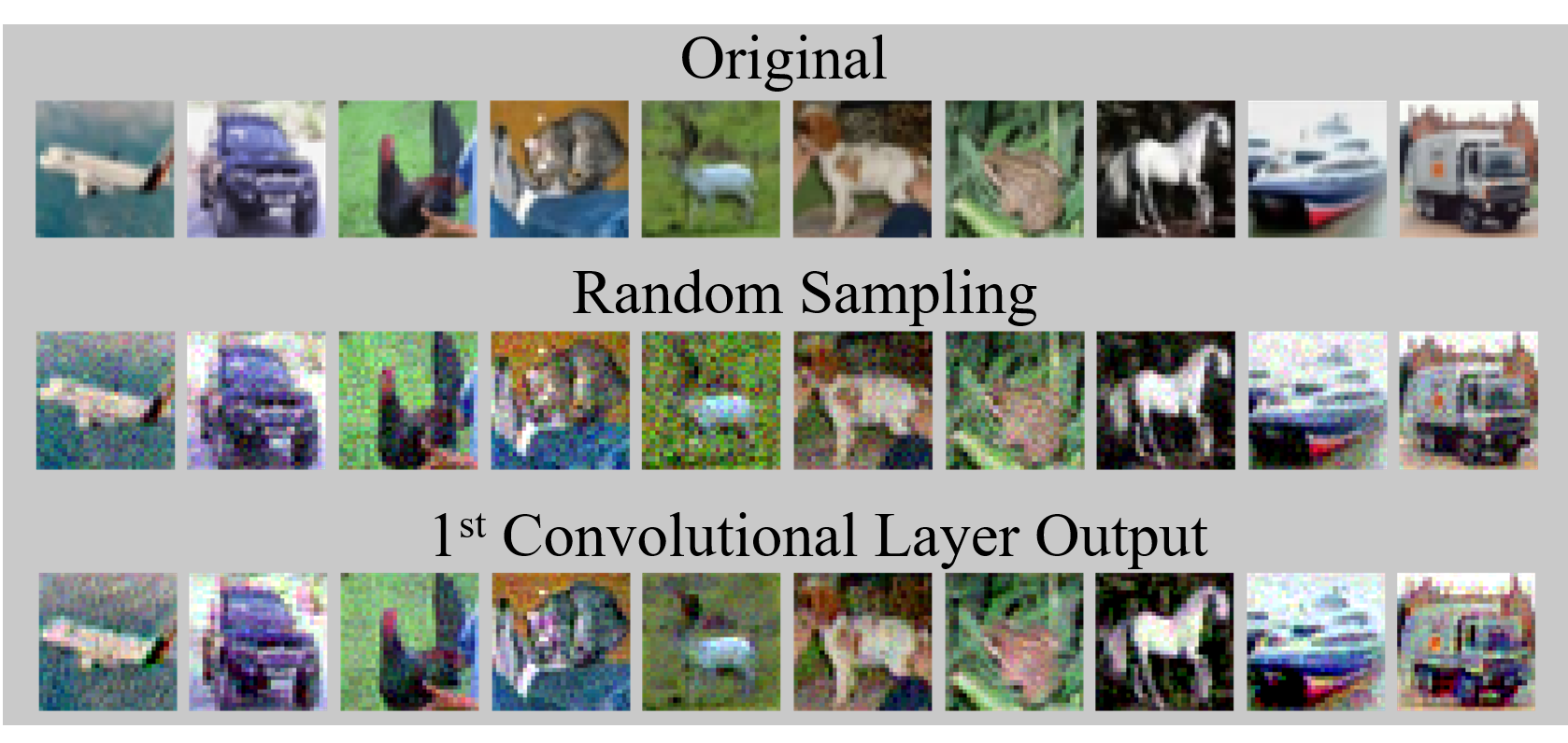

Adversarial examples causing evasive predictions are widely used to evaluate and improve the robustness of machine learning models. However, current studies on adversarial examples focus on supervised learning tasks, relying on the ground-truth data label, a targeted objective, or supervision from a trained classifier. In this paper, we propose a framework for generating adversarial examples for unsupervised models and demonstrate novel applications to data augmentation. Our framework exploits a mutual information neural estimator as an information-theoretic similarity measure to generate adversarial examples without supervision. We propose a new MinMax algorithm with provable convergence guarantees for efficient generation of unsupervised adversarial examples. Our framework can also be extended to supervised adversarial examples. When unsupervised adversarial examples are used as a simple plug-in data augmentation tool for model retraining, significant improvements are consistently observed across different unsupervised tasks and datasets, including data reconstruction, representation learning, and contrastive learning. Our results demonstrate novel methods for, and the advantages of, studying and improving the robustness of unsupervised learning via adversarial examples. Our code is available at https://github.com/IBM/UAE.
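The abstract names a mutual information neural estimator as the similarity measure but does not state a formula. As a rough illustration only, estimators of this family are commonly trained by maximizing the Donsker-Varadhan lower bound on mutual information, I(X; Z) ≥ E_joint[T] − log E_marginal[exp(T)], where T is a learned critic. The sketch below evaluates that bound from critic scores that are assumed to be precomputed. The function name `dv_lower_bound` and its parameters are hypothetical and are not taken from the UAE repository, and the sketch covers neither the critic network nor the MinMax attack itself.

```python
import math
from typing import Sequence


def dv_lower_bound(joint_scores: Sequence[float],
                   marginal_scores: Sequence[float]) -> float:
    """Donsker-Varadhan lower bound on mutual information.

    joint_scores:    critic outputs T(x, z) on paired samples (joint distribution).
    marginal_scores: critic outputs T(x, z') on shuffled pairs (product of marginals).
    Both inputs are assumed to come from some critic; none is defined here.
    """
    if len(joint_scores) == 0 or len(marginal_scores) == 0:
        raise ValueError("both score lists must be non-empty")

    # First term: sample mean of the critic on joint samples.
    mean_joint = sum(joint_scores) / len(joint_scores)

    # Second term: log of the sample mean of exp(T) on marginal samples,
    # computed with the max-shift trick for numerical stability.
    shift = max(marginal_scores)
    mean_exp = sum(math.exp(s - shift) for s in marginal_scores) / len(marginal_scores)
    log_mean_exp = shift + math.log(mean_exp)

    return mean_joint - log_mean_exp


# A constant critic carries no information, so the bound is zero.
bound_constant = dv_lower_bound([0.0, 0.0, 0.0], [0.0, 0.0, 0.0])

# A critic that scores paired samples higher than shuffled ones gives a positive bound.
bound_separating = dv_lower_bound([1.0, 1.0], [0.0, 0.0])
```

In an attack of the kind the abstract describes, a quantity like this would serve as the similarity score between an input and its perturbed version; how the paper combines it with the perturbation constraint is not specified here.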
