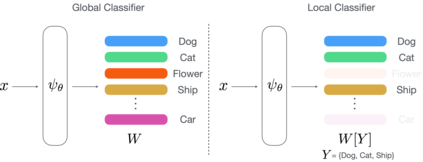

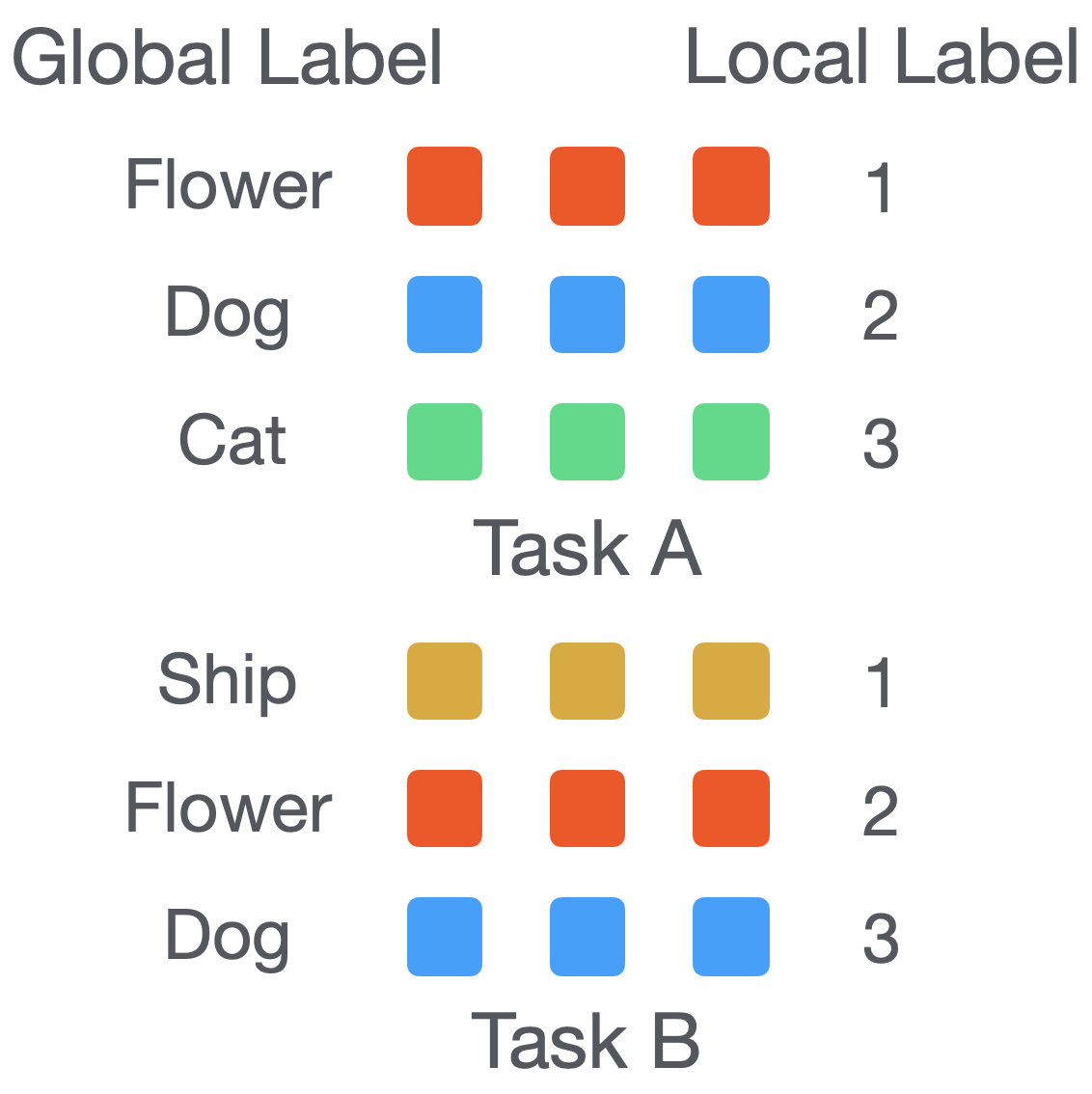

Few-shot learning (FSL) is a central problem in meta-learning, where learners must learn efficiently from few labeled examples. Within FSL, feature pre-training has become an increasingly popular strategy for significantly improving generalization performance. However, the contribution of pre-training is often overlooked and understudied, with limited theoretical understanding of its impact on meta-learning performance. Further, pre-training requires a consistent set of global labels shared across training tasks, which may be unavailable in practice. In this work, we address these issues. First, we show the connection between pre-training and meta-learning: we discuss why pre-training yields a more robust meta-representation and connect the theoretical analysis to existing work and empirical results. Second, we introduce Meta Label Learning (MeLa), a novel meta-learning algorithm that learns task relations by inferring global labels across tasks. This allows us to exploit pre-training for FSL even when global labels are unavailable or ill-defined. Lastly, we introduce an augmented pre-training procedure that further improves the learned meta-representation. Empirically, MeLa outperforms existing methods across a diverse range of benchmarks, in particular under a more challenging setting where the number of training tasks is limited and labels are task-specific. We also provide an extensive ablation study to highlight its key properties.
