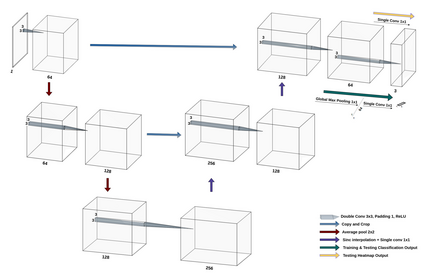

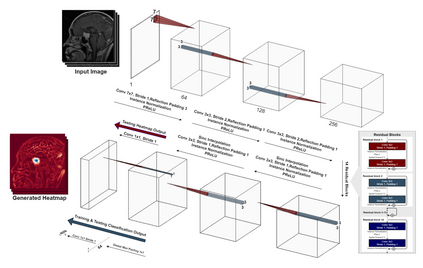

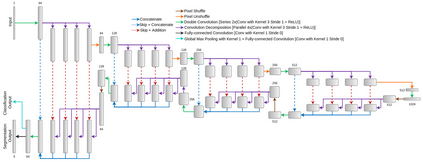

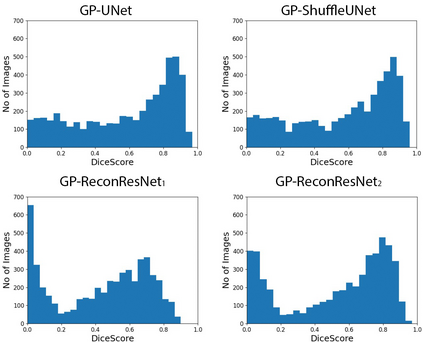

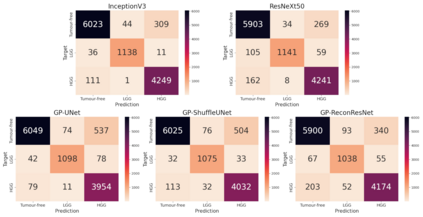

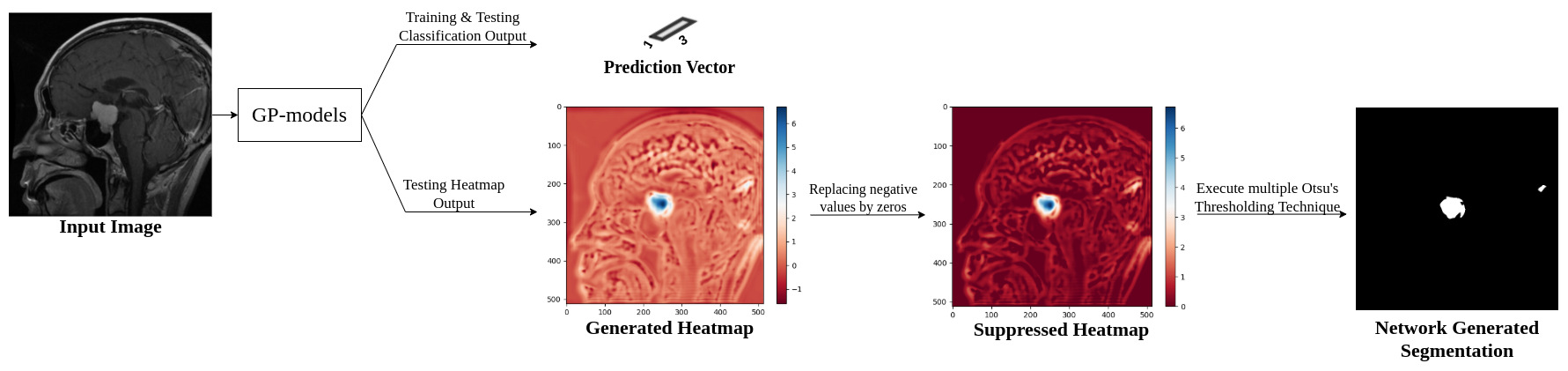

Deep learning models have shown their potential in several applications. However, most of these models are opaque and difficult to trust because of their complex reasoning, commonly known as the black-box problem. Some fields, such as medicine, require a high degree of transparency before such technologies can be accepted and adopted. Consequently, building trust in deep learning models requires either creating explainable/interpretable models or applying post-hoc methods to classifiers. Moreover, deep learning methods can be used for segmentation tasks, which typically require manually annotated segmentation labels for training; these labels are hard to obtain and time-consuming to produce. This paper introduces three inherently explainable classifiers that tackle both problems together. The localisation heatmaps provided by the networks, which represent the models' focus areas and are used in classification decision-making, can be interpreted directly, without any post-hoc method to derive information for model explanation. The models are trained in a supervised fashion using the input image and only the classification labels as ground truth, without any information about the location of the region of interest (i.e. the segmentation labels), which makes the segmentation training of the models weakly supervised through classification labels. The final segmentation is obtained by thresholding these heatmaps. The models were employed for multi-class brain tumour classification on two different datasets, resulting in a best F1-score of 0.93 for the supervised classification task and a median Dice score of 0.67 ± 0.08 for the weakly supervised segmentation task. Furthermore, the accuracy obtained on a subset of tumour-only images outperformed state-of-the-art binary classifiers for glioma tumour grading, with the best model achieving 98.7% accuracy.
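The last step of the pipeline described above (thresholding a heatmap into a segmentation and scoring it with Dice) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `heatmap_to_mask` and `dice_score`, the min-max normalisation, and the threshold of 0.5 are all assumptions made for illustration, since the abstract does not state how the threshold is chosen.

```python
import numpy as np


def heatmap_to_mask(heatmap, threshold=0.5):
    """Binarise a localisation heatmap (hypothetical helper).

    The heatmap is min-max normalised to [0, 1] first; both the
    normalisation and the default threshold are assumptions.
    """
    h = np.asarray(heatmap, dtype=np.float64)
    span = h.max() - h.min()
    if span == 0:
        # A constant heatmap carries no localisation information.
        return np.zeros(h.shape, dtype=bool)
    h = (h - h.min()) / span
    return h >= threshold


def dice_score(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Both masks empty: treat as perfect agreement (a common convention).
        return 1.0
    return float(2.0 * np.logical_and(pred, target).sum() / denom)


# Toy example: a 2x2 heatmap and a manually annotated reference mask.
heatmap = np.array([[0.0, 0.2],
                    [0.8, 1.0]])
reference = np.array([[False, False],
                      [False, True]])

mask = heatmap_to_mask(heatmap)
score = dice_score(mask, reference)
print(mask)
print(score)
```

Note that the reference mask is used only for evaluation here, which mirrors the weakly supervised setting: the segmentation labels never enter training.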
