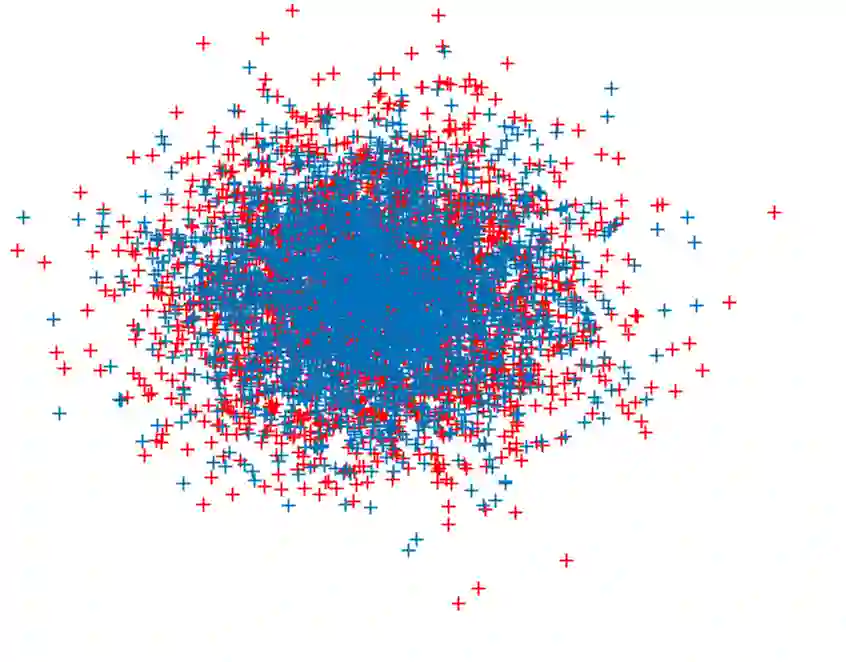

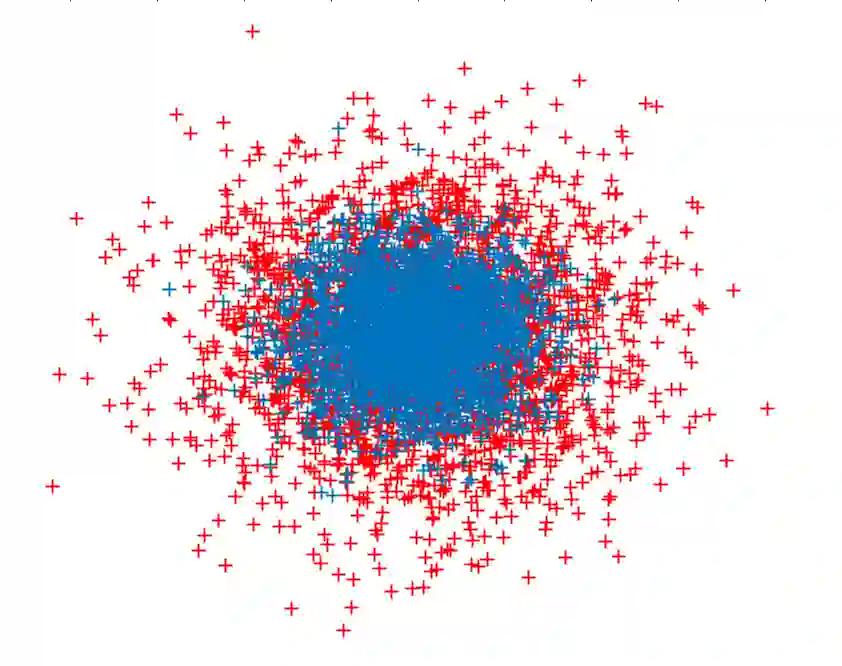

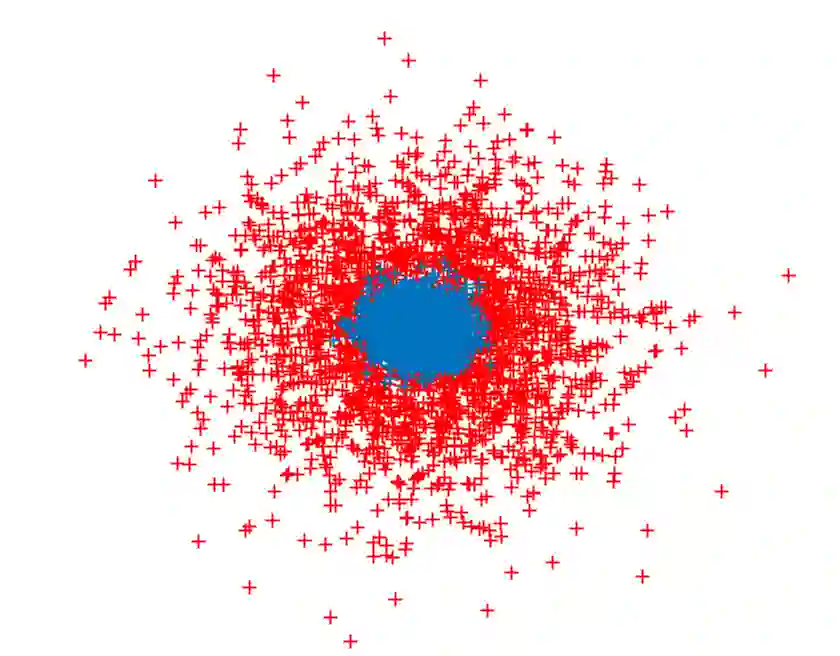

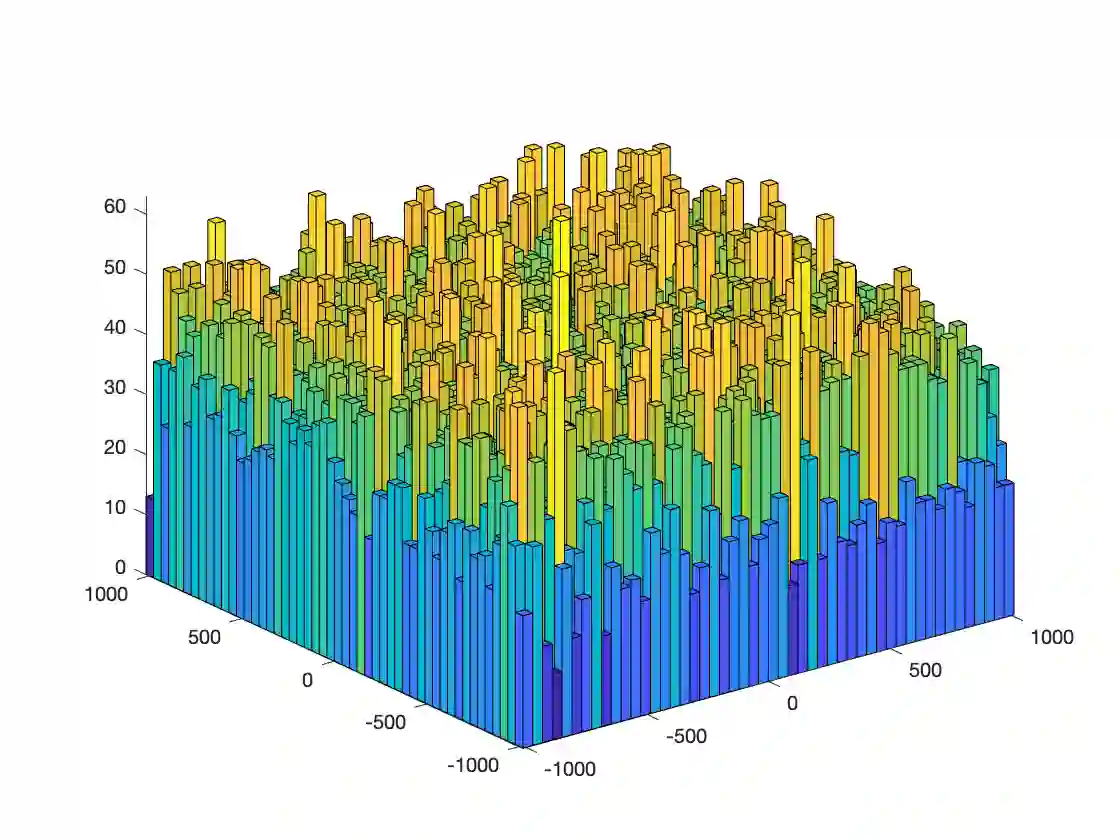

We propose a novel supervised learning method to optimize the kernel in maximum mean discrepancy generative adversarial networks (MMD GANs). Specifically, we formulate a distributionally robust optimization problem whose solution is a distribution for the random feature model of Rahimi and Recht that approximates a good kernel function. Because this distributional optimization is infinite-dimensional, we consider a Monte Carlo sample average approximation (SAA) to obtain a more tractable finite-dimensional optimization problem. We then use a particle stochastic gradient descent (SGD) method to solve the finite-dimensional problem. Based on a mean-field analysis, we prove that the empirical distribution of the interacting particle system at each SGD iteration follows the path of the gradient descent flow on the Wasserstein manifold. We also establish the non-asymptotic consistency of the finite-sample estimator. Our empirical evaluation on a synthetic dataset as well as the MNIST and CIFAR-10 benchmark datasets indicates that the proposed MMD GAN model with kernel learning attains higher inception scores and better Fréchet inception distances, and generates better images, than the generative moment matching network (GMMN) and MMD GAN with untrained kernels.
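To make the random feature model and its sample average approximation concrete, the following is a minimal sketch and not the paper's implementation. It assumes the standard Rahimi and Recht cosine feature $\varphi(x;\xi)=\sqrt{2}\cos(\langle w,x\rangle+b)$ with particles $\xi=(w,b)$ drawn from a fixed Gaussian/uniform distribution, which recovers a Gaussian kernel; the method described above would instead learn the particle distribution. The helper names (`sample_particles`, `saa_kernel`, `mmd2`) and all parameter values are hypothetical and chosen only for illustration.

```python
import math
import random


def sample_particles(num_particles, dim, bandwidth, rng):
    """Draw particles (w, b) with w ~ N(0, I / bandwidth^2), b ~ U[0, 2*pi].

    Assumption: this fixed sampling law yields a Gaussian kernel; it is a
    stand-in for the learned distribution discussed in the abstract.
    """
    return [
        ([rng.gauss(0.0, 1.0 / bandwidth) for _ in range(dim)],
         rng.uniform(0.0, 2.0 * math.pi))
        for _ in range(num_particles)
    ]


def feature(x, particle):
    """Random Fourier feature sqrt(2) * cos(<w, x> + b)."""
    w, b = particle
    return math.sqrt(2.0) * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)


def saa_kernel(x, y, particles):
    """Sample average approximation of k(x, y) over the particles."""
    return sum(feature(x, p) * feature(y, p) for p in particles) / len(particles)


def mmd2(xs, ys, particles):
    """Biased squared MMD between samples xs and ys under the SAA kernel."""
    total = 0.0
    for p in particles:
        mean_x = sum(feature(x, p) for x in xs) / len(xs)
        mean_y = sum(feature(y, p) for y in ys) / len(ys)
        total += (mean_x - mean_y) ** 2
    return total / len(particles)


def gaussian_kernel(x, y, bandwidth):
    """Exact Gaussian kernel, used only as a reference for comparison."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


rng = random.Random(0)
particles = sample_particles(1000, 2, 1.0, rng)

# Kernel approximation at one pair of points.
x, y = [0.3, -0.2], [0.1, 0.4]
approx = saa_kernel(x, y, particles)
exact = gaussian_kernel(x, y, 1.0)

# MMD between two samples from the same law versus a shifted law.
base = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(100)]
same = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(100)]
shifted = [[rng.gauss(3.0, 1.0), rng.gauss(3.0, 1.0)] for _ in range(100)]
mmd_same = mmd2(base, same, particles)
mmd_shifted = mmd2(base, shifted, particles)

print("SAA kernel:", approx, "exact Gaussian kernel:", exact)
print("MMD^2 same law:", mmd_same, "MMD^2 shifted law:", mmd_shifted)
```

Writing the squared MMD as an average over particles of squared differences in mean features keeps the cost linear in the sample size, and it makes each particle's contribution explicit, which is the quantity a particle SGD scheme would adjust.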
