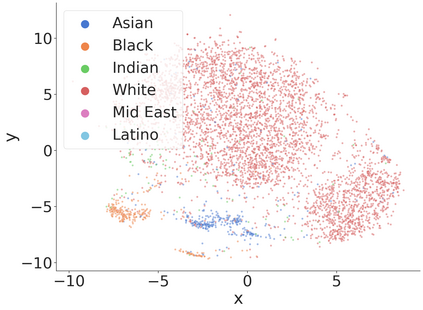

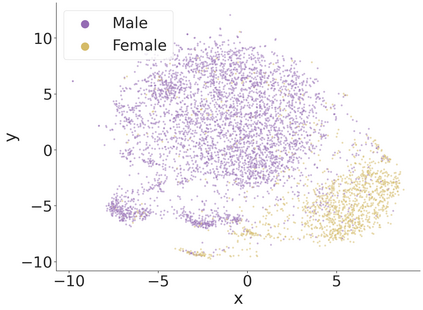

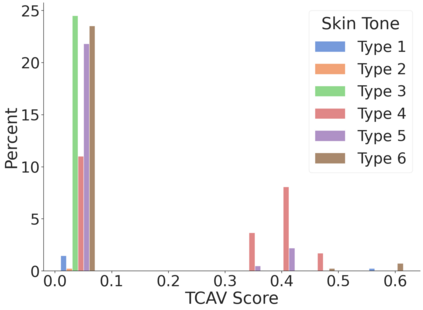

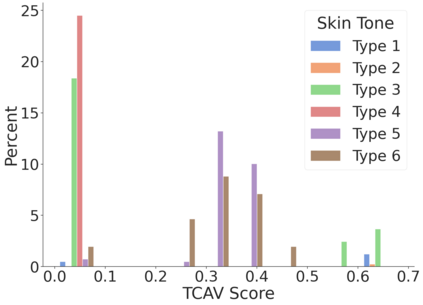

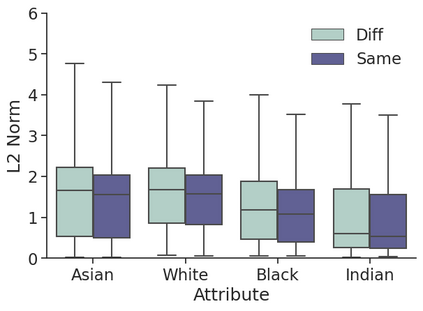

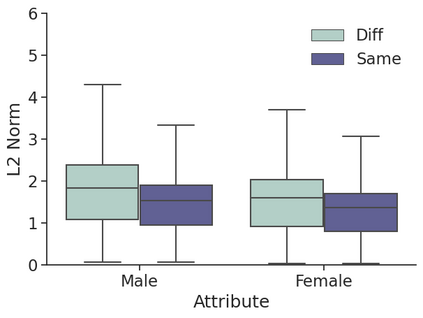

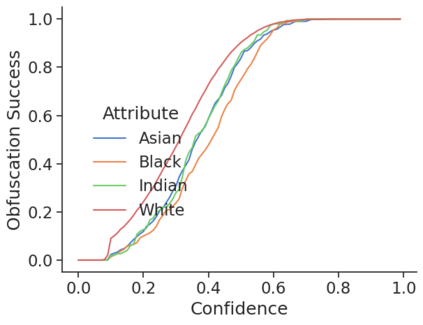

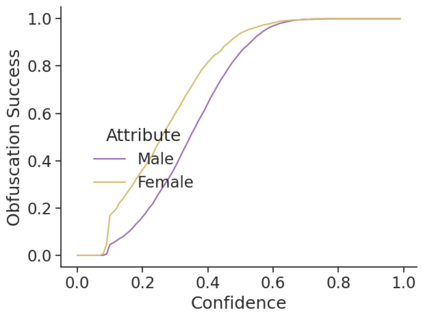

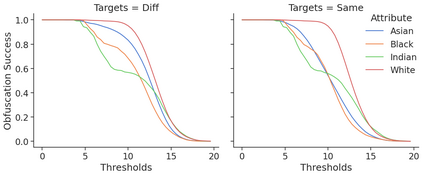

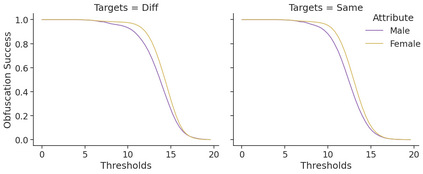

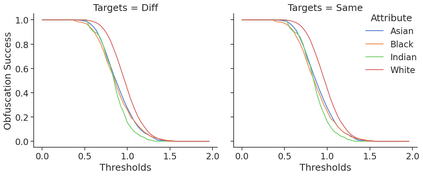

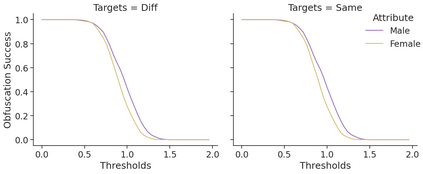

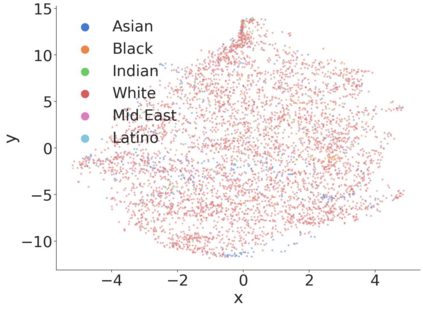

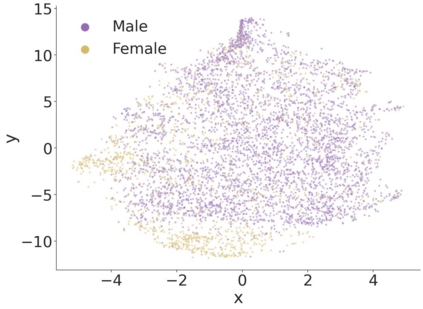

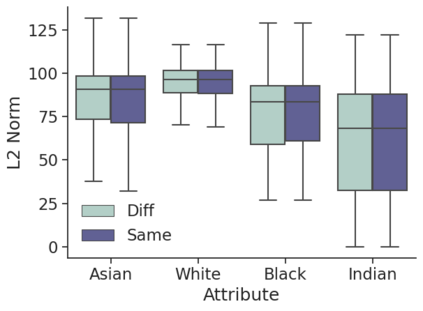

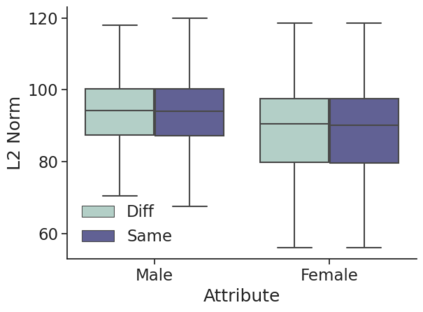

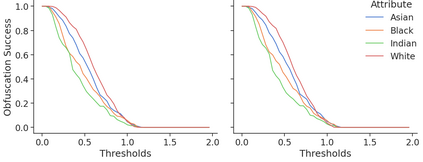

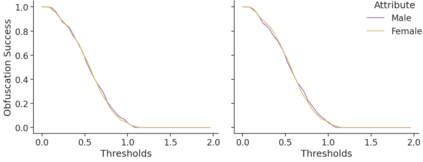

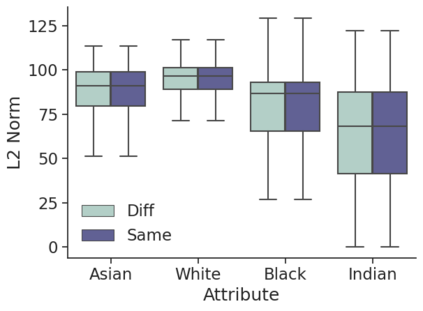

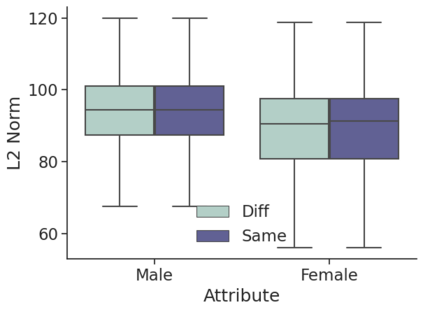

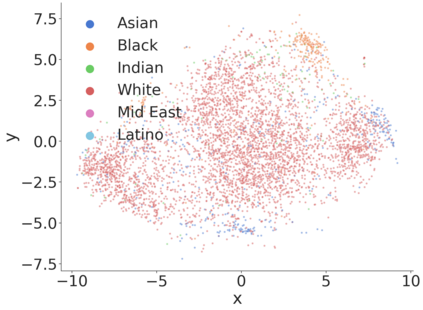

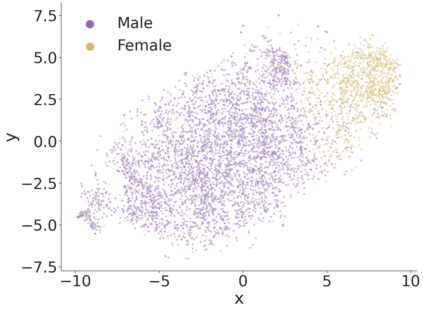

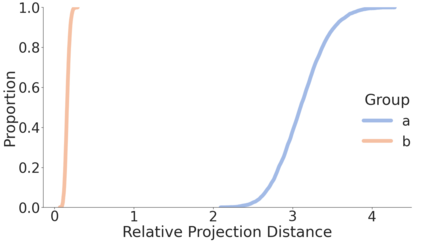

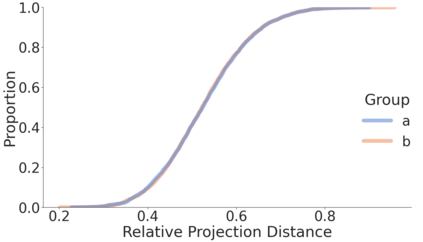

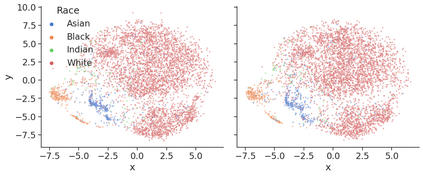

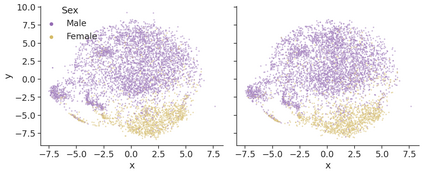

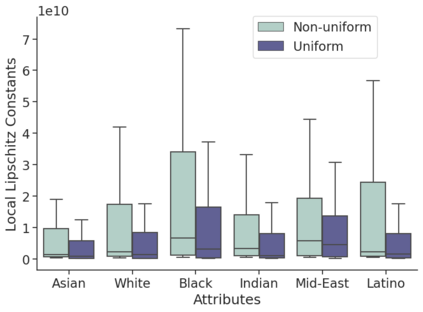

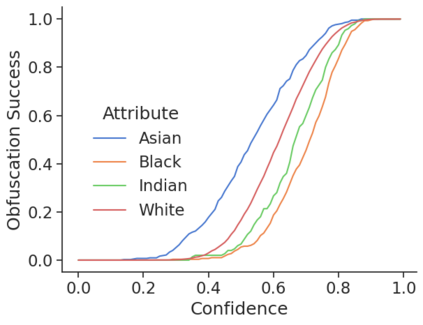

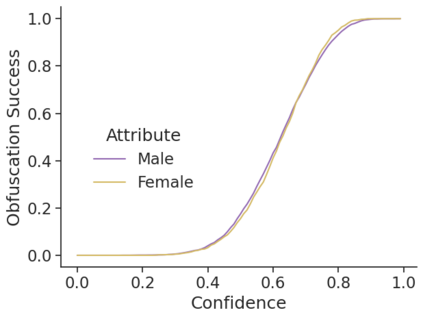

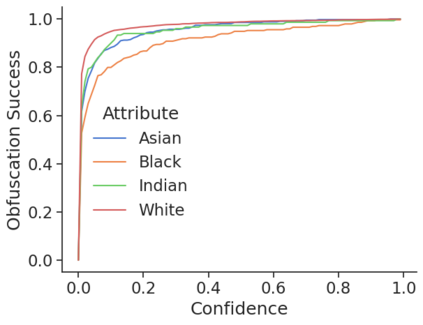

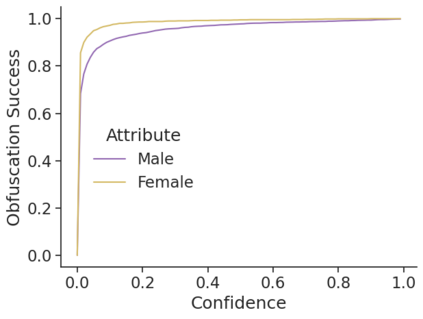

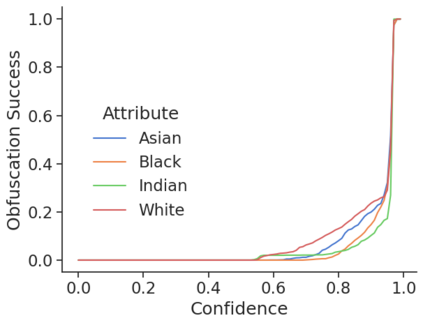

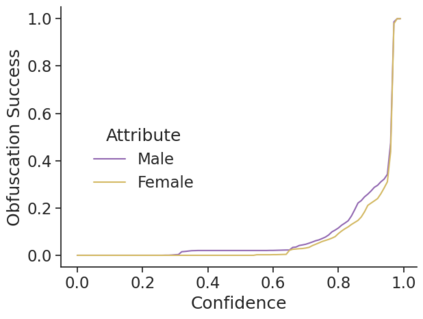

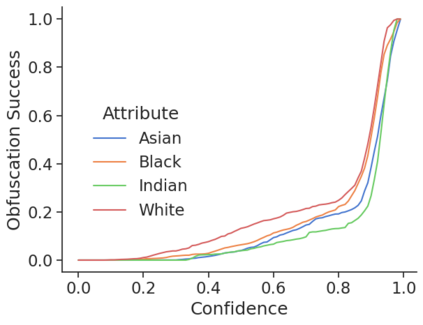

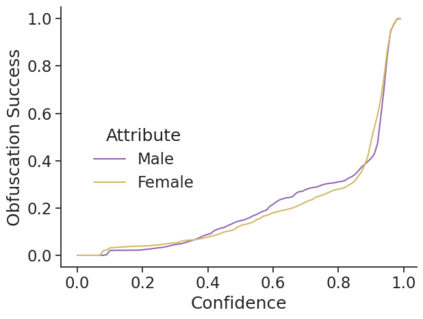

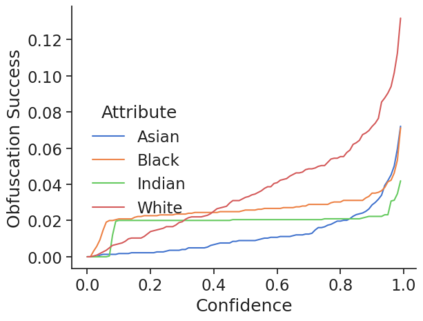

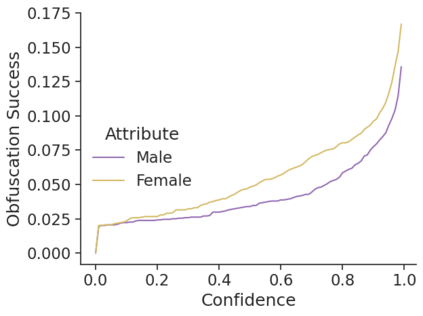

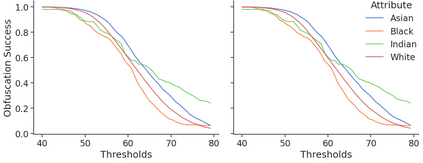

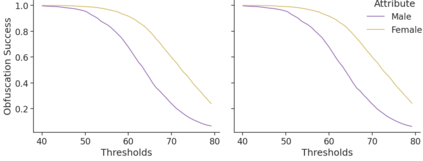

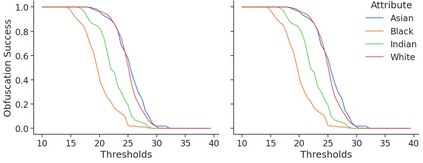

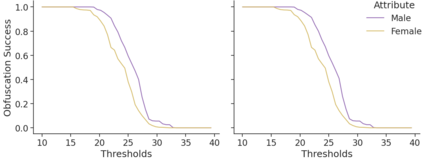

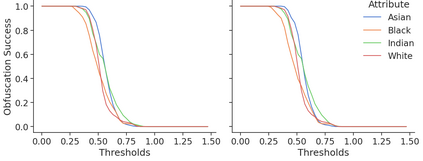

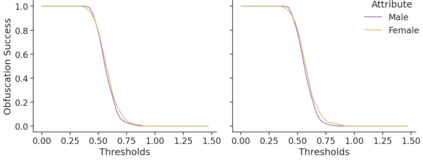

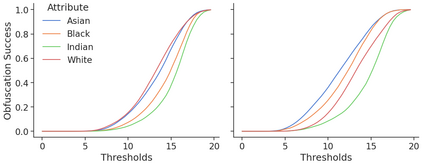

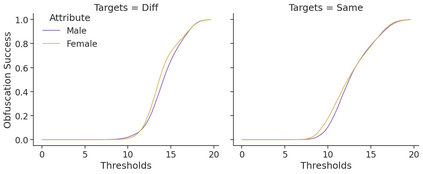

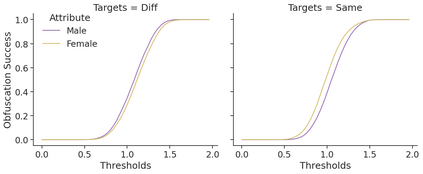

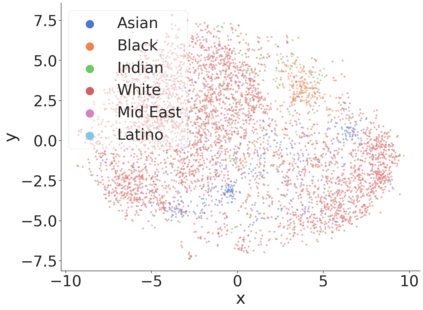

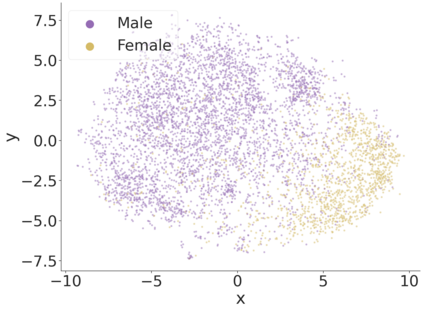

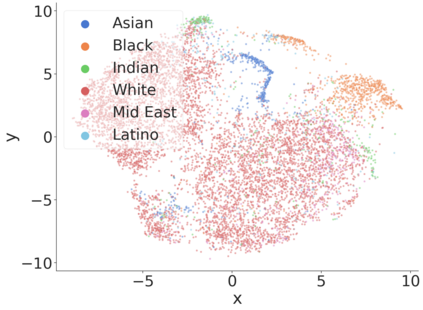

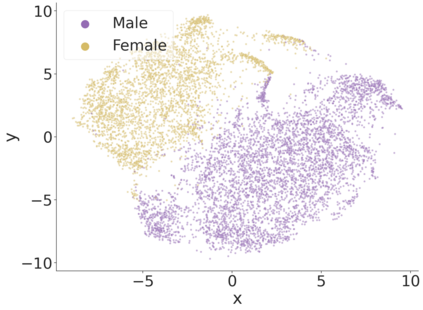

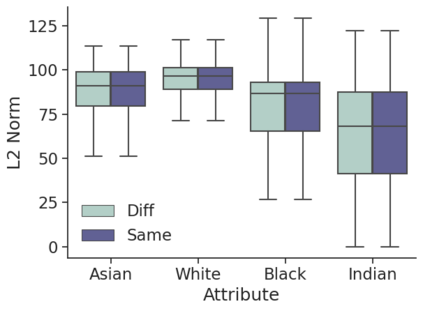

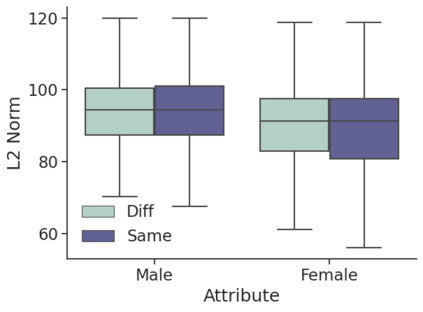

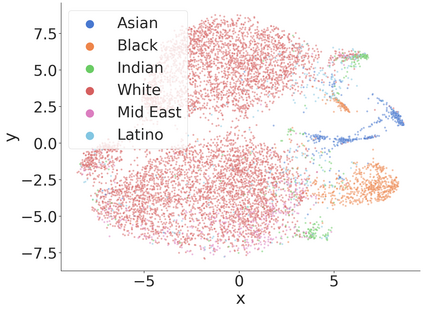

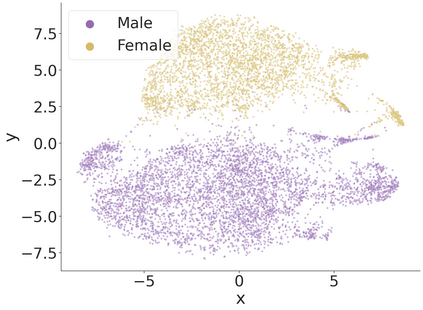

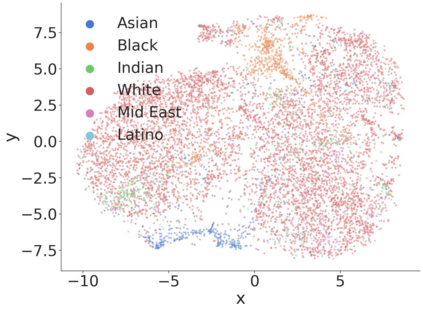

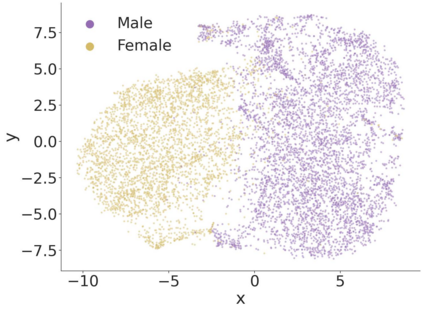

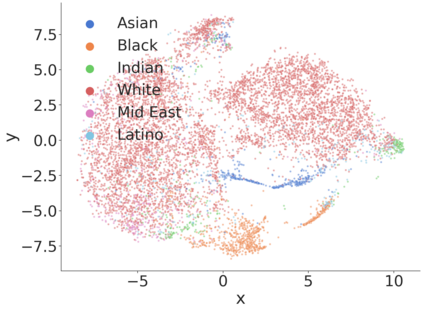

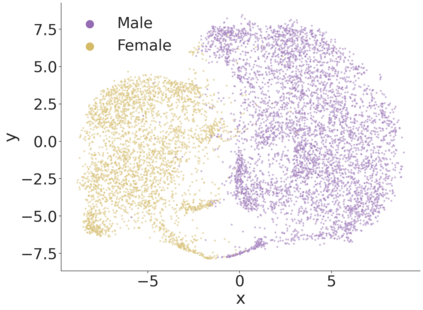

The proliferation of automated face recognition in the commercial and government sectors has caused significant privacy concerns for individuals. One approach to addressing these privacy concerns is to employ evasion attacks against the metric embedding networks that power face recognition systems: face obfuscation systems generate imperceptibly perturbed images that cause face recognition systems to misidentify the user. Perturbed faces are generated on metric embedding networks, which are known to be unfair in the context of face recognition. A question of demographic fairness naturally follows: are there demographic disparities in face obfuscation system performance? We answer this question with an analytical and empirical exploration of recent face obfuscation systems. We find that metric embedding networks are demographically aware: face embeddings are clustered by demographic group. We show how this clustering behavior leads to reduced face obfuscation utility for faces in minority groups. An intuitive analytical model yields insight into these phenomena.
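The toy Python sketch below makes the evasion mechanism described above concrete. It does not reproduce any of the face obfuscation systems studied here, and it is not the authors' analytical model. It rests on several assumptions: the embedding network is a hypothetical linear map `embed` (f(x) = Wx), identification is nearest-neighbour matching against a tiny gallery, and the attack `obfuscate` is a targeted projected gradient descent (PGD) bounded in the L-infinity norm that pulls the perturbed face's embedding toward a decoy identity. Real systems use deep networks and perceptual constraints on images, and some use untargeted objectives. All names and parameter values are illustrative.

```python
"""Toy sketch of a face-obfuscation-style evasion attack on a metric embedding.

Assumptions (hypothetical, not the systems studied in the paper): the
"network" is a fixed linear map W, faces are tiny feature vectors,
identification is nearest-neighbour matching against a gallery, and the
attack is a targeted L-infinity PGD toward a decoy identity's embedding.
"""
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def embed(W: Matrix, x: Vector) -> Vector:
    """Linear stand-in for a metric embedding network: f(x) = W x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def identify(W: Matrix, x: Vector, gallery: Dict[str, Vector]) -> str:
    """Return the gallery identity whose embedding is closest to f(x)."""
    e = embed(W, x)
    return min(gallery, key=lambda name: sq_dist(e, gallery[name]))


def sign(v: float) -> int:
    """Sign function returning -1, 0, or +1."""
    return (v > 0) - (v < 0)


def obfuscate(W: Matrix, x: Vector, target: Vector,
              eps: float, alpha: float, steps: int) -> Vector:
    """Targeted PGD: minimise ||f(x + d) - target||^2 subject to |d_j| <= eps."""
    d = [0.0] * len(x)
    for _ in range(steps):
        x_adv = [xi + di for xi, di in zip(x, d)]
        residual = [ei - ti for ei, ti in zip(embed(W, x_adv), target)]
        # Gradient of the squared distance w.r.t. the input: 2 * W^T * residual.
        grad = [2 * sum(W[i][j] * residual[i] for i in range(len(W)))
                for j in range(len(x))]
        # Signed descent step, then project back into the L-infinity ball.
        d = [max(-eps, min(eps, dj - alpha * sign(gj)))
             for dj, gj in zip(d, grad)]
    return [xi + di for xi, di in zip(x, d)]


# Usage with made-up toy values.
W = [[2.0, 0.0], [0.0, 1.0]]
face = [0.0, 0.0]
gallery = {"user": embed(W, face), "decoy": [1.0, 0.3]}

adv_face = obfuscate(W, face, gallery["decoy"], eps=0.4, alpha=0.1, steps=20)
print(identify(W, face, gallery))
print(identify(W, adv_face, gallery))
```

With these toy values, the clean face is matched to `user`. The perturbed face differs from the original by at most `eps` in each coordinate, yet it is matched to `decoy`. This illustrates, in miniature, how a small bounded perturbation can cause a nearest-neighbour face recognizer built on an embedding to misidentify the user.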
