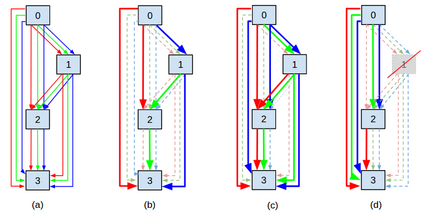

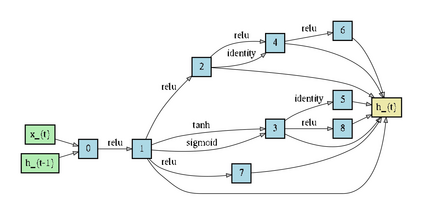

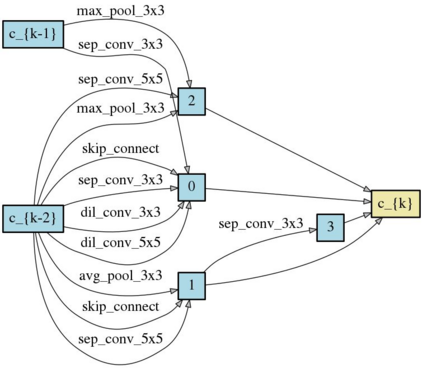

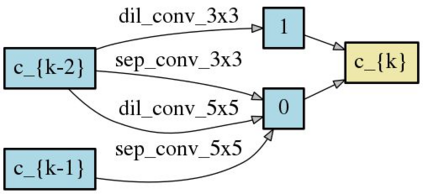

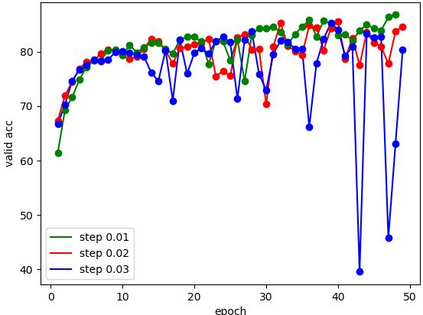

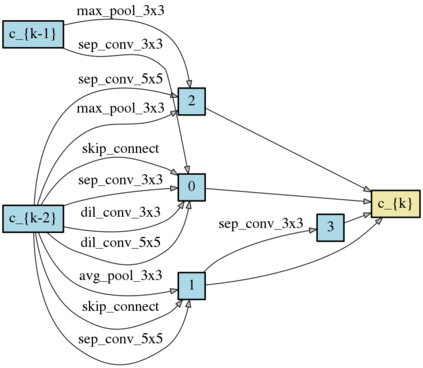

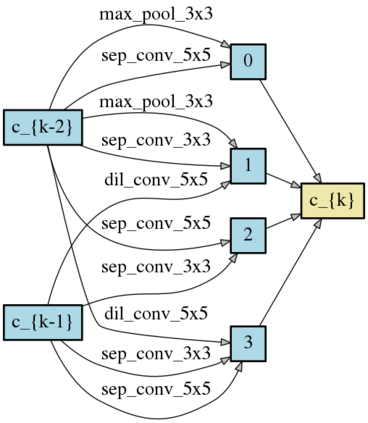

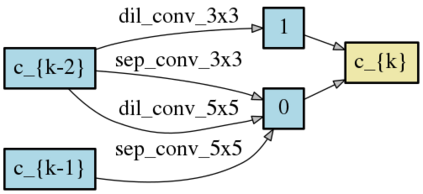

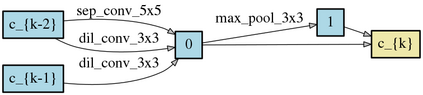

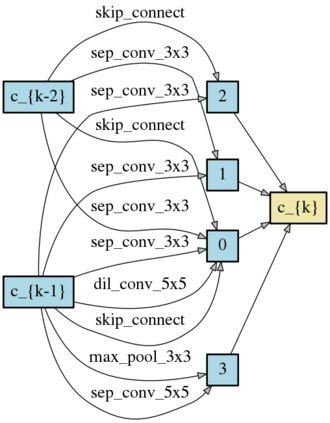

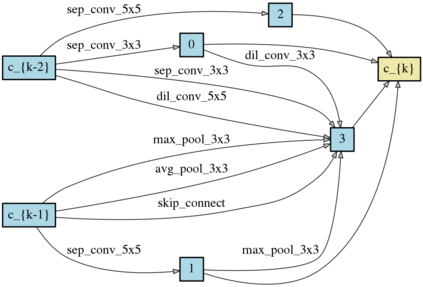

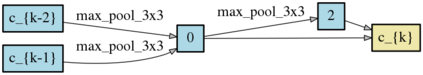

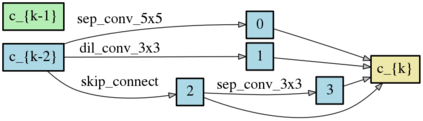

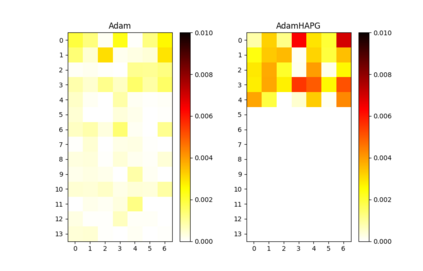

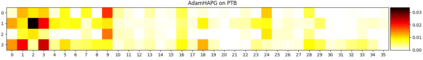

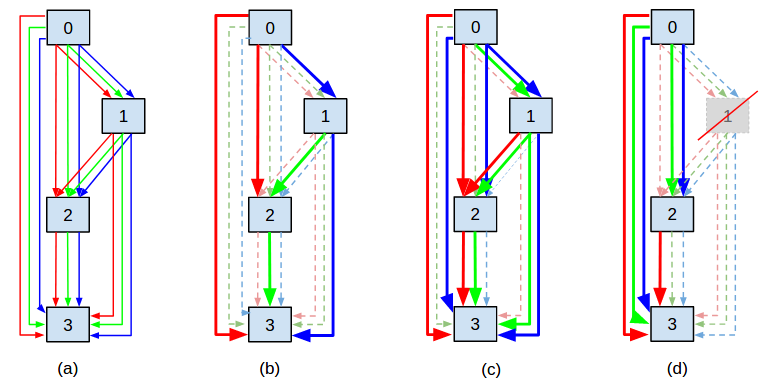

This paper aims to extend Neural Architecture Search (NAS) from Single-Path and Multi-Path Search to automated Mixed-Path Search. In particular, we model the NAS problem as a sparse supernet using a new continuous architecture representation with a mixture of sparsity constraints. The sparse supernet lets us automatically obtain sparsely mixed paths over a compact set of nodes. To optimize the proposed sparse supernet, we use a hierarchical accelerated proximal gradient algorithm within a bi-level optimization framework. Extensive experiments on Convolutional Neural Network and Recurrent Neural Network search demonstrate that the proposed method is capable of searching for compact, general, and powerful neural architectures.
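To make the optimization idea more concrete, the following is a minimal sketch of a generic accelerated proximal gradient loop (FISTA-style) with an L1 sparsity penalty. It is not the paper's hierarchical algorithm or its bi-level framework, which the abstract does not spell out. The function names `soft_threshold` and `accelerated_proximal_gradient`, the toy quadratic loss, and the values in `target` are all assumptions chosen for illustration. The point it shows is how a proximal step can drive small path weights to exactly zero while keeping the larger ones.

```python
import math


def soft_threshold(values, threshold):
    """Proximal operator of threshold * ||x||_1, applied elementwise."""
    return [math.copysign(max(abs(v) - threshold, 0.0), v) for v in values]


def accelerated_proximal_gradient(grad, x0, step, lam, iters=100):
    """Approximately minimize f(x) + lam * ||x||_1.

    grad: callable returning the gradient of the smooth part f
    step: step size (assumed to be at most 1/L for an L-smooth f)
    lam:  weight of the L1 sparsity penalty
    """
    x_prev = list(x0)
    y = list(x0)
    t = 1.0
    for _ in range(iters):
        g = grad(y)
        # Gradient step on the smooth part, then the L1 proximal step.
        x = soft_threshold(
            [yi - step * gi for yi, gi in zip(y, g)], step * lam
        )
        # Nesterov-style momentum update.
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        momentum = (t - 1.0) / t_next
        y = [xi + momentum * (xi - xpi) for xi, xpi in zip(x, x_prev)]
        x_prev, t = x, t_next
    return x_prev


# Hypothetical toy problem: four candidate path weights pulled toward `target`
# by the smooth loss f(a) = 0.5 * ||a - target||^2 (illustrative values only).
target = [0.9, 0.05, -0.4, 0.02]


def toy_grad(a):
    return [ai - ti for ai, ti in zip(a, target)]


weights = accelerated_proximal_gradient(toy_grad, [0.0] * 4, step=1.0, lam=0.1)
active_paths = [i for i, w in enumerate(weights) if w != 0.0]
```

In this toy setting the weights whose magnitude falls below `lam` are zeroed, so `active_paths` keeps only the indices of the stronger candidates. A real supernet would replace `toy_grad` with gradients of a training loss with respect to the architecture parameters.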