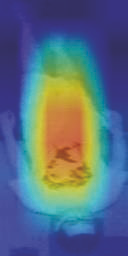

The problem of cross-modality person re-identification has received increasing attention recently because of its practical significance. Motivated by the fact that humans usually attend to the differences when they compare two similar objects, we propose a dual-path cross-modality feature learning framework that preserves intrinsic spatial structures and attends to the differences between input cross-modality image pairs. Our framework is composed of two main components: a Dual-path Spatial-structure-preserving Common Space Network (DSCSN) and a Contrastive Correlation Network (CCN). The former embeds cross-modality images into a common 3D tensor space without losing spatial structures, while the latter extracts contrastive features by dynamically comparing input image pairs. Note that the representations generated for the input RGB and infrared images are mutually dependent. We conduct extensive experiments on two publicly available RGB-IR ReID datasets, SYSU-MM01 and RegDB, and our proposed method outperforms state-of-the-art algorithms by a large margin under both the full and simplified evaluation modes.
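The abstract gives no layer-level details, so the following is only a toy NumPy sketch of the two ideas it names, not the authors' DSCSN or CCN. It assumes 1x1 channel-mixing projections for the two paths and a simple difference-based spatial attention for the comparison step; the function names `embed` and `contrastive_features`, the weight shapes, and the attention rule are all hypothetical choices made for illustration.

```python
import numpy as np


def embed(image, w_specific, w_shared):
    """Map a (C_in, H, W) image to a (C, H, W) tensor in a common space.

    Assumption: each modality has its own 1x1 projection (w_specific),
    followed by a projection shared by both paths (w_shared). Only the
    channels are mixed, so the H x W spatial layout is preserved.
    """
    hidden = np.tanh(np.einsum("oc,chw->ohw", w_specific, image))
    return np.einsum("oc,chw->ohw", w_shared, hidden)


def contrastive_features(f_rgb, f_ir):
    """Pool each tensor with attention on the locations where the pair differs.

    Assumption: attention is a softmax over the mean absolute difference
    per spatial location. Because the weights are computed from both
    tensors, each output vector depends on both inputs.
    """
    diff = np.abs(f_rgb - f_ir).mean(axis=0)      # (H, W) difference map
    attn = np.exp(diff - diff.max())              # numerically stable softmax
    attn /= attn.sum()
    v_rgb = (f_rgb * attn).sum(axis=(1, 2))       # (C,) pair-dependent vector
    v_ir = (f_ir * attn).sum(axis=(1, 2))
    return v_rgb, v_ir, attn


# Toy inputs: a 3-channel RGB image and a 1-channel infrared image.
rng = np.random.default_rng(0)
rgb = rng.random((3, 8, 4))
ir = rng.random((1, 8, 4))
w_rgb = rng.standard_normal((8, 3))       # RGB-specific path
w_ir = rng.standard_normal((8, 1))        # infrared-specific path
w_shared = rng.standard_normal((16, 8))   # shared by both paths

f_rgb = embed(rgb, w_rgb, w_shared)
f_ir = embed(ir, w_ir, w_shared)
v_rgb, v_ir, attn = contrastive_features(f_rgb, f_ir)
print(f_rgb.shape, v_rgb.shape)
```

In this sketch, pairing the same RGB tensor with a different infrared tensor changes the attention map and therefore the RGB vector as well, which is one simple way to obtain the mutual dependence the abstract mentions.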
