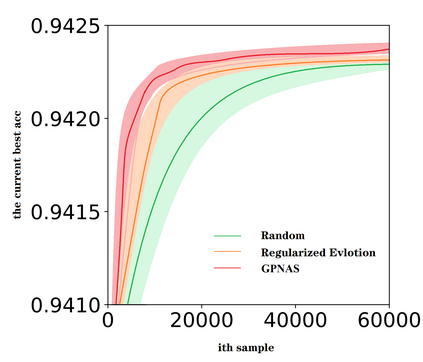

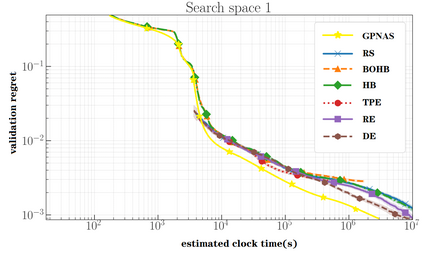

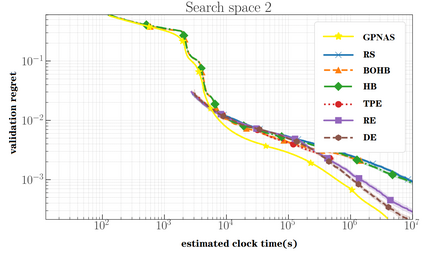

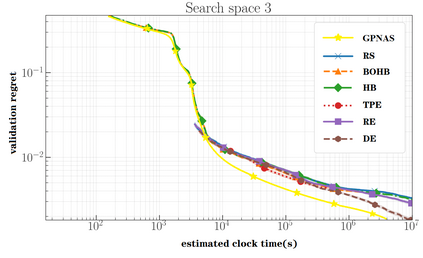

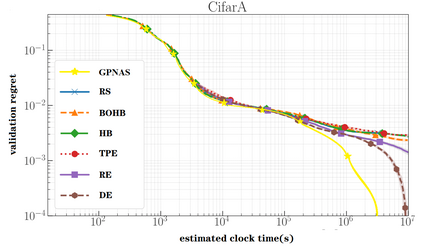

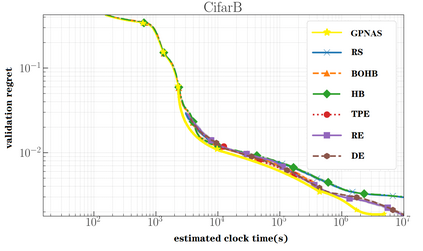

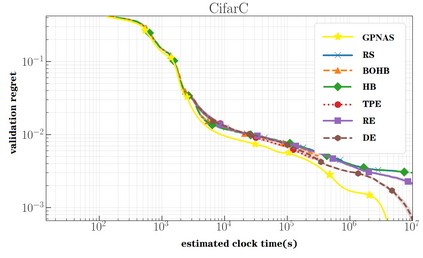

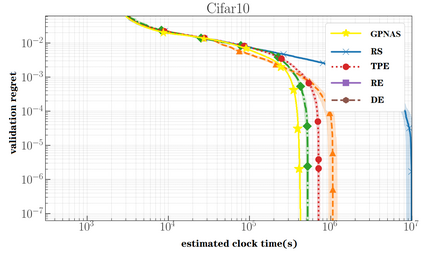

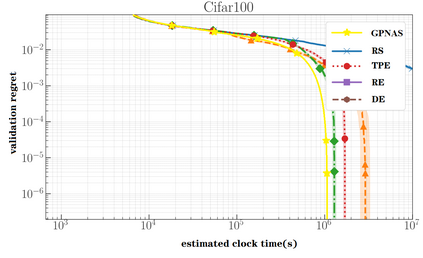

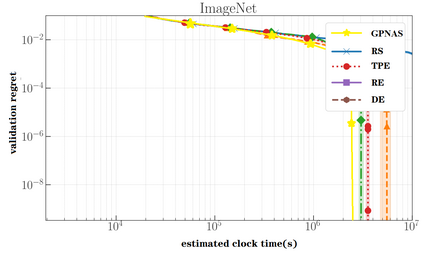

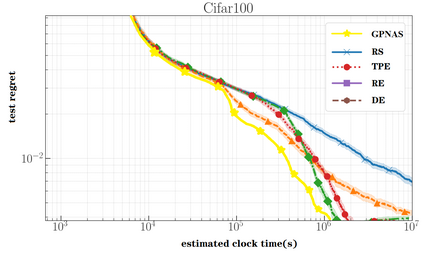

In practice, the problems encountered in Neural Architecture Search (NAS) training are rarely isolated. They usually arise as a combination of difficulties, such as incorrect compensation estimation, the curse of dimensionality, overfitting, and high complexity. In this paper, we propose a framework that decouples the network structure from the operator search space and uses two BOHB (Bayesian Optimization and HyperBand) optimizers that search the two spaces alternately. Activation functions and initialization methods are also important parts of a neural network and affect the model's generalization ability. We therefore introduce an activation-function domain and an initialization-method domain and add them to the operator search space, forming a generalized search space that improves the generalization ability of the child models. We then train a GCN-based predictor on feedback from the child models, which not only improves search efficiency but also addresses the curse of dimensionality. In addition, unlike other NAS studies, we use the predictor to analyze the stability of different network structures. Finally, we apply our framework to neural architecture search and achieve significant improvements on multiple datasets.
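
The alternating search over the two decoupled spaces can be sketched as follows. This is a minimal sketch under several assumptions. The operator, activation, and initialization names, the depth/width structure space, and `toy_evaluate` are all hypothetical. Plain random sampling also stands in for the two BOHB optimizers, so the code shows only the control flow of the alternation, not the paper's implementation.

```python
import random

# Hypothetical generalized operator space: every edge of a cell chooses an
# operator, an activation function, and an initialization method.
OPERATORS = ["conv3x3", "conv5x5", "sep_conv3x3", "max_pool3x3", "skip"]
ACTIVATIONS = ["relu", "swish", "gelu"]
INITIALIZERS = ["kaiming_normal", "xavier_uniform", "orthogonal"]

# Hypothetical structure space, decoupled from the operator choices.
DEPTHS = [8, 14, 20]
WIDTHS = [16, 32, 64]


def sample_structure(rng):
    return {"depth": rng.choice(DEPTHS), "width": rng.choice(WIDTHS)}


def sample_operators(rng, num_edges=4):
    # One (operator, activation, initializer) triple per cell edge.
    return [
        (rng.choice(OPERATORS), rng.choice(ACTIVATIONS), rng.choice(INITIALIZERS))
        for _ in range(num_edges)
    ]


def alternating_search(evaluate, rounds=3, samples_per_phase=5, seed=0):
    """Alternate between the structure space and the operator space.

    Random sampling stands in for the two BOHB optimizers; only the
    alternation between the two decoupled spaces is illustrated.
    """
    rng = random.Random(seed)
    structure = sample_structure(rng)
    operators = sample_operators(rng)
    best = evaluate(structure, operators)
    for _ in range(rounds):
        # Phase 1: search the structure space with the operators held fixed.
        for _ in range(samples_per_phase):
            candidate = sample_structure(rng)
            score = evaluate(candidate, operators)
            if score > best:
                best, structure = score, candidate
        # Phase 2: search the generalized operator space with the structure fixed.
        for _ in range(samples_per_phase):
            candidate = sample_operators(rng)
            score = evaluate(structure, candidate)
            if score > best:
                best, operators = score, candidate
    return structure, operators, best


def toy_evaluate(structure, operators):
    # Toy proxy score; a real search would train and validate the child model.
    score = structure["depth"] / 20 + structure["width"] / 64
    return score + 0.1 * sum(act == "swish" for _, act, _ in operators)


s, ops, best = alternating_search(toy_evaluate)
print(s, ops, best)
```

In a real run, each phase would hand its own space to a BOHB optimizer (for example, one from the HpBandSter package), and `evaluate` would train the child model on a budget chosen by HyperBand. Keeping the two spaces separate means each optimizer only sees a low-dimensional space, which is the motivation the abstract gives for decoupling.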

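
A small forward pass can illustrate the GCN-based predictor. The sketch assumes a cell encoded as a directed adjacency matrix with one-hot node-type features. The node types, layer sizes, and the names `GCNPredictor` and `normalize_adjacency` are hypothetical. Two graph-convolution layers use the symmetric normalization $\hat{A} = D^{-1/2}(A + A^\top + I)D^{-1/2}$ (with the sum binarized). Mean pooling and a sigmoid output follow, and the output is read as a predicted accuracy. The weights here are random. Fitting them to child-model feedback, for example with a regression loss on measured validation accuracies, is omitted.

```python
import math
import random


def normalize_adjacency(adj):
    """Return D^-1/2 (A + A^T + I) D^-1/2, treating the cell DAG as undirected."""
    n = len(adj)
    a = [[1.0 if (i == j or adj[i][j] or adj[j][i]) else 0.0 for j in range(n)]
         for i in range(n)]
    deg = [sum(row) for row in a]  # every degree is >= 1 because of the self-loop
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def relu(m):
    return [[max(0.0, v) for v in row] for row in m]


class GCNPredictor:
    """Hypothetical predictor: two GCN layers, mean pooling, linear head, sigmoid."""

    def __init__(self, in_dim, hidden_dim=8, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)]
                    for _ in range(rows)]

        self.w1 = init(in_dim, hidden_dim)
        self.w2 = init(hidden_dim, hidden_dim)
        self.w_out = [rng.gauss(0.0, 1.0 / math.sqrt(hidden_dim)) for _ in range(hidden_dim)]

    def predict(self, adj, features):
        a_hat = normalize_adjacency(adj)
        h = relu(matmul(matmul(a_hat, features), self.w1))  # layer 1: A_hat X W1
        h = relu(matmul(matmul(a_hat, h), self.w2))         # layer 2: A_hat H W2
        pooled = [sum(col) / len(h) for col in zip(*h)]      # mean over nodes
        logit = sum(p * w for p, w in zip(pooled, self.w_out))
        return 1.0 / (1.0 + math.exp(-logit))                # squash to (0, 1)


NODE_TYPES = ["input", "conv3x3", "sep_conv3x3", "max_pool3x3", "output"]


def one_hot(types):
    return [[1.0 if t == name else 0.0 for name in NODE_TYPES] for t in types]


# Cell: input -> conv3x3 -> output and input -> max_pool3x3 -> output.
adj = [
    [0, 1, 1, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
features = one_hot(["input", "conv3x3", "max_pool3x3", "output"])
predictor = GCNPredictor(in_dim=len(NODE_TYPES))
score = predictor.predict(adj, features)
print(score)
```

Pure Python lists keep the example dependency-free. A practical predictor would use a tensor library and batch many candidate cells, so that scoring a candidate is far cheaper than training it.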