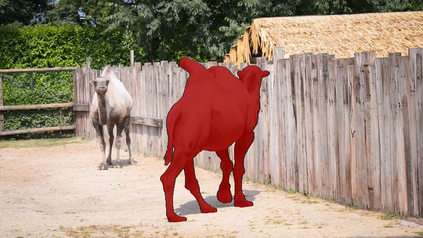

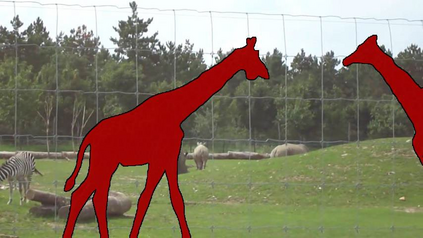

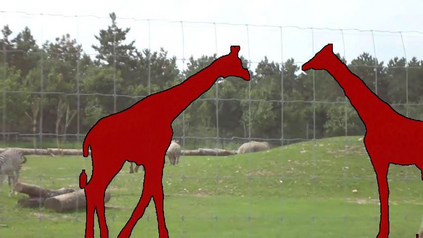

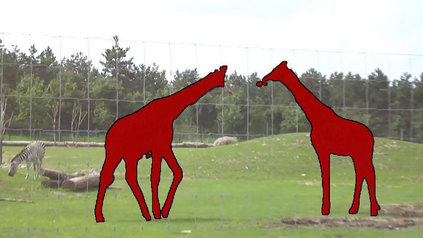

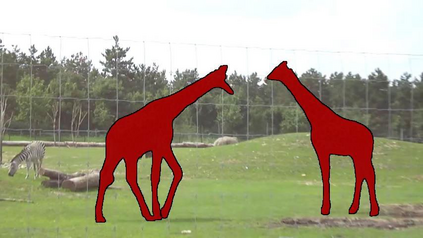

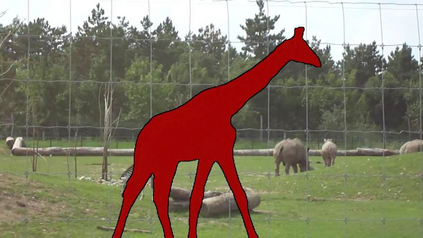

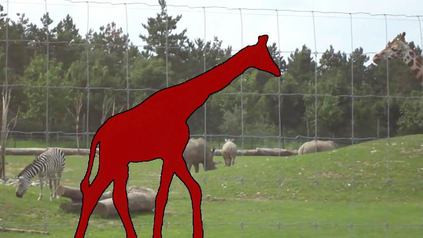

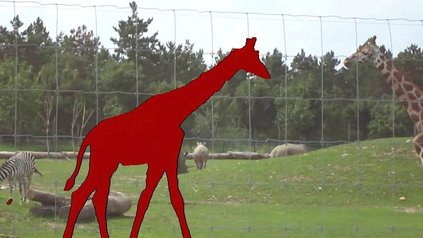

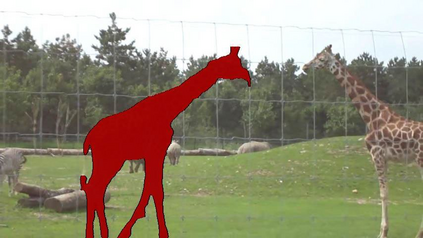

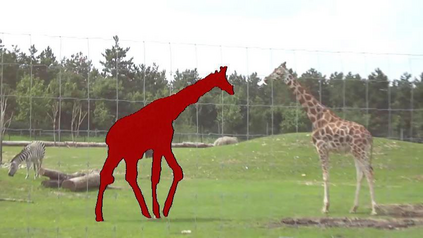

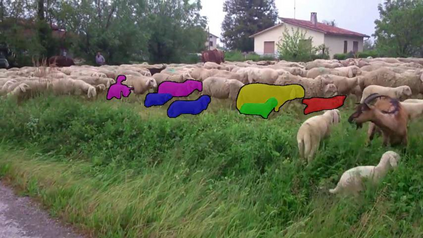

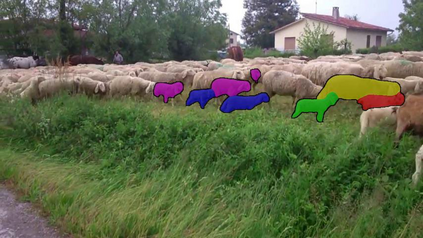

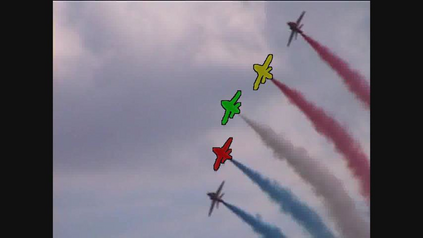

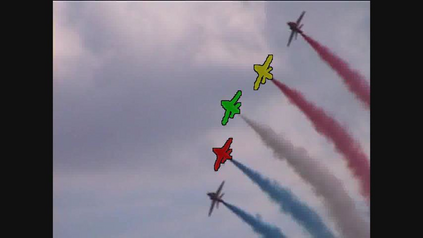

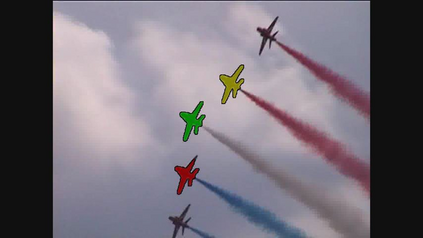

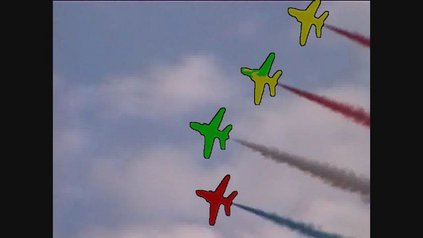

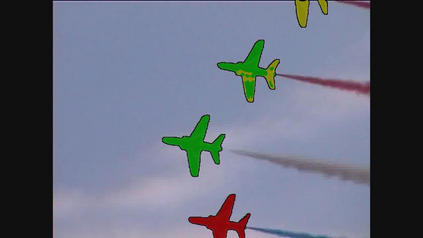

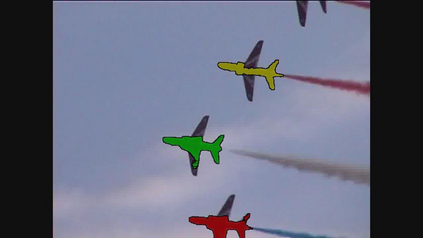

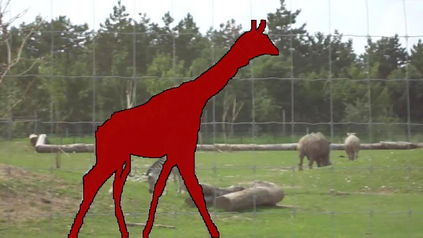

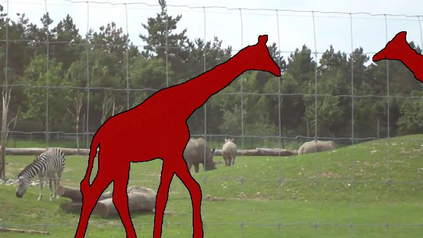

Semi-supervised video object segmentation (VOS) aims to densely track certain designated objects in videos. One of the main challenges in this task is the existence of background distractors that appear similar to the target objects. We propose three novel strategies to suppress such distractors: 1) a spatio-temporally diversified template construction scheme to obtain generalized properties of the target objects; 2) a learnable distance-scoring function to exclude spatially-distant distractors by exploiting the temporal consistency between two consecutive frames; 3) swap-and-attach augmentation to force each object to have unique features by providing training samples containing entangled objects. On all public benchmark datasets, our model achieves a comparable performance to contemporary state-of-the-art approaches, even with real-time performance. Qualitative results also demonstrate the superiority of our approach over existing methods. We believe our approach will be widely used for future VOS research.

翻译:半监督的视频对象分割(VOS)旨在对视频中的某些指定物体进行密集跟踪。这项任务的主要挑战之一是存在与目标对象相似的背景分散器。我们提出了三种新的战略来抑制这种分散器:1)一个平方-时位多样化的模板构建计划,以获取目标物体的普遍特性;2)一个可学习的远程测量功能,以利用连续两个框架之间的时间一致性,排除空间上较远的分散器;3)交换式和附加式扩增,以通过提供含有缠绕物体的培训样本,迫使每个物体具有独特的特征。在所有公共基准数据集中,我们的模型取得了与当代最新方法相似的性能,即使实时性能也是如此。定性结果还表明我们的方法优于现有方法。我们认为,我们的方法将被广泛用于未来的VOS研究。