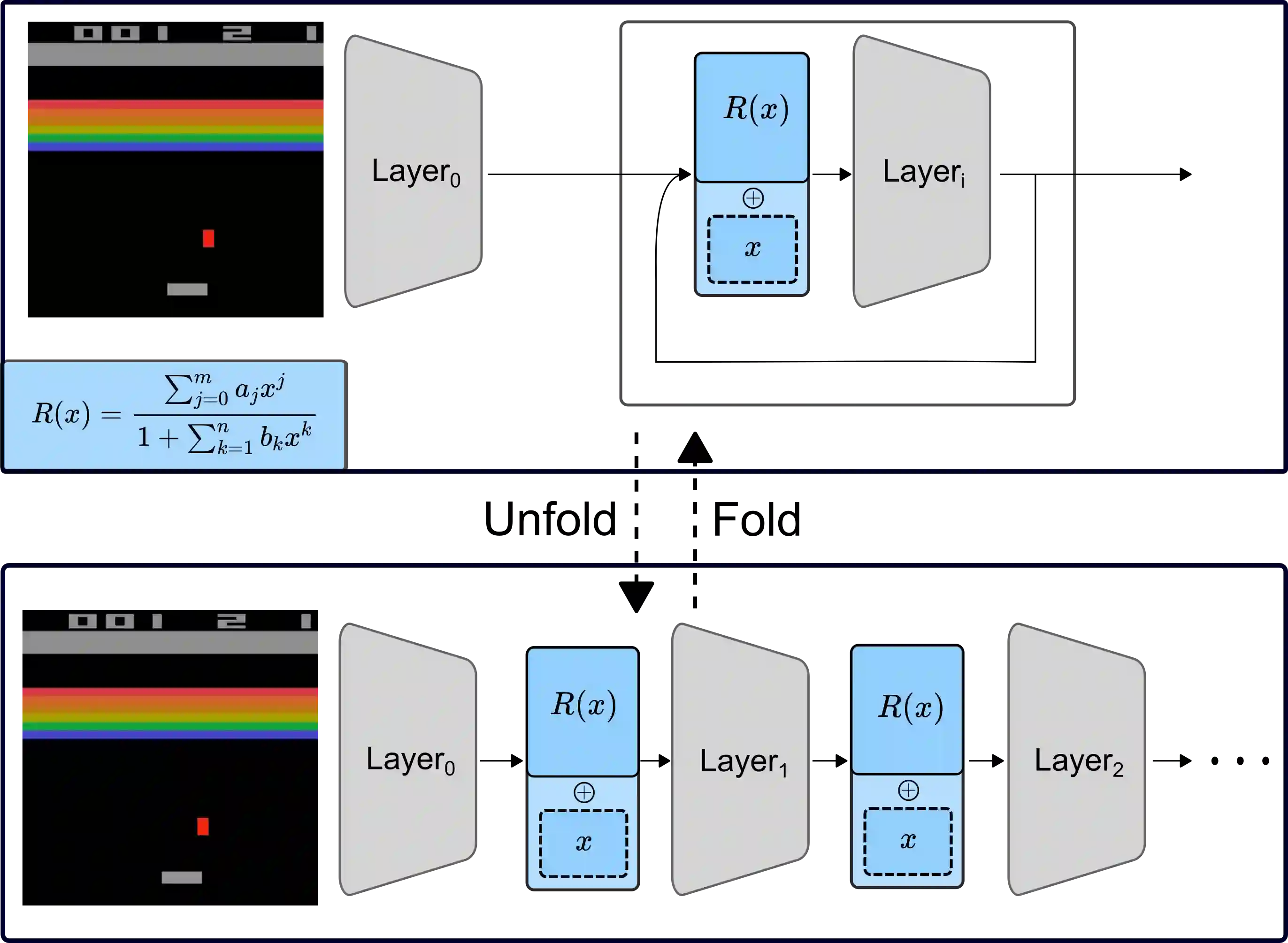

Recent insights from biology show that intelligence emerges not only from the connections between neurons, but also from individual neurons, which shoulder more computational responsibility than previously assumed. Current neural network architecture design and search are biased toward fixed activation functions. Using more advanced, learnable activation functions provides neural networks with higher learning capacity. However, general guidance for building such networks is still missing. In this work, we first explain why rational functions (rationals) offer an optimal choice for activation functions. We then show that they are closed under residual connections and, inspired by recurrence for residual networks, we derive a self-regularized version of rationals: Recurrent Rationals. We demonstrate that (Recurrent) Rational Networks lead to large performance improvements on image classification and deep reinforcement learning.
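
To make the closure claim concrete, here is a minimal sketch, not the authors' implementation. It assumes a rational activation of the form R(x) = P(x)/Q(x) with polynomial P and Q, and checks numerically that a residual connection x + R(x) equals (x·Q(x) + P(x))/Q(x), which is again a rational function. The helper names and the coefficient values are hypothetical and chosen only for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial


def rational(p, q):
    """Return the function x -> P(x) / Q(x) for coefficient lists p and q
    (lowest degree first). Hypothetical helper for illustration only."""
    P, Q = Polynomial(p), Polynomial(q)
    return lambda x: P(x) / Q(x)


def residual_of_rational(p, q):
    """Coefficients (p', q') such that P'(x) / Q'(x) = x + P(x) / Q(x).

    Uses x + P/Q = (x * Q + P) / Q, so the denominator is unchanged and
    the numerator is again a polynomial.
    """
    P, Q = Polynomial(p), Polynomial(q)
    x = Polynomial([0.0, 1.0])  # the identity polynomial
    return (x * Q + P).coef, Q.coef


# Hypothetical coefficients; Q(x) = 1 + 0.2 x^2 is strictly positive,
# so the rational has no poles on the real line.
p = [0.1, 0.5, 0.3]
q = [1.0, 0.0, 0.2]

xs = np.linspace(-3.0, 3.0, 7)
r = rational(p, q)

p_res, q_res = residual_of_rational(p, q)
r_res = rational(p_res, q_res)

# The residual block x + R(x) and the single rational R'(x) agree.
assert np.allclose(xs + r(xs), r_res(xs))
```

The numerator degree grows by at most one relative to the denominator, so the result stays within the family of rational functions, which is the sense in which a residual connection around a rational can be folded into one rational.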
