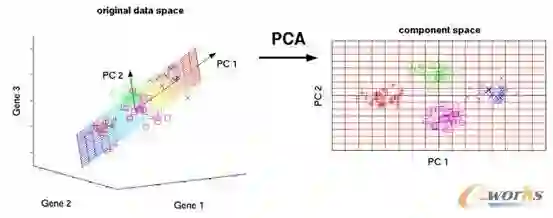

A wireless federated learning system is investigated in which a server and workers exchange uncoded information over orthogonal wireless channels. Because the workers frequently upload local gradients to the server over bandwidth-limited channels, the uplink from the workers to the server becomes a communication bottleneck. To relieve this bottleneck, a one-shot distributed principal component analysis (PCA) is leveraged to reduce the dimension of the uploaded gradients. Building on these low-dimensional gradients and Nesterov's momentum, a PCA-based wireless federated learning (PCA-WFL) algorithm and its accelerated version (PCA-AWFL) are proposed. For non-convex loss functions, a finite-time analysis quantifies the impact of the system hyper-parameters on the convergence of the PCA-WFL and PCA-AWFL algorithms. The PCA-AWFL algorithm is theoretically shown to converge faster than the PCA-WFL algorithm. In addition, the convergence rates of both algorithms quantitatively reveal a linear speedup with respect to the number of workers over the vanilla gradient descent algorithm. Numerical results demonstrate the improved convergence rates of the proposed PCA-WFL and PCA-AWFL algorithms over the benchmarks.
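The abstract does not spell out how the one-shot distributed PCA or the momentum update are carried out, so the following is only a minimal sketch under stated assumptions, not the PCA-WFL/PCA-AWFL algorithms themselves. It assumes that (i) before training, each worker computes the top-k principal directions of a few local gradient samples and uploads them once; (ii) the server averages the resulting projection matrices and broadcasts an orthonormal basis U of their dominant k-dimensional eigenspace; (iii) in every round each worker uploads only the k coefficients U^T g instead of its d-dimensional gradient g; and (iv) the server applies a look-ahead form of Nesterov's momentum to the averaged reconstruction U z. All function names, the step size `lr`, the momentum coefficient `beta`, and the toy quadratic losses are hypothetical, and the orthogonal wireless channels are idealized as noiseless. Plain Python is used for self-containment; a practical version would use a linear-algebra library.

```python
import math
import random

Vector = list[float]
Matrix = list[list[float]]

def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))

def matvec(a: Matrix, x: Vector) -> Vector:
    return [dot(row, x) for row in a]

def orthonormalize(vectors: list[Vector]) -> list[Vector]:
    """Modified Gram-Schmidt; raises if the vectors are (numerically) dependent."""
    basis: list[Vector] = []
    for v in vectors:
        w = list(v)
        for q in basis:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm < 1e-12:
            raise ValueError("rank-deficient input")
        basis.append([wi / norm for wi in w])
    return basis

def top_k_subspace(a: Matrix, k: int, iters: int = 50, seed: int = 0) -> list[Vector]:
    """Orthonormal basis of the dominant k-dim eigenspace of a symmetric PSD
    matrix, computed by block power (subspace) iteration."""
    rng = random.Random(seed)
    q = orthonormalize([[rng.gauss(0.0, 1.0) for _ in a] for _ in range(k)])
    for _ in range(iters):
        q = orthonormalize([matvec(a, v) for v in q])
    return q

def second_moment(samples: list[Vector]) -> Matrix:
    """Uncentered second-moment matrix (1/n) * sum_s s s^T of gradient samples."""
    n, d = len(samples), len(samples[0])
    return [[sum(s[i] * s[j] for s in samples) / n for j in range(d)] for i in range(d)]

def one_shot_distributed_pca(worker_samples: list[list[Vector]], k: int) -> list[Vector]:
    """Hypothetical one-shot scheme: each worker sends its local top-k basis once;
    the server averages the projectors V_i V_i^T and returns an orthonormal
    basis of the top-k eigenspace of that average."""
    d, m = len(worker_samples[0][0]), len(worker_samples)
    avg_proj = [[0.0] * d for _ in range(d)]
    for samples in worker_samples:
        local_basis = top_k_subspace(second_moment(samples), k)
        for i in range(d):
            for j in range(d):
                avg_proj[i][j] += sum(b[i] * b[j] for b in local_basis) / m
    return top_k_subspace(avg_proj, k)

def compress(u: list[Vector], g: Vector) -> Vector:
    return [dot(b, g) for b in u]  # k coefficients U^T g are uploaded instead of d

def decompress(u: list[Vector], z: Vector) -> Vector:
    return [sum(zj * b[i] for zj, b in zip(z, u)) for i in range(len(u[0]))]  # U z

def train_nesterov_pca(grad_fns, u, x0, lr=0.2, beta=0.5, steps=100) -> Vector:
    """Simulated training loop: look-ahead Nesterov momentum on averaged low-dim gradients."""
    x, v, m = list(x0), [0.0] * len(x0), len(grad_fns)
    for _ in range(steps):
        y = [xi + beta * vi for xi, vi in zip(x, v)]  # look-ahead point sent to workers
        uploads = [compress(u, grad(y)) for grad in grad_fns]  # k floats per worker
        z = [sum(col) / m for col in zip(*uploads)]  # server averages in k dims
        g_hat = decompress(u, z)  # lift back to the d-dim parameter space
        v = [beta * vi - lr * gi for vi, gi in zip(v, g_hat)]
        x = [xi + vi for xi, vi in zip(x, v)]
    return x

def demo(num_workers: int = 3, d: int = 6, k: int = 2):
    """Toy check: f_i(x) = 0.5 * ||x - a_i||^2 with every a_i in a hidden 2-D
    subspace, so gradients at points of that subspace stay inside it."""
    s = 1 / math.sqrt(2)
    hidden = [[s, s] + [0.0] * (d - 2), [0.0, 0.0, s, -s] + [0.0] * (d - 4)]
    rng = random.Random(1)

    def point(c1: float, c2: float) -> Vector:
        return [c1 * p + c2 * q for p, q in zip(*hidden)]

    targets = [point(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(num_workers)]
    grad_fns = [lambda x, a=a: [xi - ai for xi, ai in zip(x, a)] for a in targets]
    # Warm-up gradient samples, used only for the one-shot PCA round.
    samples = [[g(point(rng.uniform(-1, 1), rng.uniform(-1, 1))) for _ in range(5)] for g in grad_fns]
    u = one_shot_distributed_pca(samples, k)
    x = train_nesterov_pca(grad_fns, u, [0.0] * d)
    x_star = [sum(col) / num_workers for col in zip(*targets)]  # minimizer of the average loss
    return x, x_star, len(compress(u, x))

if __name__ == "__main__":
    x, x_star, upload_len = demo()
    print(max(abs(a - b) for a, b in zip(x, x_star)), upload_len)
```

Here `demo()` returns the final iterate, the minimizer of the averaged loss, and the number of floats each worker uploads per round (k = 2 instead of d = 6). Because the toy gradients lie exactly in a 2-D subspace, the projection loses nothing in this example; with real gradients, the discarded components introduce an approximation error.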
