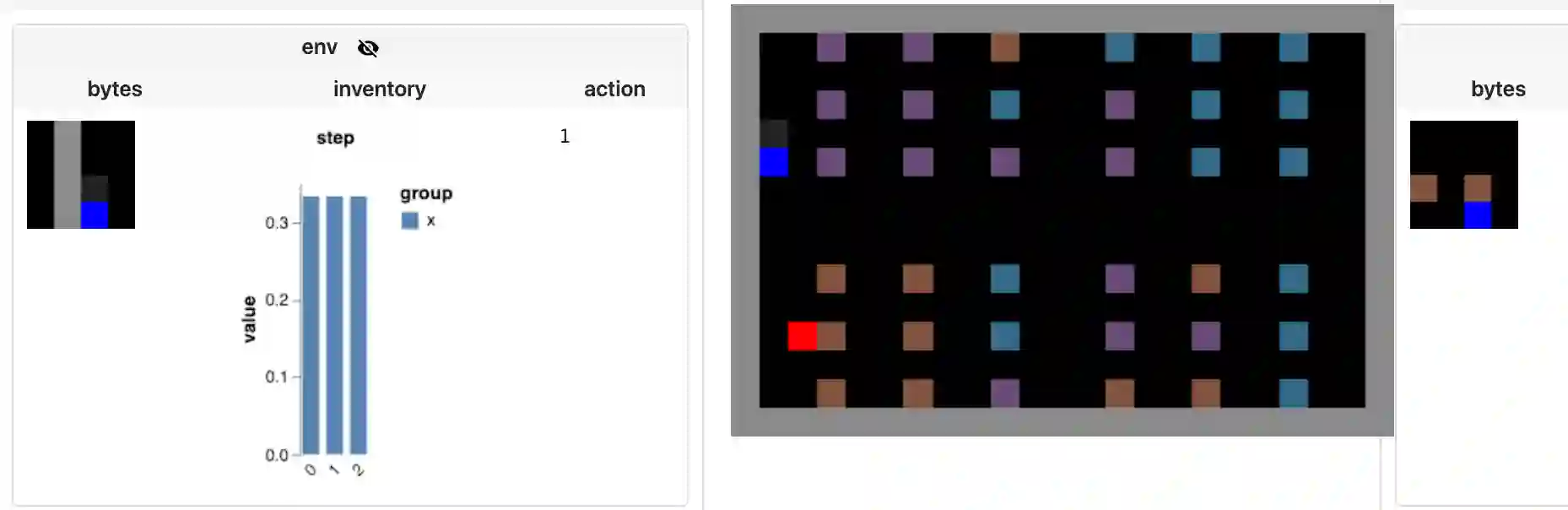

Learning to play optimally against any mixture over a diverse set of strategies is of significant practical interest in competitive games. In this paper, we propose simplex-NeuPL, which satisfies two desiderata simultaneously: i) learning a population of strategically diverse basis policies, represented by a single conditional network; ii) using the same network, learning best-responses to any mixture over the simplex of basis policies. We show that the resulting conditional policies incorporate prior information about their opponents effectively, enabling near-optimal returns against arbitrary mixture policies in a game with tractable best-responses. We verify that such policies behave Bayes-optimally under uncertainty and offer insights into using this flexibility at test time. Finally, we offer evidence that learning best-responses to any mixture policy is an effective auxiliary task for strategic exploration, which, by itself, can lead to more performant populations.
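To make the notion of a best-response to a mixture over the simplex concrete, the following is a minimal sketch on rock-paper-scissors, a toy game where best-responses can be computed exactly. It is not the simplex-NeuPL training procedure: the basis policies are assumed to be the three pure strategies, and the names `PAYOFF`, `sample_simplex`, `expected_payoffs`, and `best_response` are hypothetical helpers introduced only for illustration.

```python
import random

# Row player's payoff in rock-paper-scissors: PAYOFF[my_action][opponent_action].
# Assumption: the opponent's basis policies are the three pure strategies.
PAYOFF = [
    [0, -1, 1],   # rock
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
]


def sample_simplex(n, rng):
    """Draw a mixture uniformly from the simplex by normalizing exponential draws."""
    draws = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(draws)
    return [d / total for d in draws]


def expected_payoffs(mixture):
    """Expected return of each of our actions against the opponent mixture."""
    return [
        sum(prob * PAYOFF[action][opp] for opp, prob in enumerate(mixture))
        for action in range(len(PAYOFF))
    ]


def best_response(mixture):
    """Index of the action with the highest expected return against the mixture."""
    values = expected_payoffs(mixture)
    return max(range(len(values)), key=values.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    sigma = sample_simplex(3, rng)  # a random point on the simplex
    print("mixture:", sigma)
    print("best-response action index:", best_response(sigma))
```

In this toy setting the mixture plays the role of the prior over opponents that the conditional policy receives as input. The abstract describes a single network that learns such responses for every point on the simplex rather than enumerating payoffs as done here.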
