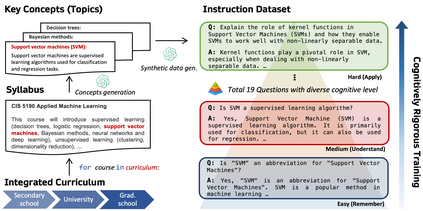

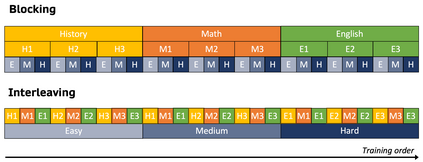

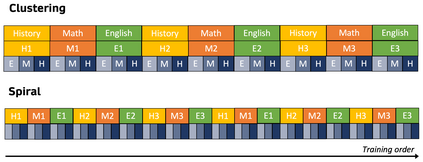

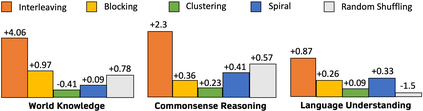

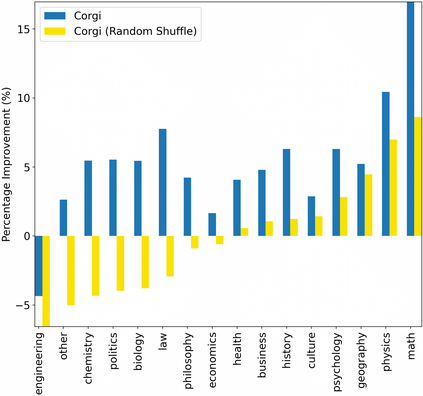

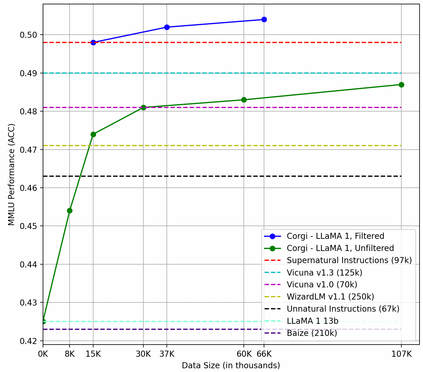

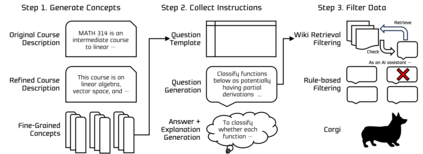

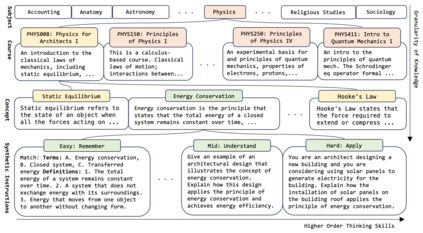

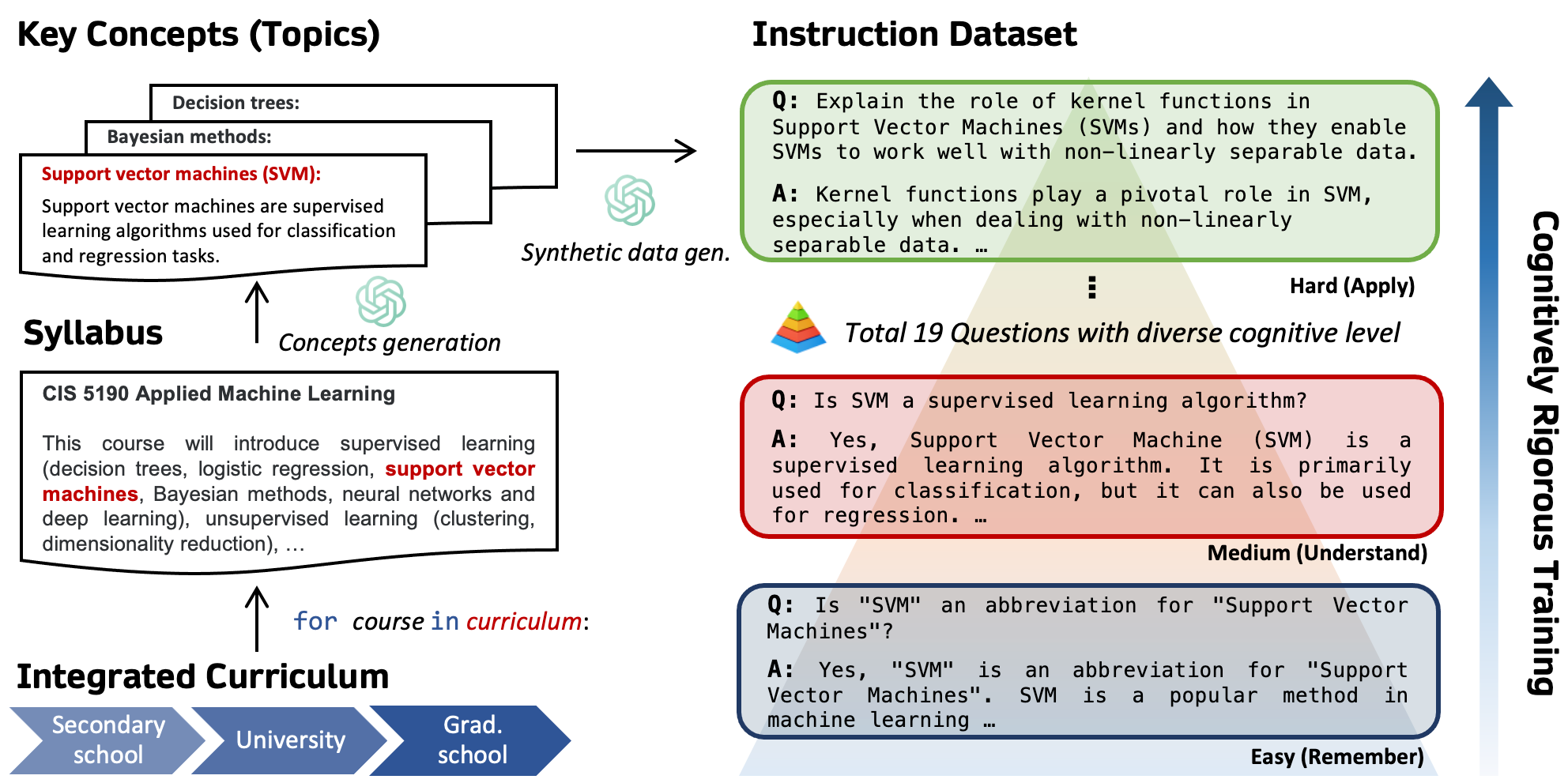

In building instruction-tuned large language models (LLMs), the importance of a deep understanding of human knowledge can be often overlooked by the importance of instruction diversification. This research proposes a novel approach to instruction tuning by integrating a structured cognitive learning methodology that takes inspiration from the systematic progression and cognitively stimulating nature of human education through two key steps. First, our synthetic instruction data generation pipeline, designed with some references to human educational frameworks, is enriched with meta-data detailing topics and cognitive rigor for each instruction. Specifically, our generation framework is infused with questions of varying levels of rigorousness, inspired by Bloom's Taxonomy, a classic educational model for structured curriculum learning. Second, during instruction tuning, we curate instructions such that questions are presented in an increasingly complex manner utilizing the information on question complexity and cognitive rigorousness produced by our data generation pipeline. Our human-inspired curriculum learning yields significant performance enhancements compared to uniform sampling or round-robin, improving MMLU by 3.06 on LLaMA 2. We conduct extensive experiments and find that the benefits of our approach are consistently observed in eight other benchmarks. We hope that our work will shed light on the post-training learning process of LLMs and its similarity with their human counterpart.

翻译:暂无翻译