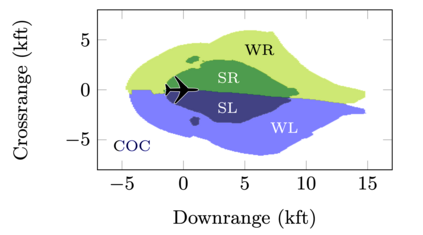

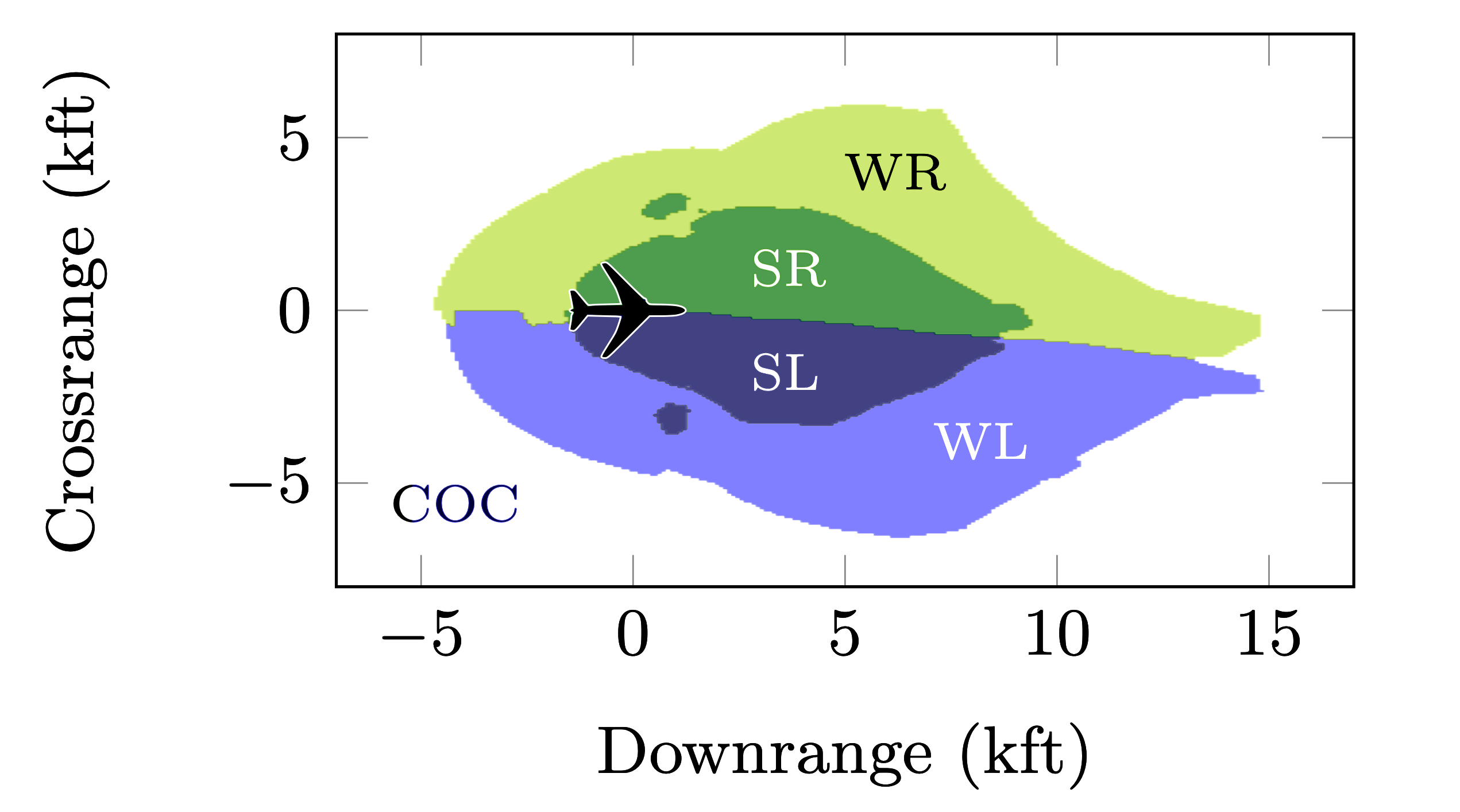

Deep neural networks (DNNs) are increasingly applied in safety-critical domains, such as self-driving cars, unmanned aircraft, and medical diagnosis. It is of fundamental importance to certify the safety of these DNNs, i.e. that they comply with a formal safety specification. While safety certification tools exactly answer this question, they are of no help in debugging unsafe DNNs, requiring the developer to iteratively verify and modify the DNN until safety is eventually achieved. Hence, a repair technique needs to be developed that can produce a safe DNN automatically. To address this need, we present SpecRepair, a tool that efficiently eliminates counter-examples from a DNN and produces a provably safe DNN without harming its classification accuracy. SpecRepair combines specification-based counter-example search and resumes training of the DNN, penalizing counter-examples and certifying the resulting DNN. We evaluate SpecRepair's effectiveness on the ACAS Xu benchmark, a DNN-based controller for unmanned aircraft, and two image classification benchmarks. The results show that SpecRepair is more successful in producing safe DNNs than comparable methods, has a shorter runtime, and produces safe DNNs while preserving their classification accuracy.

翻译:深神经网络(DNNs)越来越多地应用于安全关键领域,如自驾驶汽车、无人驾驶飞机和医疗诊断。验证这些DNS的安全性至关重要,即它们符合正式的安全规格。安全认证工具恰好回答了这个问题,但它们在调试不安全的DNNs方面毫无帮助,要求开发者在最终实现安全之前反复核查和修改DNN。因此,需要开发一种能够自动产生安全DNNN的修理技术。为了满足这一需要,我们提出了SpecRepair,这是一个有效消除DNN的反试的工具,并产生一个可察觉的安全的DNNNN,同时又不损害其分类的准确性。SpecRepair综合了基于规格的反样搜索,并恢复了DNNN的培训,惩罚反样并认证了DNN。我们评估了SpecRepair在AC Xu基准上的效力,一个基于DNNN的D控制器,以及两个图像分类基准,结果显示SREpirs在维护安全性方面比DNNR的精确性更短。