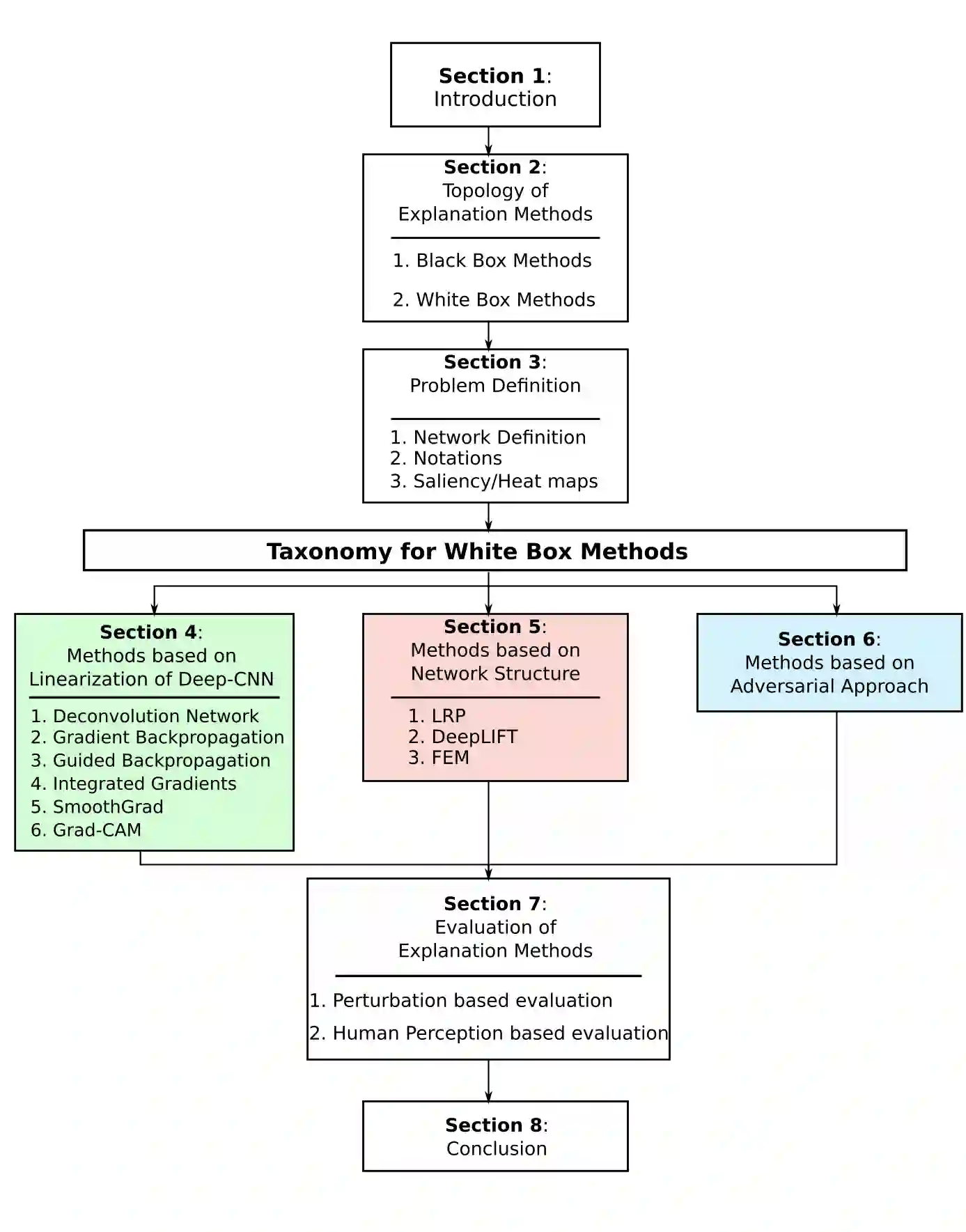

In recent years, deep learning has become prevalent for solving problems across multiple domains. Convolutional Neural Networks (CNNs), in particular, have demonstrated state-of-the-art performance on the task of image classification. However, the decisions made by these networks are not transparent and cannot be directly interpreted by a human. Several approaches have been proposed to explain and understand the reasoning behind a prediction made by a network. In this paper, we propose a taxonomy that groups these methods based on their assumptions and implementations. We focus primarily on white-box methods, which leverage information about the internal architecture of a network to explain its decision. Given the task of image classification and a trained CNN, this work aims to provide a comprehensive and detailed overview of a set of methods that can be used to create explanation maps for a particular image; these maps assign an importance score to each pixel of the image based on its contribution to the network's decision. We also propose a further classification of the white-box methods based on their implementations, to enable better comparisons and help researchers find the methods best suited to different scenarios.
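As a concrete illustration of what an explanation map is, the sketch below computes a vanilla-gradient saliency map: the absolute gradient of the target class score with respect to each input pixel, one of the simplest white-box methods. It rests on stated assumptions. A toy two-layer ReLU network with random weights stands in for a trained CNN so that the gradient can be written out by hand with NumPy, and the names `forward` and `gradient_saliency` are hypothetical, not taken from the paper or any library.

```python
import numpy as np


def forward(x, W1, b1, W2, b2):
    """Toy two-layer ReLU network (hypothetical stand-in for a trained CNN).

    x is a flattened image; returns the hidden pre-activations and class logits.
    """
    pre = W1 @ x + b1               # hidden pre-activations
    hidden = np.maximum(pre, 0.0)   # ReLU
    logits = W2 @ hidden + b2       # one score per class
    return pre, logits


def gradient_saliency(image, W1, b1, W2, b2, target_class):
    """Vanilla-gradient explanation map: |d score_c / d pixel| for every pixel.

    The gradient is derived by hand from the network's internal weights,
    which is what makes this a white-box method.
    """
    x = image.ravel()
    pre, _ = forward(x, W1, b1, W2, b2)
    relu_mask = (pre > 0).astype(x.dtype)        # derivative of ReLU
    grad_hidden = W2[target_class] * relu_mask   # d score_c / d pre
    grad_x = W1.T @ grad_hidden                  # chain rule back to the pixels
    return np.abs(grad_x).reshape(image.shape)   # one importance score per pixel


# Usage with random (untrained) weights, purely for illustration.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
W1, b1 = rng.standard_normal((16, 64)), np.zeros(16)
W2, b2 = rng.standard_normal((3, 16)), np.zeros(3)
saliency = gradient_saliency(image, W1, b1, W2, b2, target_class=1)
print(saliency.shape)  # (8, 8)
```

With a real CNN, the hand-written chain rule would normally be replaced by the framework's automatic differentiation; the output is the same kind of object, a map with one non-negative score per pixel.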
