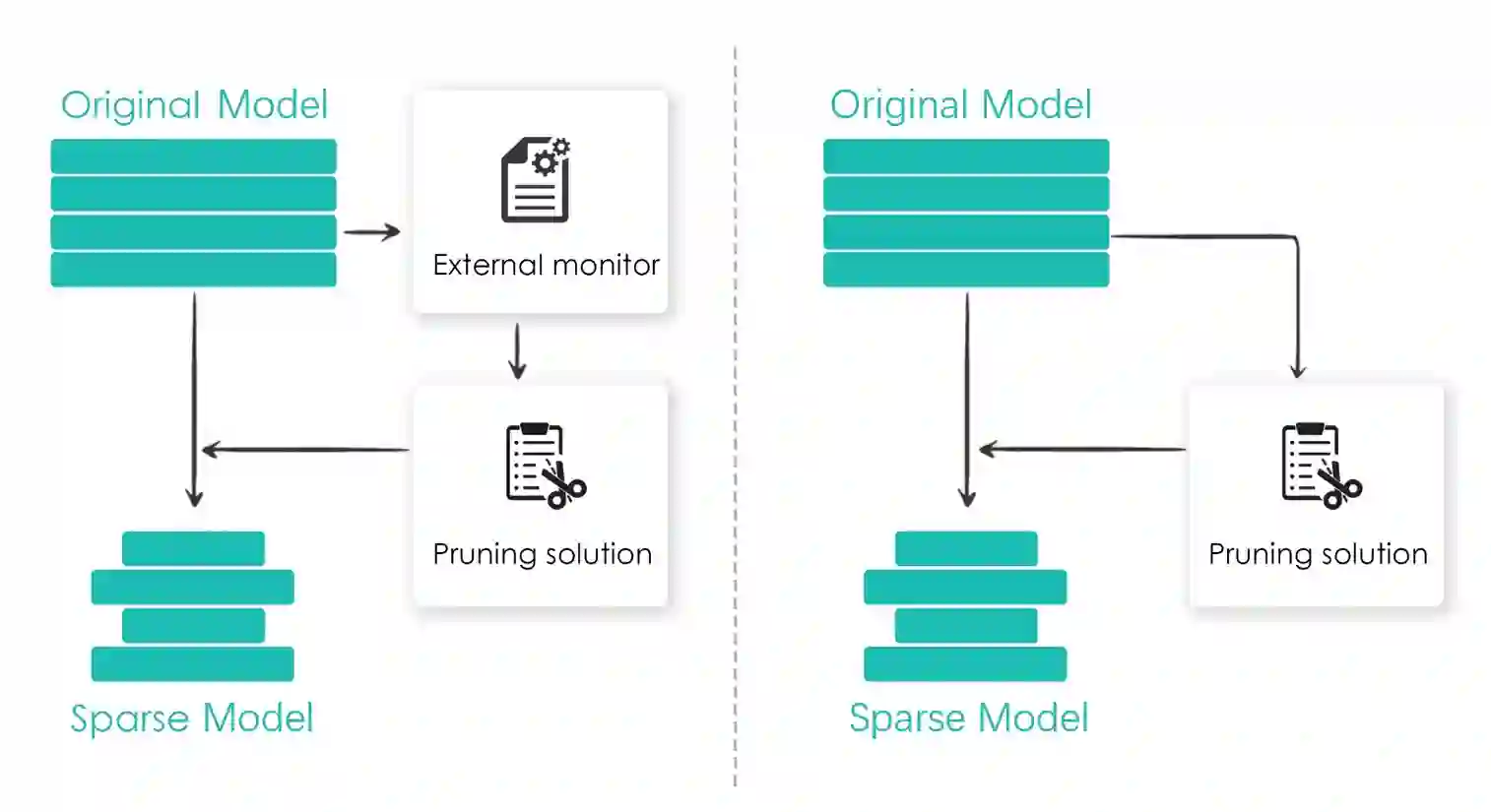

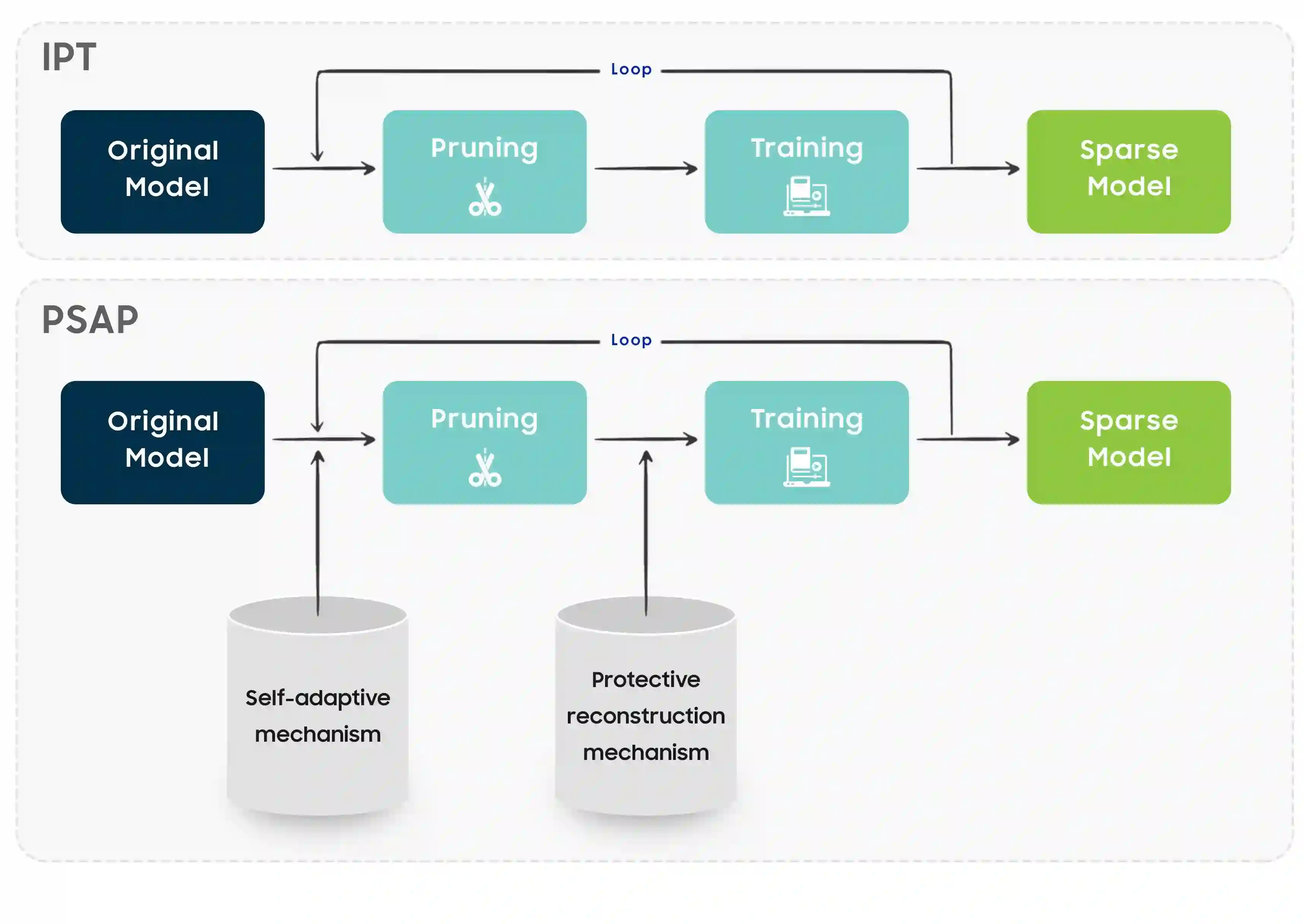

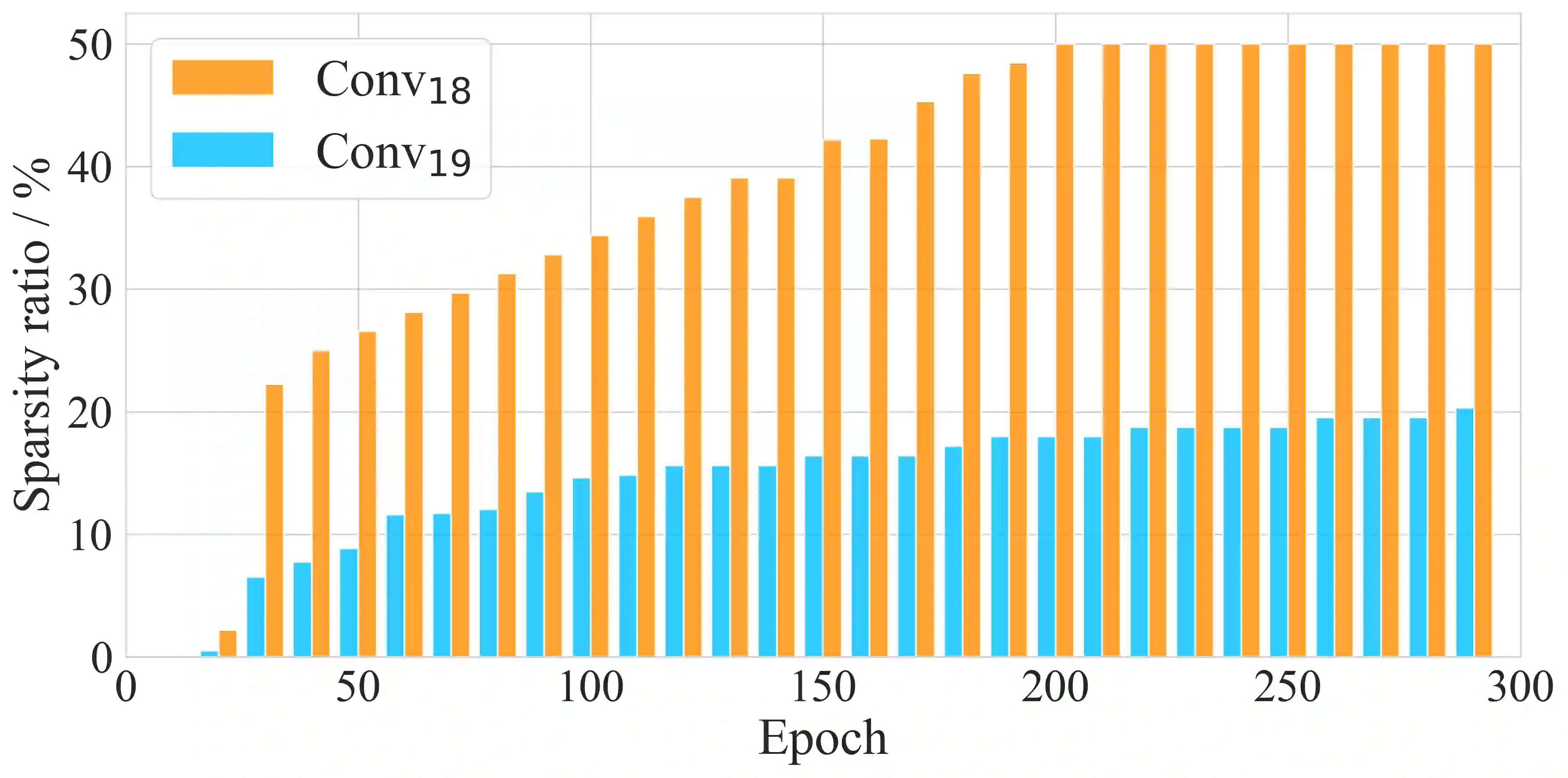

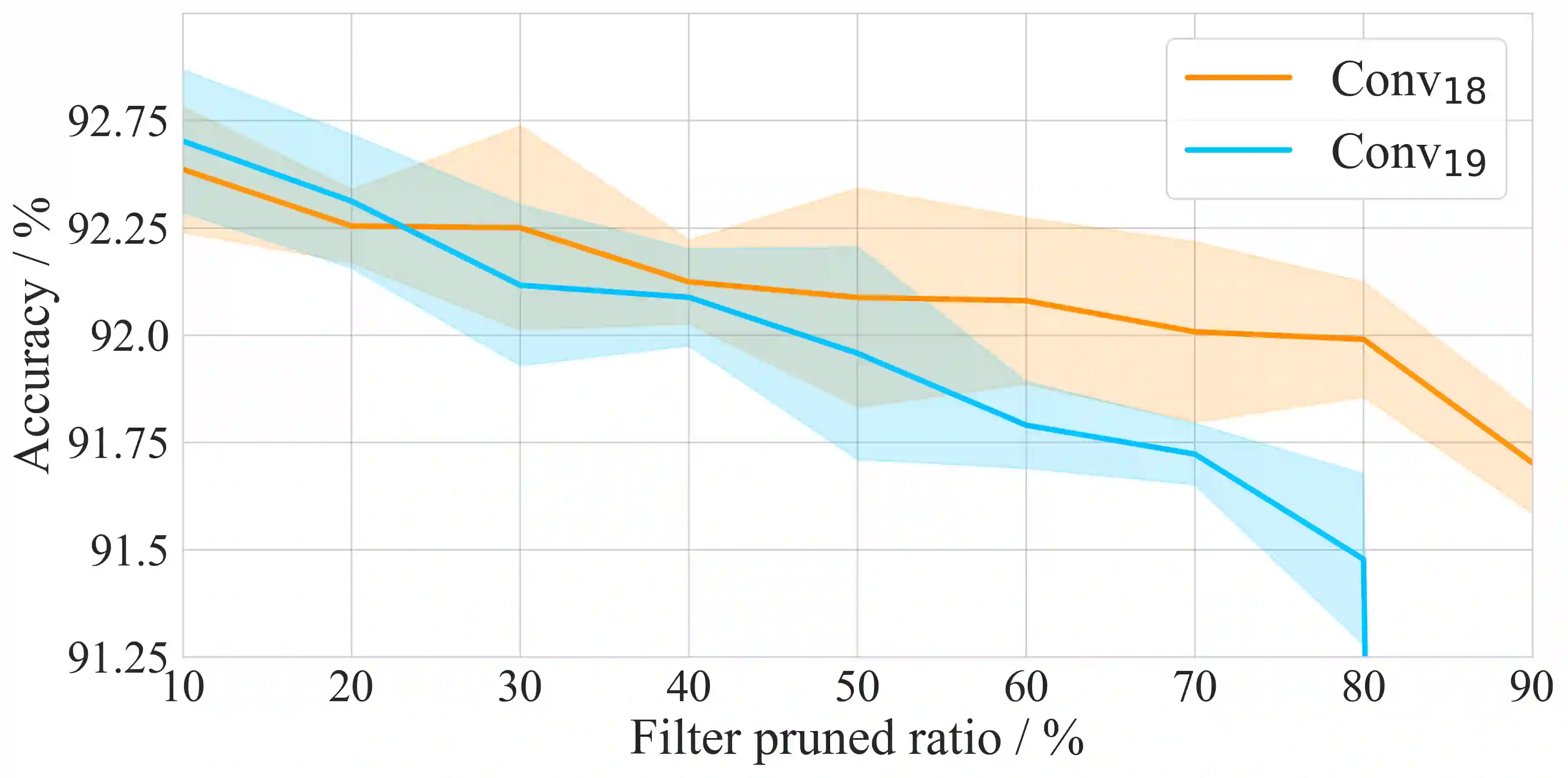

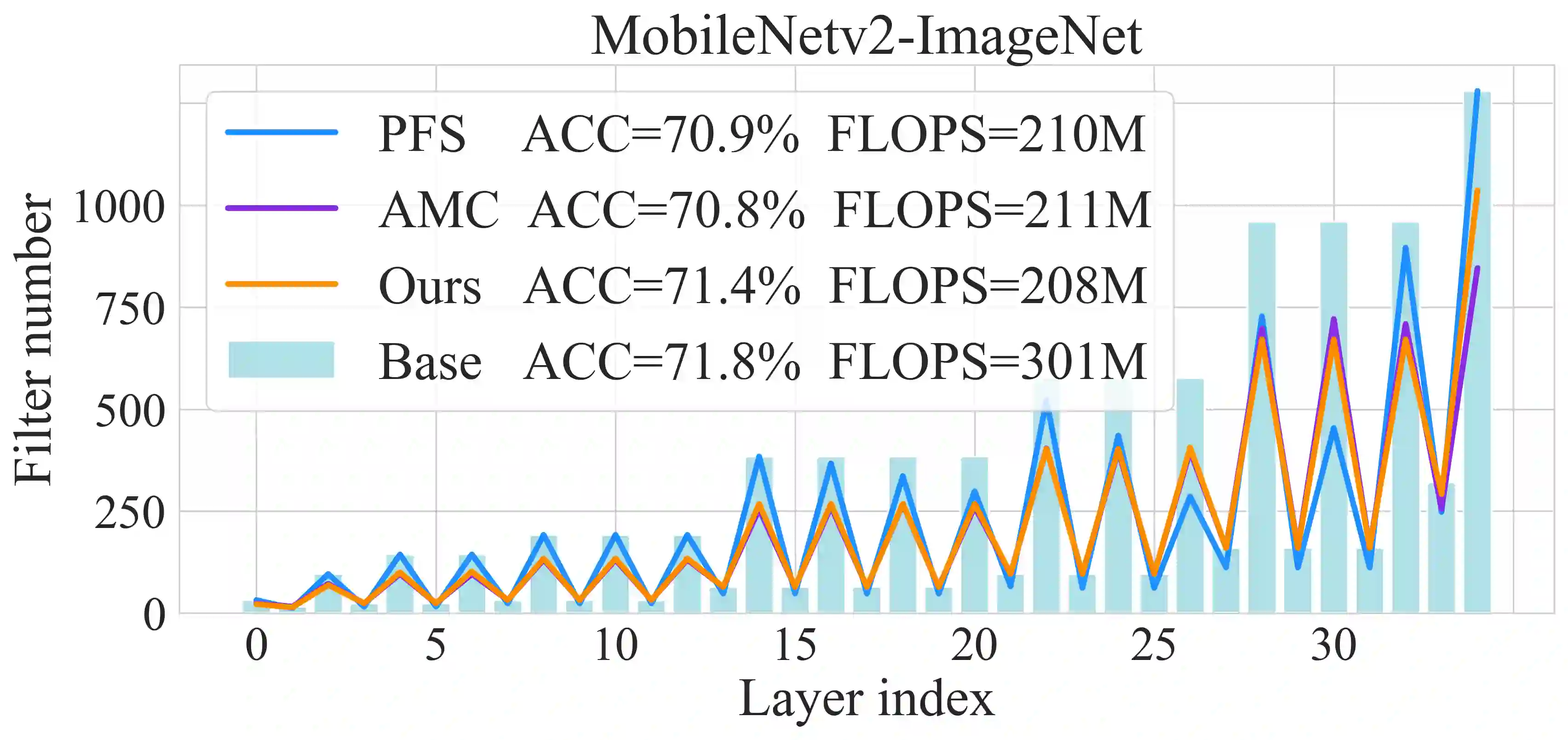

Adaptive network pruning has recently drawn significant attention for its ability to identify the importance and redundancy of layers and filters and to customize a suitable pruning solution. However, it remains unsatisfactory: current adaptive pruning methods rely mostly on an additional monitor to score layer and filter importance, and thus face high complexity and weak interpretability. To tackle these issues, we study the weight reconstruction process in the iterative prune-train procedure and propose a Protective Self-Adaptive Pruning (PSAP) method. First, PSAP uses its own information, the weight sparsity ratio, to adaptively adjust the pruning ratio of each layer before each pruning step. Second, we propose a protective reconstruction mechanism that supervises gradients to prevent important filters from being pruned and to avoid unrecoverable information loss. PSAP is simple and explicit because it depends only on the weights and gradients of the model itself, rather than requiring an additional monitor as in earlier works. Experiments on ImageNet and CIFAR-10 demonstrate its superiority over current works in both accuracy and compression ratio, especially when compressing at a high ratio or pruning from scratch.
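The abstract gives no formulas, so the following is only a rough sketch of the two ideas it names, not the PSAP algorithm itself. It assumes that a layer's weight sparsity ratio is the fraction of weights with magnitude below a threshold `eps`, that per-layer pruning ratios are scaled in proportion to that sparsity, and that "protection" means exempting the filters with the largest gradient norm from pruning. The function names, the proportional rule, the 0.9 cap, and `protect_frac` are all hypothetical choices made for illustration.

```python
from typing import Dict, List

Filter = List[float]  # flattened weights (or gradients) of one filter


def sparsity_ratio(filters: List[Filter], eps: float = 1e-3) -> float:
    """Fraction of a layer's weights whose magnitude is below eps (assumed definition)."""
    weights = [w for f in filters for w in f]
    return sum(abs(w) < eps for w in weights) / len(weights)


def layer_prune_ratios(layers: Dict[str, List[Filter]], target: float,
                       eps: float = 1e-3) -> Dict[str, float]:
    """Assumed rule: sparser layers get a proportionally larger pruning ratio.

    Ratios are scaled around `target` by sparsity / mean sparsity and capped at 0.9.
    """
    sparsity = {name: sparsity_ratio(fs, eps) for name, fs in layers.items()}
    mean = sum(sparsity.values()) / len(sparsity)
    if mean == 0:  # no layer is sparse yet: fall back to a uniform ratio
        return {name: target for name in layers}
    return {name: min(0.9, target * s / mean) for name, s in sparsity.items()}


def select_filters_to_prune(filters: List[Filter], grads: List[Filter],
                            ratio: float, protect_frac: float = 0.25) -> List[int]:
    """Pick the smallest-L1 filters, skipping those with the largest gradient norm.

    The gradient-based exemption is a stand-in for the protective mechanism.
    """
    n = len(filters)
    l1 = [sum(abs(w) for w in f) for f in filters]
    gnorm = [sum(abs(g) for g in gf) for gf in grads]
    n_protect = int(protect_frac * n)
    by_grad = sorted(range(n), key=lambda i: gnorm[i], reverse=True)
    protected = set(by_grad[:n_protect])
    candidates = [i for i in sorted(range(n), key=lambda i: l1[i])
                  if i not in protected]
    return sorted(candidates[: int(ratio * n)])


# Toy data: conv2 has many near-zero weights, conv1 has few.
layers = {
    "conv1": [[0.5, -0.4], [0.3, 0.2], [0.0, 0.6], [0.7, -0.1]],
    "conv2": [[0.0, 0.0], [0.0, 0.2], [0.0005, 0.0], [0.9, 0.0]],
}
# Filter 2 of conv2 has tiny weights but a large gradient.
conv2_grads = [[0.0, 0.0], [0.01, 0.0], [0.5, 0.4], [0.1, 0.1]]

ratios = layer_prune_ratios(layers, target=0.5)
pruned = select_filters_to_prune(layers["conv2"], conv2_grads, ratio=0.5)
unprotected = select_filters_to_prune(layers["conv2"], conv2_grads,
                                      ratio=0.5, protect_frac=0.0)
```

In this toy setup the sparser `conv2` receives a larger pruning ratio than `conv1`. Filter 2 of `conv2` has small weights but a large gradient, so it is kept when protection is enabled and pruned when `protect_frac=0.0`. A real implementation would operate on framework tensors inside the prune-train loop.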
