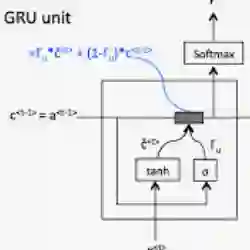

Gated recurrent units (GRUs) are specialized memory elements for building recurrent neural networks. Despite their incredible success on various tasks, including extracting dynamics underlying neural data, little is understood about the specific dynamics representable in a GRU network. As a result, it is difficult to know a priori how well a GRU network will perform on a given task, or how capable it is of mimicking the underlying behavior of its biological counterparts. Using a continuous-time analysis, we gain intuition into the inner workings of GRU networks. We restrict our presentation to low dimensions, allowing for a comprehensive visualization. We found a surprisingly rich repertoire of dynamical features that includes stable limit cycles (nonlinear oscillations), multi-stable dynamics with various topologies, and homoclinic bifurcations. At the same time, we were unable to train GRU networks to produce continuous attractors, which are hypothesized to exist in biological neural networks. We contextualize the usefulness of the different kinds of observed dynamics and support our claims experimentally.
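To make the continuous-time view concrete, here is a minimal sketch, not the authors' code. It assumes the update convention `h_new = z * h + (1 - z) * h_tilde` (as in PyTorch; the original GRU paper swaps the roles of `z` and `1 - z`) and assumes zero external input. Under these assumptions, one GRU step can be rewritten as `h_new - h = (1 - z) * (h_tilde - h)`, which reads as an Euler step of the ODE `dh/dt = (1 - z) * (h_tilde - h)`. The weight names (`U_z`, `U_r`, `U_h`, biases) and the chosen values are hypothetical. They give each of two units a self-excitation of 3, which produces a multi-stable system with one stable fixed point per quadrant.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_vector_field(h, U_z, b_z, U_r, b_r, U_h, b_h):
    """dh/dt of an input-free continuous-time GRU (assumed convention).

    Assumes h_new = z * h + (1 - z) * h_tilde, so that
    h_new - h = (1 - z) * (h_tilde - h).
    """
    z = sigmoid(U_z @ h + b_z)               # update gate
    r = sigmoid(U_r @ h + b_r)               # reset gate
    h_tilde = np.tanh(U_h @ (r * h) + b_h)   # candidate state
    return (1.0 - z) * (h_tilde - h)


def simulate(h0, params, dt=0.05, steps=2000):
    """Forward-Euler integration; dt = 1 recovers the discrete GRU update."""
    h = np.asarray(h0, dtype=float)
    for _ in range(steps):
        h = h + dt * gru_vector_field(h, *params)
    return h


d = 2
zeros_m = np.zeros((d, d))
zeros_v = np.zeros(d)
# Hypothetical weights: no recurrent input to the gates (z = r = 0.5),
# and a self-excitation of 3 on each unit in the candidate-state weights.
params = (zeros_m, zeros_v, zeros_m, zeros_v, 3.0 * np.eye(d), zeros_v)

h_final = simulate([0.1, -0.2], params)
print(h_final)
```

With these weights, each coordinate obeys `dh/dt = 0.5 * (tanh(1.5 * h) - h)`. The origin is therefore an unstable fixed point, and starting from `[0.1, -0.2]` the state should settle near `(h*, -h*)` with `h*` roughly 0.86. Initial conditions in other quadrants select the other three stable fixed points. Choosing `dt = 1` in `simulate` makes each Euler step identical to a discrete GRU update, which is why the continuous-time system can be read off the discrete one.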
