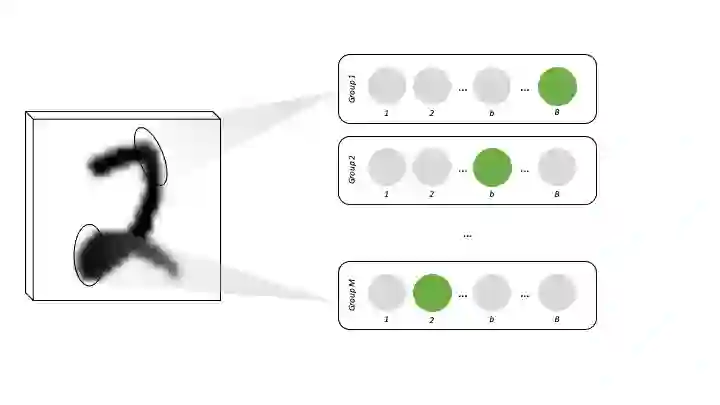

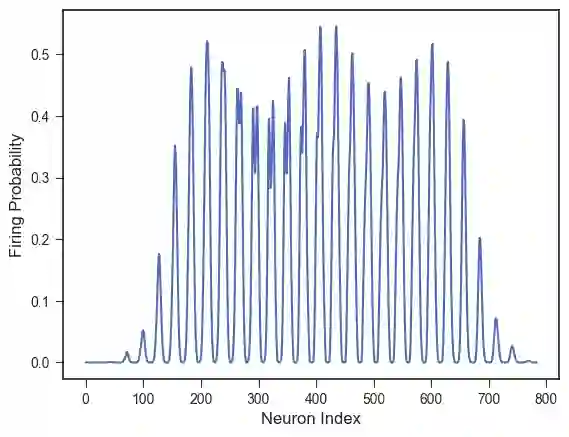

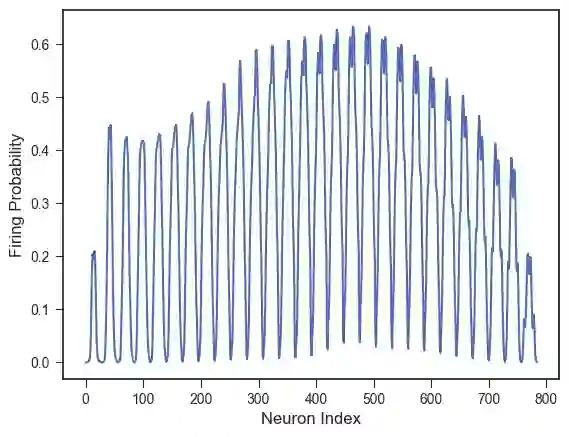

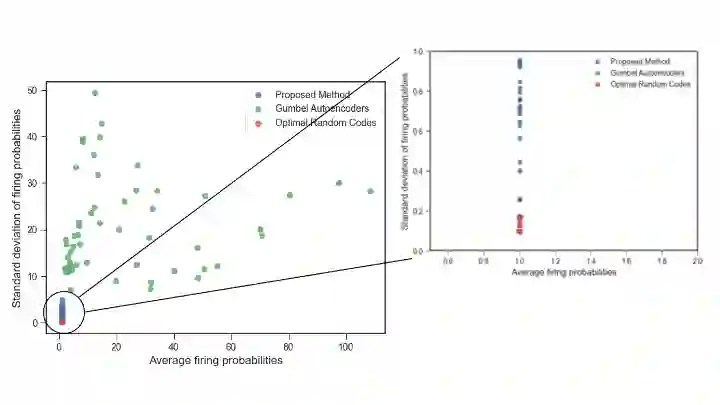

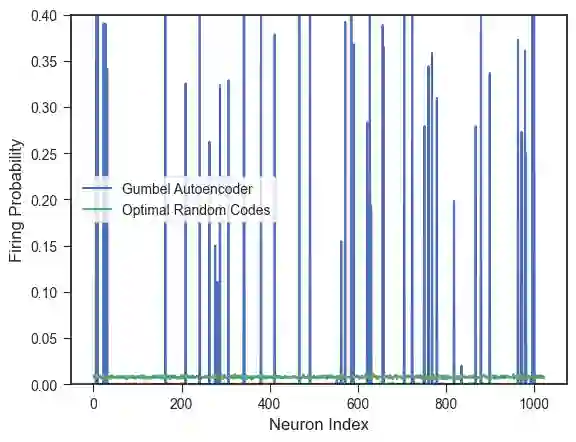

One of the most well-established principles of brain function, Hebbian learning, has led to the theoretical concept of neural assemblies, on which many interesting brain theories have been built. Palm's work implements this concept through binary associative memory, in a model that not only has wide cognitive explanatory power but also makes neuroscientific predictions. Yet associative memory works only with logarithmically sparse representations, which makes it extremely difficult to apply the model to real data. We propose a biologically plausible network that encodes images into codes suitable for associative memory. It is organized into groups of neurons that specialize in local receptive fields and learn through a competitive scheme. Auto- and hetero-association experiments on two visual data sets show that our network not only beats sparse coding baselines but also comes close to the performance achieved with optimal random codes.
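The two ingredients named in the abstract, binary associative memory and a competitive encoder with local receptive fields, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the memory follows the classic Willshaw/Palm scheme (binary synapses set by a clipped Hebbian rule, recall by thresholding at the number of active cue units), and the codes use about log2(n) active units out of n, the logarithmic sparsity regime the abstract refers to. `GroupWTAEncoder` is a hypothetical, simplified stand-in for the proposed network (one nearest-prototype winner per patch, with only the winner updated). All names and parameters (`random_sparse_code`, `WillshawMemory`, `GroupWTAEncoder`, `lr`) are illustrative.

```python
import math
import random


def random_sparse_code(n, k, rng):
    """Random binary code with exactly k of n units active (set of indices)."""
    return frozenset(rng.sample(range(n), k))


class WillshawMemory:
    """Binary associative memory with clipped Hebbian learning.

    Patterns are sets of active unit indices; weights[i] is the set of
    output units j whose binary synapse W[i][j] is switched on.
    """

    def __init__(self, n_in, n_out):
        self.n_out = n_out
        self.weights = [set() for _ in range(n_in)]

    def store(self, x, y):
        # Clipped Hebbian rule: W[i][j] <- W[i][j] OR (x_i AND y_j).
        for i in x:
            self.weights[i].update(y)

    def recall(self, x):
        # Dendritic potential s_j = sum_i W[i][j] * x_i.
        potential = [0] * self.n_out
        for i in x:
            for j in self.weights[i]:
                potential[j] += 1
        # Threshold at |x|: exact for complete, noise-free cues.
        theta = len(x)
        return frozenset(j for j, s in enumerate(potential) if s >= theta)


class GroupWTAEncoder:
    """Hypothetical encoder: one group of m competing units per image patch.

    Exactly one unit wins per group, so G patches yield a G-of-(G*m) code.
    """

    def __init__(self, n_groups, units_per_group, patch_dim, rng, lr=0.1):
        self.m = units_per_group
        self.lr = lr
        self.prototypes = [
            [[rng.random() for _ in range(patch_dim)] for _ in range(units_per_group)]
            for _ in range(n_groups)
        ]

    def encode(self, patches, learn=False):
        active = set()
        for g, patch in enumerate(patches):
            protos = self.prototypes[g]
            # Winner-take-all: the unit whose prototype is closest to the patch fires.
            winner = min(
                range(self.m),
                key=lambda u: sum((w - p) ** 2 for w, p in zip(protos[u], patch)),
            )
            if learn:
                # Competitive learning: only the winner moves toward the input.
                protos[winner] = [w + self.lr * (p - w) for w, p in zip(protos[winner], patch)]
            active.add(g * self.m + winner)
        return frozenset(active)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1024
    k = round(math.log2(n))  # logarithmic sparsity: 10 active units out of 1024
    pairs = [(random_sparse_code(n, k, rng), random_sparse_code(n, k, rng)) for _ in range(100)]
    mem = WillshawMemory(n, n)
    for x, y in pairs:
        mem.store(x, y)  # hetero-association; use store(x, x) for auto-association
    exact = sum(mem.recall(x) == y for x, y in pairs)
    print(f"exact hetero-associative recalls: {exact}/{len(pairs)}")
```

In this sketch the number of active units equals the number of groups, so the sparsity of the code is fixed directly by how the image is partitioned into receptive fields. Random codes like those from `random_sparse_code` are easy to store but carry no information about the image. A learned encoder must produce codes that are equally sparse while still reflecting the input.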

翻译:最成熟的大脑原则之一, Hebbian 学习导致神经元构件的理论概念。 在此基础上, 许多有趣的大脑理论已经诞生。 棕榈的作品通过二进制关联记忆实施这一概念, 模型不仅具有广泛的认知解释力, 而且还能做出神经科学预测。 然而, 关联记忆只能与对数稀少的表达方式起作用, 这使得将模型应用到真实数据上极为困难。 我们提议建立一个生物上可信的网络, 将图像编码成适合关联记忆的代码。 它被组织成一组神经元, 专门研究本地的接收领域, 并通过竞争方案学习。 在对两套视觉数据集进行自动和异性关联实验之后, 我们可以得出结论, 我们的网络不仅可以跳过稀疏的编码基线, 而且可以接近使用最佳随机代码实现的性能。