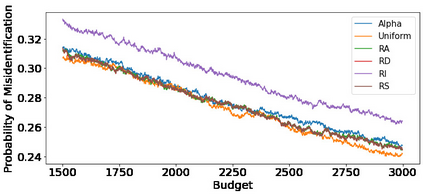

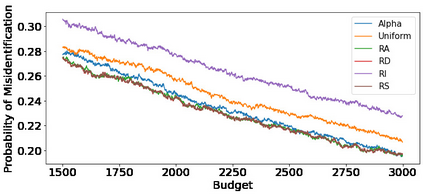

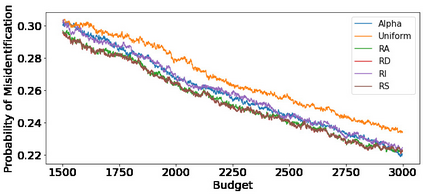

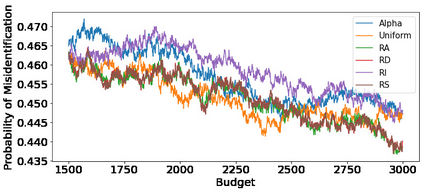

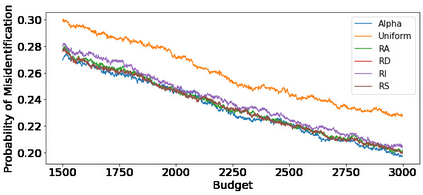

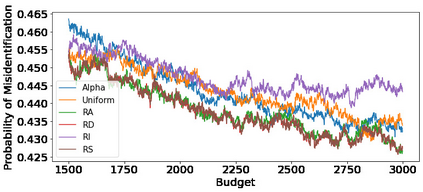

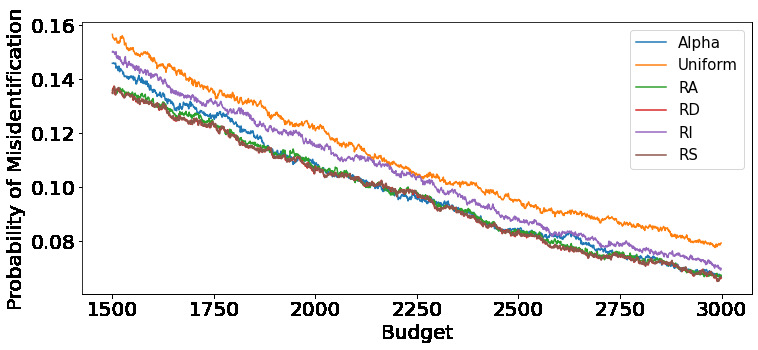

We consider the fixed-budget best arm identification problem in two-armed Gaussian bandits with unknown variances. The tightest lower bound on the complexity and an algorithm whose performance guarantee matches the lower bound have long been open problems when the variances are unknown and when the algorithm is agnostic to the optimal proportion of the arm draws. In this paper, we propose a strategy comprising a sampling rule with randomized sampling (RS) following the estimated target allocation probabilities of arm draws and a recommendation rule using the augmented inverse probability weighting (AIPW) estimator, which is often used in the causal inference literature. We refer to our strategy as the RS-AIPW strategy. In the theoretical analysis, we first derive a large deviation principle for martingales, which can be used when the second moment converges in mean, and apply it to our proposed strategy. Then, we show that the proposed strategy is asymptotically optimal in the sense that the probability of misidentification achieves the lower bound by Kaufmann et al. (2016) when the sample size becomes infinitely large and the gap between the two arms goes to zero.
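The two rules described above can be illustrated with a small simulation. The following is a minimal sketch under stated assumptions, not the authors' implementation. The abstract does not specify the target allocation, so the sketch assumes it is proportional to the estimated standard deviations. The clipping constant `clip`, the fallback values used before an arm has been drawn, and the function name `rs_aipw` are also hypothetical choices made for illustration.

```python
import math
import random


def rs_aipw(means, stds, budget, seed=0, clip=0.05):
    """Simulate one run on a two-armed Gaussian bandit; return the recommended arm (0 or 1)."""
    rng = random.Random(seed)
    n = [0, 0]             # number of draws per arm
    s = [0.0, 0.0]         # sum of rewards per arm
    ss = [0.0, 0.0]        # sum of squared rewards per arm
    aipw_sum = [0.0, 0.0]  # running sum of per-round AIPW scores

    for _ in range(budget):
        # Plug-in estimates use only data observed before the current round.
        mu_hat = [s[a] / n[a] if n[a] > 0 else 0.0 for a in (0, 1)]
        sd_hat = []
        for a in (0, 1):
            if n[a] >= 2:
                var = max(ss[a] / n[a] - mu_hat[a] ** 2, 1e-12)
                sd_hat.append(math.sqrt(var))
            else:
                sd_hat.append(1.0)  # hypothetical fallback before two draws

        # ASSUMPTION: target allocation proportional to estimated std devs,
        # clipped away from 0 and 1 so the inverse weights stay bounded.
        w0 = sd_hat[0] / (sd_hat[0] + sd_hat[1])
        w0 = min(max(w0, clip), 1.0 - clip)
        w = [w0, 1.0 - w0]

        # Sampling rule: draw an arm at random with the estimated probabilities.
        arm = 0 if rng.random() < w[0] else 1
        y = rng.gauss(means[arm], stds[arm])

        # AIPW score: inverse-probability-weighted residual plus plug-in mean.
        for a in (0, 1):
            correction = (y - mu_hat[a]) / w[a] if a == arm else 0.0
            aipw_sum[a] += correction + mu_hat[a]

        n[arm] += 1
        s[arm] += y
        ss[arm] += y * y

    # Recommendation rule: pick the arm with the larger AIPW mean estimate.
    aipw_mean = [aipw_sum[a] / budget for a in (0, 1)]
    return 0 if aipw_mean[0] >= aipw_mean[1] else 1
```

Calling `rs_aipw([1.0, 0.0], [1.0, 2.0], budget=2000)` runs one simulated experiment and returns the index of the recommended arm. Because the sampling probabilities and plug-in means in each round depend only on earlier rounds, the per-round AIPW scores centered at the true mean form a martingale difference sequence, which is the structure the large deviation analysis relies on.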
