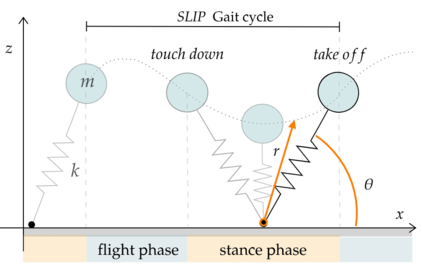

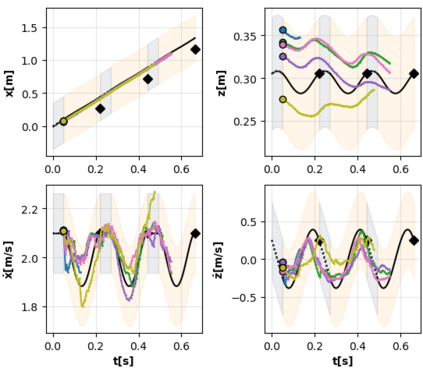

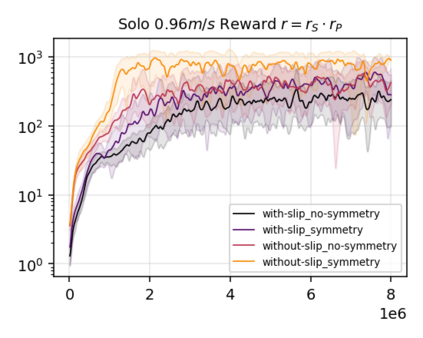

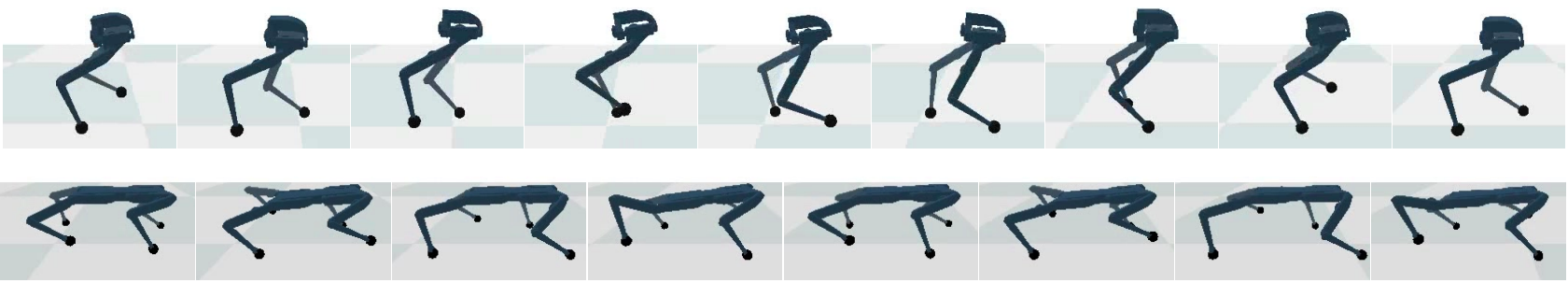

Learning controllers that reproduce legged locomotion in nature has been a long-standing goal in robotics and computer graphics. While yielding promising results, recent approaches are not yet flexible enough to apply to legged systems of different morphologies. This is partly because they often rely on precise motion capture references or elaborate learning environments that ensure the naturalness of the emergent locomotion gaits but prevent generalization. This work proposes a generic approach for ensuring realism in locomotion by guiding the learning process with the spring-loaded inverted pendulum (SLIP) model as a reference. Leveraging the exploration capabilities of Reinforcement Learning (RL), we learn a control policy that fills in the information gap between the template model and the full-body dynamics required to maintain stable and periodic locomotion. The proposed approach can be applied to robots of different sizes and morphologies and adapted to any RL technique and control architecture. We present experimental results showing that, even in a model-free setup and with a simple reactive control architecture, the learned policies can generate realistic and energy-efficient locomotion gaits for a bipedal and a quadrupedal robot. Most importantly, this is achieved without motion capture, without strong constraints on the robot's dynamics or kinematics, and without prescribed limb coordination. We provide supplemental videos for qualitative analysis of the naturalness of the learned gaits.
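
The following sketch makes the idea of a template reference concrete. It integrates the stance phase of a planar spring-loaded inverted pendulum (SLIP), which is a point mass on a massless spring leg whose foot is pinned to the ground. It also defines a centre-of-mass (CoM) tracking reward of the kind an RL policy could be trained against. This is a minimal illustration under stated assumptions, not the authors' formulation. Several choices are illustrative:

- the parameter values, which are roughly human-scale running;
- the semi-implicit Euler integrator;
- the hypothetical `tracking_reward` and its width `sigma`.

The paper's actual reward and reference generation may differ.

```python
import math

# Illustrative, roughly human-scale SLIP parameters (assumed, not taken from the paper).
M = 80.0        # body mass [kg]
K = 20000.0     # leg stiffness [N/m]
L0 = 1.0        # leg rest length [m]
G = 9.81        # gravitational acceleration [m/s^2]


def slip_stance(alpha, vx0, vy0=0.0, dt=1e-4, max_steps=100_000):
    """Integrate the planar SLIP stance phase with the foot pinned at the origin.

    Touchdown occurs with the leg at rest length and angle of attack `alpha`
    (radians, measured from the ground). Semi-implicit Euler is used, and the
    loop stops at lift-off: the leg is back at rest length and extending.
    Returns the list of CoM positions (x, y) and the lift-off velocity.
    """
    x, y = -L0 * math.cos(alpha), L0 * math.sin(alpha)
    vx, vy = vx0, vy0
    traj = [(x, y)]
    for _ in range(max_steps):
        r = math.hypot(x, y)
        f = K * (L0 - r)  # spring force along the leg, positive when compressed
        # Update velocity first, then position (semi-implicit / symplectic Euler).
        vx += (f * x / (r * M)) * dt
        vy += (f * y / (r * M) - G) * dt
        x += vx * dt
        y += vy * dt
        traj.append((x, y))
        if y <= 0.0:
            raise RuntimeError("CoM reached the ground: the template gait failed")
        if math.hypot(x, y) >= L0 and x * vx + y * vy > 0.0:
            break  # lift-off: leg at rest length and moving away from the foot
    return traj, (vx, vy)


def energy(x, y, vx, vy):
    """Total mechanical energy: kinetic + gravitational + elastic (leg compressed only)."""
    r = math.hypot(x, y)
    elastic = 0.5 * K * (L0 - r) ** 2 if r < L0 else 0.0
    return 0.5 * M * (vx ** 2 + vy ** 2) + M * G * y + elastic


def tracking_reward(com, com_ref, sigma=0.05):
    """Hypothetical reward term: 1.0 when the robot CoM coincides with the SLIP
    reference, decaying with the squared distance (sigma in metres)."""
    d2 = (com[0] - com_ref[0]) ** 2 + (com[1] - com_ref[1]) ** 2
    return math.exp(-d2 / sigma ** 2)


# Usage: one stance phase at 5 m/s with a 68 degree angle of attack.
traj, (vx_lo, vy_lo) = slip_stance(math.radians(68.0), vx0=5.0)
x_lo, y_lo = traj[-1]
print(f"stance steps: {len(traj) - 1}, lift-off CoM: ({x_lo:.3f}, {y_lo:.3f})")
print(f"reward for a 2 cm height error: {tracking_reward((x_lo, y_lo + 0.02), (x_lo, y_lo)):.3f}")
```

The SLIP is conservative, so the energy at lift-off should match the touchdown energy up to integration error. This gives a quick sanity check on the integrator. A complete template reference would alternate this stance phase with a ballistic flight phase.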
