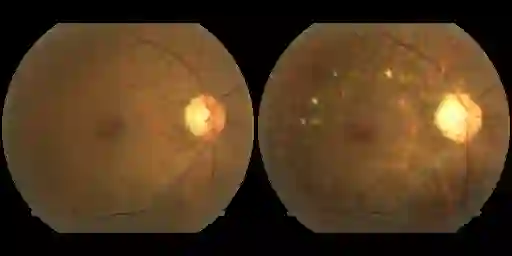

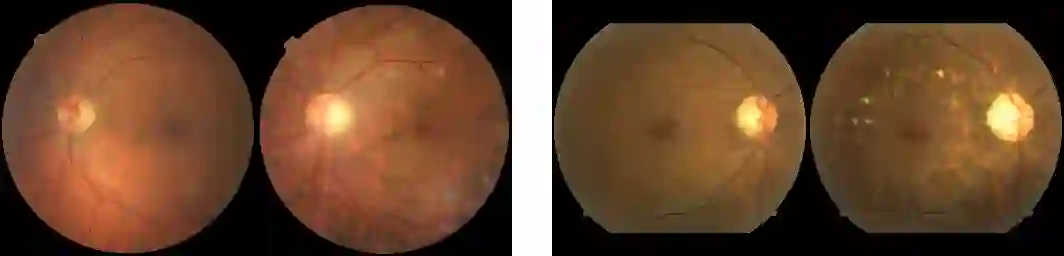

We propose a novel method for enforcing AI fairness with respect to protected or sensitive factors. The method uses a dual strategy, training and representation alteration (TARA), to mitigate prominent causes of AI bias. It combines: a) representation learning alteration via adversarial independence, which suppresses the bias-inducing dependence of the data representation on protected factors; and b) training set alteration via intelligent augmentation, which addresses bias-causing data imbalance by using generative models that allow fine control of sensitive factors related to underrepresented populations via domain adaptation and latent space manipulation. When testing our methods on image analytics, experiments demonstrate that TARA significantly or fully debiases baseline models while outperforming competing debiasing methods that have the same amount of information, e.g., with (% overall accuracy, % accuracy gap) = (78.8, 0.5) vs. the baseline method's score of (71.8, 10.5) for EyePACS, and (73.7, 11.8) vs. (69.1, 21.7) for CelebA. Furthermore, recognizing certain limitations in current metrics used for assessing debiasing performance, we propose novel conjunctive debiasing metrics. Our experiments also demonstrate the ability of these novel metrics to assess the Pareto efficiency of the proposed methods.
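
The abstract does not say how the augmentation budget is chosen. As a minimal sketch, assuming the goal is to top up every (protected factor, label) cell to the size of the largest cell, the number of synthetic samples a generative model would need to produce per cell can be computed as below. The helper `augmentation_plan` and the group names are hypothetical; the generative model itself (domain adaptation, latent space manipulation) is not shown.

```python
from collections import Counter
from itertools import product


def augmentation_plan(samples, protected_values, labels):
    """Count synthetic samples needed per (protected value, label) cell.

    Hypothetical helper: assumes each cell is topped up to the size of the
    largest cell. All cells are enumerated explicitly from
    `protected_values` x `labels`, so cells with zero real samples are included.
    """
    counts = Counter(samples)  # missing cells count as 0
    cells = list(product(protected_values, labels))
    target = max(counts[cell] for cell in cells)
    # Only cells below the target need synthetic samples.
    return {cell: target - counts[cell] for cell in cells if counts[cell] < target}


# Toy, imbalanced training set of (protected value, label) pairs.
data = (
    [("group_a", 0)] * 50
    + [("group_a", 1)] * 30
    + [("group_b", 0)] * 10
    + [("group_b", 1)] * 4
)
plan = augmentation_plan(data, ["group_a", "group_b"], [0, 1])
print(plan)  # {('group_a', 1): 20, ('group_b', 0): 40, ('group_b', 1): 46}
```

Balancing to the largest cell is only one possible policy; a real pipeline might instead target a fixed ratio or stop once a fairness metric stops improving.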

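
The (% overall accuracy, % accuracy gap) pairs quoted in the abstract can be computed from per-sample predictions. The sketch below assumes the accuracy gap is the difference between the highest and lowest per-group accuracy; the paper's exact definition, and its proposed conjunctive metrics, may differ. The function `accuracy_and_gap` and the toy data are hypothetical.

```python
from collections import defaultdict


def accuracy_and_gap(y_true, y_pred, groups):
    """Return (overall accuracy %, accuracy gap %, per-group accuracy %).

    Assumption: gap = max per-group accuracy - min per-group accuracy.
    """
    if not y_true or not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    overall = 100.0 * sum(correct.values()) / len(y_true)
    per_group = {g: 100.0 * correct[g] / total[g] for g in total}
    gap = max(per_group.values()) - min(per_group.values())
    return overall, gap, per_group


# Toy example with two protected groups, "a" and "b".
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
overall, gap, per_group = accuracy_and_gap(y_true, y_pred, groups)
print(overall, gap, per_group)  # 62.5 25.0 {'a': 75.0, 'b': 50.0}
```

A low gap alone can be achieved by degrading every group equally, which is one reason to report it jointly with overall accuracy.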
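
Pareto efficiency in this setting can be illustrated with a dominance check over (accuracy, gap) pairs, assuming higher accuracy and lower gap are both preferred. The helper `pareto_front` is hypothetical; the scores are the EyePACS and CelebA figures quoted in the abstract, and each dataset is evaluated separately because scores across datasets are not comparable.

```python
def pareto_front(results):
    """Return the sorted names of non-dominated (accuracy, gap) entries.

    Assumption: higher accuracy and lower gap are both better.
    `results` maps a method name to an (accuracy, gap) tuple.
    """

    def dominates(a, b):
        # a is at least as good on both criteria and strictly better on one.
        return a[0] >= b[0] and a[1] <= b[1] and a != b

    return sorted(
        name
        for name, score in results.items()
        if not any(dominates(other, score) for other in results.values())
    )


# (% overall accuracy, % accuracy gap) as reported in the abstract.
eyepacs = {"baseline": (71.8, 10.5), "TARA": (78.8, 0.5)}
celeba = {"baseline": (69.1, 21.7), "TARA": (73.7, 11.8)}
print(pareto_front(eyepacs))  # ['TARA']
print(pareto_front(celeba))   # ['TARA']
```

With only two methods per dataset, the check reduces to confirming that TARA dominates the baseline; with more competing methods, the same function returns the full set of non-dominated trade-offs.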