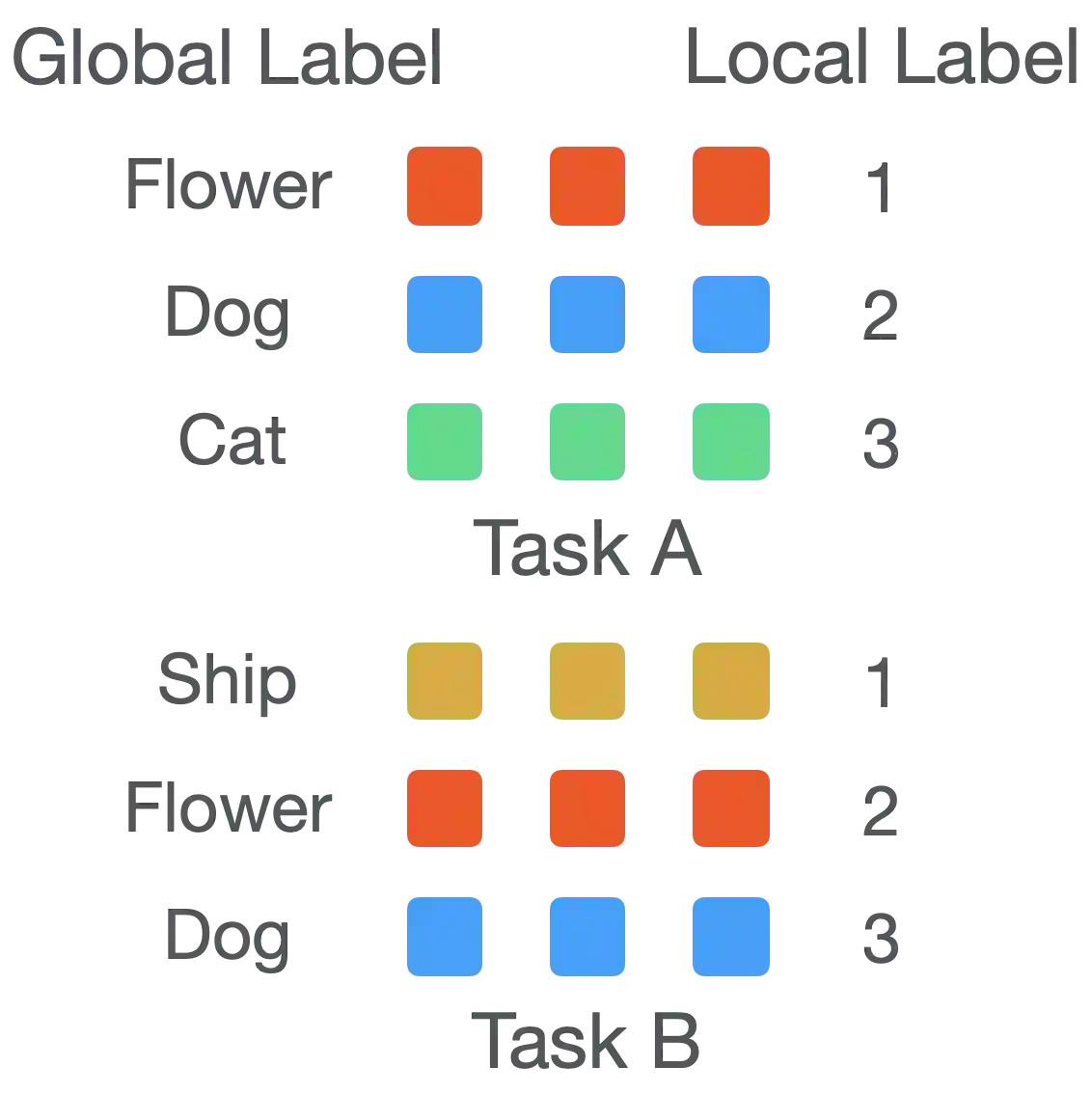

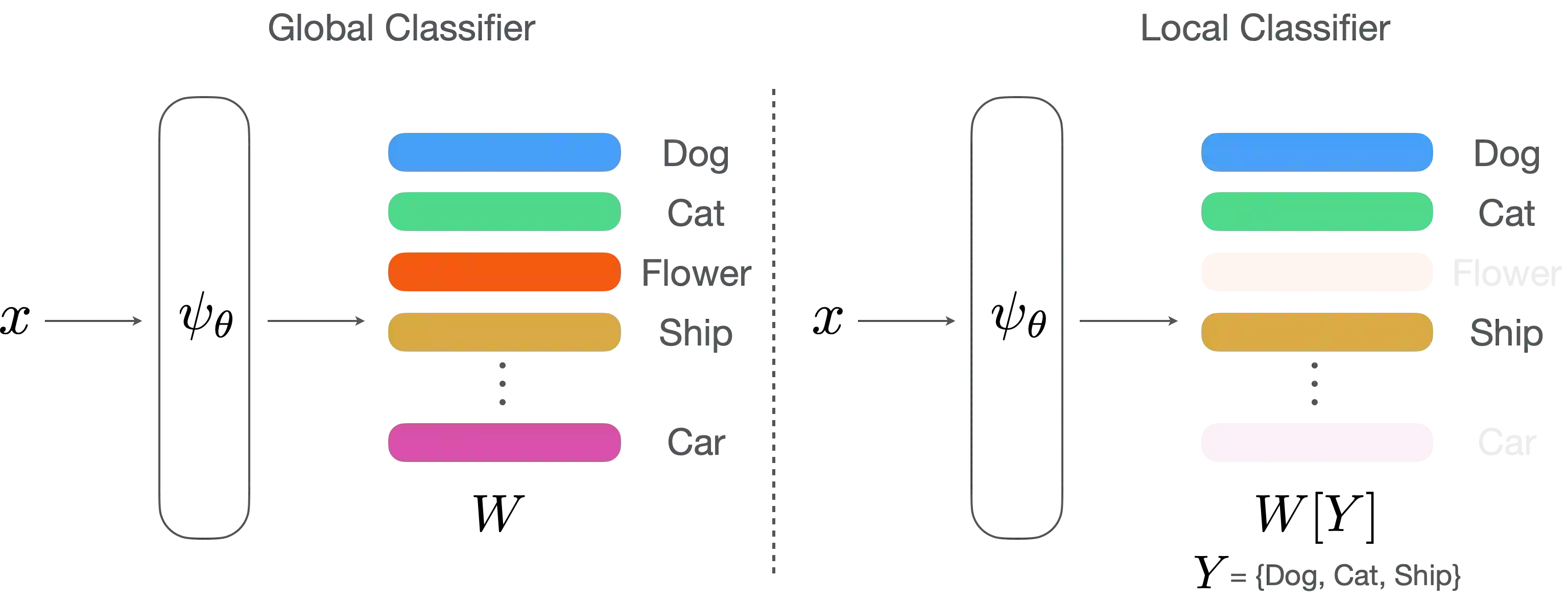

Few-shot learning (FSL) is a central problem in meta-learning, where learners must quickly adapt to new tasks given limited training data. Surprisingly, recent works have outperformed meta-learning methods tailored to FSL by casting it as standard supervised learning to jointly classify all classes shared across tasks. However, this approach violates the standard FSL setting by requiring global labels shared across tasks, which are often unavailable in practice. In this paper, we show why solving FSL via standard classification is theoretically advantageous. This motivates us to propose Meta Label Learning (MeLa), a novel algorithm that infers global labels and obtains robust few-shot models via standard classification. Empirically, we demonstrate that MeLa outperforms meta-learning competitors and is comparable to the oracle setting where ground truth labels are given. We provide extensive ablation studies to highlight the key properties of the proposed strategy.
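
The abstract does not say how MeLa actually infers global labels. The sketch below is only a rough illustration of the general idea, under several stated assumptions. Each sample is assumed to already have a fixed embedding from some hypothetical pretrained feature extractor. Task-local classes are merged into global classes by greedily matching their class prototypes (mean embeddings) to existing global centroids under a distance threshold. This is a generic clustering heuristic, not the paper's algorithm, and the names `infer_global_labels` and `threshold` are hypothetical. Once every sample has a global label, all tasks can be pooled into one dataset and trained with an ordinary classifier. That pooled setting is the one the abstract argues is advantageous.

```python
import math
from typing import Dict, List, Optional, Set, Tuple

Vector = List[float]
Task = List[Tuple[Vector, int]]  # (embedding, task-local label) pairs


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def infer_global_labels(tasks: List[Task], threshold: float) -> Tuple[List[Tuple[Vector, int]], int]:
    """Hypothetical greedy heuristic (not MeLa itself): map task-local classes to global classes.

    A local class joins the nearest unclaimed global centroid if its prototype lies
    within `threshold`. Otherwise it starts a new global class. Within one task,
    two local classes are never merged into the same global class.
    """
    centroids: List[Vector] = []  # running mean of prototypes per global class
    counts: List[int] = []        # number of local classes averaged into each centroid
    pooled: List[Tuple[Vector, int]] = []

    for task in tasks:
        # Group this task's embeddings by their local label.
        by_label: Dict[int, List[Vector]] = {}
        for emb, local in task:
            by_label.setdefault(local, []).append(emb)

        used: Set[int] = set()  # global classes already claimed in this task
        for embs in by_label.values():
            proto = mean(embs)
            best: Optional[int] = None
            best_d = math.inf
            for g, c in enumerate(centroids):
                if g in used:
                    continue
                d = math.dist(proto, c)
                if d < best_d:
                    best, best_d = g, d

            if best is None or best_d > threshold:
                # No close-enough unclaimed centroid: create a new global class.
                centroids.append(proto)
                counts.append(1)
                best = len(centroids) - 1
            else:
                # Merge: update the centroid as a running mean of prototypes.
                k = counts[best]
                centroids[best] = [(c * k + p) / (k + 1) for c, p in zip(centroids[best], proto)]
                counts[best] = k + 1

            used.add(best)
            pooled.extend((emb, best) for emb in embs)

    return pooled, len(centroids)


# Toy example: local labels are NOT consistent across tasks (task 2 swaps them).
tasks = [
    [([0.0, 0.0], 0), ([0.2, 0.0], 0), ([5.0, 5.0], 1), ([5.2, 5.0], 1)],
    [([5.1, 4.9], 0), ([0.1, 0.1], 1)],
    [([10.0, 0.0], 0)],
]
pooled, num_global = infer_global_labels(tasks, threshold=1.0)
labels = [g for _, g in pooled]
print(num_global, labels)  # 3 [0, 0, 1, 1, 1, 0, 2]
```

In the toy run, the two tasks use swapped local labels, but their samples still receive the same global labels. A third, unseen class gets a new global label. Because the assignment is greedy, the result depends on task order and on `threshold`. The quality of the merge also depends entirely on the embedding, which this sketch takes as given.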
