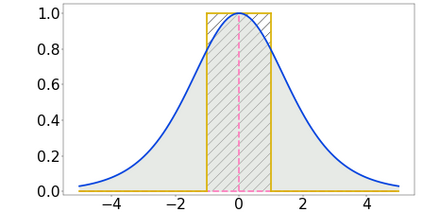

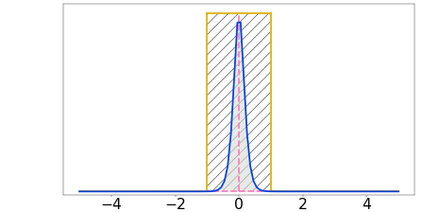

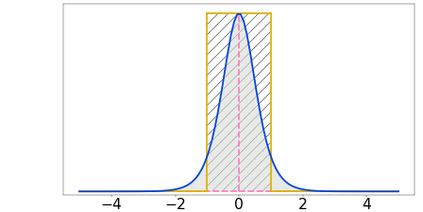

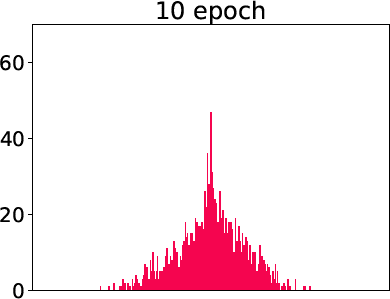

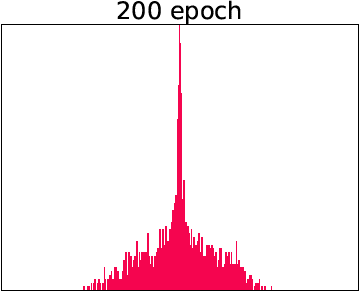

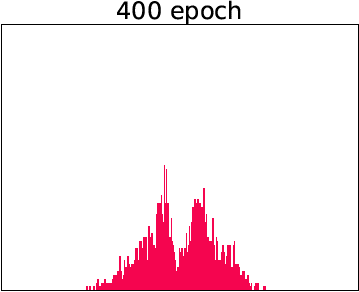

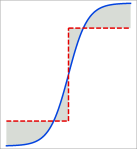

Weight and activation binarization is an effective approach to deep neural network compression and can accelerate inference by leveraging bitwise operations. Although many binarization methods have improved model accuracy by minimizing the quantization error in forward propagation, a noticeable performance gap remains between binarized models and their full-precision counterparts. Our empirical study indicates that quantization causes information loss in both forward and backward propagation, and that this loss is the bottleneck for training accurate binary neural networks. To address these issues, we propose an Information Retention Network (IR-Net) that retains the information contained in the forward activations and backward gradients. IR-Net relies on two main technical contributions: (1) Libra Parameter Binarization (Libra-PB), which simultaneously minimizes both the quantization error and the information loss of parameters by balancing and standardizing the weights in forward propagation; and (2) the Error Decay Estimator (EDE), which minimizes the information loss of gradients by gradually approximating the sign function in backward propagation, jointly considering the updating ability and the accuracy of the gradients. We are the first to investigate both the forward and backward processes of binary networks from a unified information perspective, which provides new insight into the mechanism of network binarization. Comprehensive experiments with various network structures on the CIFAR-10 and ImageNet datasets demonstrate that IR-Net consistently outperforms state-of-the-art quantization methods.
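
The forward-pass idea behind Libra-PB can be illustrated with a minimal pure-Python sketch. It assumes that "balanced" means subtracting the mean and "standardized" means dividing by the standard deviation, and that the binary weights are rescaled by a power-of-two factor `2**s` so the scaling could be done with a bit shift. The scale rule and the function names `libra_pb_binarize` and `sign_entropy` are hypothetical and are not taken from the abstract. The entropy helper shows why balancing matters for information: weights that are all positive binarize to a constant code that carries 0 bits, while balanced weights split roughly evenly between +1 and -1.

```python
import math
import statistics


def libra_pb_binarize(weights):
    """Balance, standardize, then binarize a flat list of weights.

    Sketch assumptions: balancing = zero mean, standardizing = unit
    (population) standard deviation, and a hypothetical power-of-two
    scale 2**s approximating the mean absolute standardized weight.
    """
    n = len(weights)
    mean = statistics.fmean(weights)
    balanced = [w - mean for w in weights]            # zero-mean weights
    std = statistics.pstdev(balanced)
    if std == 0:
        raise ValueError("weights must not all be equal")
    standardized = [w / std for w in balanced]        # unit-variance weights
    signs = [1.0 if w >= 0 else -1.0 for w in standardized]  # sign(0) -> +1
    # Hypothetical power-of-two scale, so rescaling could be a bit shift.
    s = round(math.log2(sum(abs(w) for w in standardized) / n))
    return [b * 2.0 ** s for b in signs], s


def sign_entropy(binary):
    """Entropy in bits of the +/- pattern of a binarized weight vector."""
    p = sum(1 for b in binary if b > 0) / len(binary)
    if p in (0.0, 1.0):
        return 0.0                                    # constant code: no information
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
```

For example, `libra_pb_binarize([0.5, 0.7, 0.9, 1.1])` returns `([-1.0, -1.0, 1.0, 1.0], 0)`, a code with 1 bit of entropy, whereas taking the sign of the raw, all-positive weights gives four +1 values and 0 bits.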

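
The backward-pass idea behind EDE can be sketched in the same spirit. This sketch assumes that the forward pass keeps the exact sign function, while the backward pass uses the derivative of a surrogate `k * tanh(t * x)`. The sharpness `t` is assumed to grow geometrically from a hypothetical `t_min = 0.1` to `t_max = 10` as training progresses, with `k = max(1/t, 1)`. Under these assumptions, the surrogate gradient early in training stays close to 1 over a wide range of inputs, which preserves the ability to update weights. Late in training it concentrates around zero and approaches the shape of the true sign derivative. All names and default values here are illustrative assumptions.

```python
import math


def ede_schedule(progress, t_min=0.1, t_max=10.0):
    """Return (k, t) for training progress in [0, 1].

    Assumed schedule: t grows geometrically from t_min to t_max, and
    k = max(1/t, 1) keeps the surrogate gradient near 1 while t is small.
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must lie in [0, 1]")
    t = t_min * 10 ** (progress * math.log10(t_max / t_min))
    k = max(1.0 / t, 1.0)
    return k, t


def ede_forward(x):
    """Forward pass keeps the exact sign function (sign(0) mapped to +1)."""
    return 1.0 if x >= 0 else -1.0


def ede_grad(x, progress):
    """Backward pass: derivative of the surrogate k * tanh(t * x) at x."""
    k, t = ede_schedule(progress)
    return k * t * (1.0 - math.tanh(t * x) ** 2)
```

At `progress=0.0` the gradient at `x = 1.0` is about 0.99. At `progress=1.0` it drops below `1e-6`, while the gradient at `x = 0.0` rises from 1.0 to 10.0.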