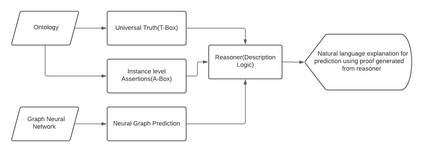

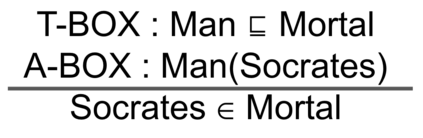

Explaining Graph Neural Network (GNN) predictions to end users of AI applications in easily understandable terms remains an unsolved problem. In particular, we lack well-developed methods for automatically evaluating explanations in ways that are closer to how users actually consume them. Based on recent application trends and our own experience with real-world problems, we propose automatic evaluation approaches for GNN explanations.

Related content

Arxiv

September 3, 2019