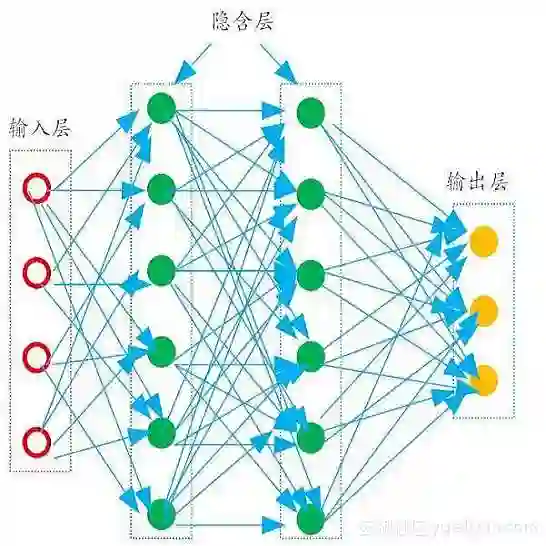

We propose an analog implementation of the transcendental activation function leveraging two spin-orbit torque magnetoresistive random-access memory (SOT-MRAM) devices and a CMOS inverter. The proposed analog neuron circuit consumes 1.8-27x less power and occupies 2.5-4931x less area than state-of-the-art analog and digital implementations. Moreover, the developed neuron can be readily integrated with memristive crossbars without requiring any intermediate signal conversion units. Architecture-level analyses show that a fully analog in-memory computing (IMC) circuit that uses our SOT-MRAM neuron along with an SOT-MRAM-based crossbar can achieve more than 1.1x, 12x, and 13.3x reductions in power, latency, and energy, respectively, compared to a mixed-signal implementation with analog memristive crossbars and digital neurons. Finally, through cross-layer analyses, we provide a guide on how varying the device-level parameters in our neuron can affect the accuracy of a multilayer perceptron (MLP) for MNIST classification.
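As a quick consistency check on the architecture-level figures, energy is the product of power and latency, so the energy reduction should be roughly the product of the power and latency reductions. The sketch below uses only the rounded factors quoted above and assumes average power over the same operation; the function name `energy_reduction` is illustrative and does not come from the paper.

```python
def energy_reduction(power_reduction: float, latency_reduction: float) -> float:
    """Energy = power x latency, so the two reduction factors multiply.

    Assumption: both factors refer to the same workload, with power
    taken as the average over the operation's latency.
    """
    return power_reduction * latency_reduction


# Rounded factors quoted in the abstract ("more than" 1.1x and 12x).
estimate = energy_reduction(1.1, 12.0)
print(f"estimated energy reduction: {estimate:.1f}x")
```

The estimate comes out at about 13.2x, close to the reported 13.3x. The small gap is plausibly due to rounding, since the power and latency factors are quoted as lower bounds.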
