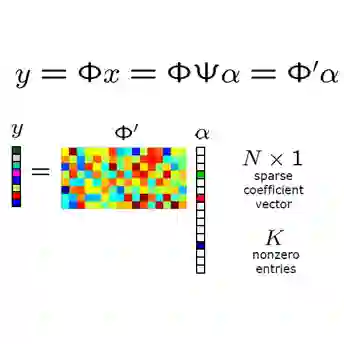

Existing deep compressive sensing (CS) methods either ignore adaptive online optimization or depend on a costly iterative optimizer during reconstruction. This work explores a novel image CS framework with a recurrent-residual structural constraint, termed R$^2$CS-NET. The R$^2$CS-NET first progressively optimizes the acquired samplings through a novel recurrent neural network. A cascaded residual convolutional network then fully reconstructs the image from the optimized latent representation. As the first deep CS framework to efficiently incorporate adaptive online optimization, the R$^2$CS-NET integrates the robustness of online optimization with the efficiency and nonlinear capacity of deep learning methods. Signal correlation is addressed through the network architecture. Its adaptive sensing nature further makes it an ideal candidate for color image CS, where it can leverage channel correlation. Numerical experiments verify that the proposed recurrent latent optimization design not only fulfills the adaptation motivation but also outperforms the classic long short-term memory (LSTM) architecture in the same scenario. The overall framework demonstrates hardware implementation feasibility, with leading robustness and generalization capability among existing deep CS benchmarks.
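
The two-stage data flow described above (sample, refine the samplings recurrently, then reconstruct with cascaded residual blocks) can be illustrated with a minimal, dependency-free sketch. This is not the authors' architecture: the function names, the tanh residual update, the dense matrices standing in for convolutions, and all sizes are assumptions chosen for illustration, and the weights are random rather than trained, so only the shapes and the order of operations are meaningful.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def random_matrix(rows, cols, scale, rng):
    """Gaussian random matrix; stands in for trained weights (assumption)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def sample(phi, x):
    """Compressive sampling y = Phi x, with fewer measurements than pixels."""
    return matvec(phi, x)


def recurrent_refine(y, W, U, steps):
    """Progressively refine a latent state, starting from the samplings y.

    The same weights W and U are reused at every step (the recurrent part).
    The update rule h <- h + tanh(W h + U y) is a hypothetical choice.
    """
    h = list(y)
    for _ in range(steps):
        update = [math.tanh(a + b) for a, b in zip(matvec(W, h), matvec(U, y))]
        h = [hi + ui for hi, ui in zip(h, update)]
    return h


def residual_reconstruct(h, P, blocks):
    """Project the latent to image size, then apply cascaded residual blocks.

    Each block computes x <- x + relu(B x); dense matrices replace the
    convolutions of a real network to keep the sketch short.
    """
    x = matvec(P, h)
    for B in blocks:
        x = [xi + max(0.0, bi) for xi, bi in zip(x, matvec(B, x))]
    return x


def demo(n=16, m=4, steps=3, n_blocks=2, seed=0):
    """Run the pipeline on a random 'image' of n pixels with m measurements."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(n)]
    phi = random_matrix(m, n, 1.0 / math.sqrt(m), rng)  # sampling matrix
    W = random_matrix(m, m, 0.1, rng)
    U = random_matrix(m, m, 0.1, rng)
    P = random_matrix(n, m, 0.1, rng)
    blocks = [random_matrix(n, n, 0.1, rng) for _ in range(n_blocks)]
    y = sample(phi, x)
    h = recurrent_refine(y, W, U, steps)
    x_hat = residual_reconstruct(h, P, blocks)
    return y, h, x_hat
```

Calling `demo()` returns the samplings, the refined latent, and the reconstruction. With the default sizes the latent keeps the measurement dimension (4) while the output returns to the image dimension (16), which mirrors the "optimize the samplings first, reconstruct second" ordering of the abstract.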
