【1】Joern Rehder, Janosch Nikolic, Thomas Schneider, Timo Hinzmann, and Roland Siegwart. Extending kalibr: Calibrating the extrinsics of multiple imus and of individual axes. In 2016 IEEE International Conference on Robotics and Automation (ICRA), pages 4304–4311. IEEE, 2016.

[2] Kevin Eckenhoff, Patrick Geneva, Jesse Bloecker, and Guoquan Huang. Multi-camera visual-inertial navigation with online intrinsic and extrinsic calibration. 2019 International Conference on Robotics and Automation (ICRA), pages 3158–3164, 2019.

[3] A. Tedaldi, A. Pretto, and E. Menegatti. A robust and easy to implement method for imu calibration without external equipments. In Proc. of: IEEE International Conference on Robotics and Automation (ICRA), pages 3042–3049, 2014.

[4] A. Pretto and G. Grisetti. Calibration and performance evaluation of low-cost imus. In Proc. of: 20th IMEKO TC4 International Symposium, pages 429–434, 2014.

【5】] Changhao Chen, Stefano Rosa, Yishu Miao, Chris Xiaoxuan Lu, Wei Wu, Andrew Markham, and Niki Trigoni. Selective sensor fusion for neural visual-inertial odometry. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 10542–10551, 2019.

【6】Thomas Schops, Torsten Sattler, and Marc Pollefeys. Bad slam: Bundle adjusted direct rgb-d slam. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

[7] Adam Harmat, Michael Trentini, and Inna Sharf. Multi-camera tracking and mapping for unmanned aerial vehicles in unstructured environments. Journal of Intelligent & Robotic Systems, 78(2):291– 317, 2015.

【8】Steffen Urban and Stefan Hinz. MultiCol-SLAM - a modular real-time multi-camera slam system. arXiv preprint arXiv:1610.07336, 2016

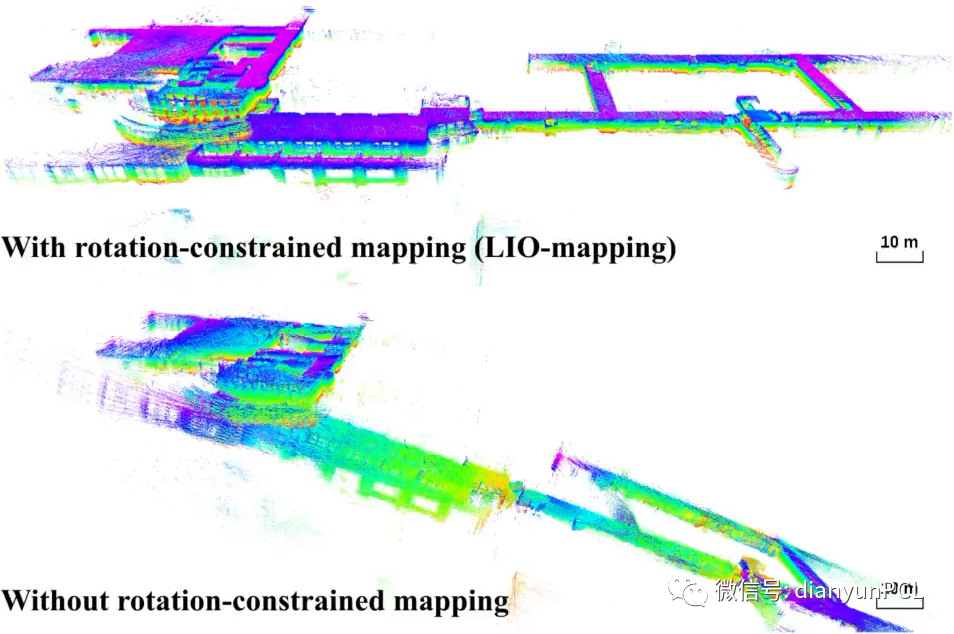

【9】Haoyang Ye, Yuying Chen, and Ming Liu. Tightly coupled 3d lidar inertial odometry and mapping. arXiv preprint arXiv:1904.06993, 2019.

[10] Deyu Yin, Jingbin Liu, Teng Wu, Keke Liu, Juha Hyypp¨a, and Ruizhi Chen. Extrinsic calibration of 2d laser rangefinders using an existing cuboid-shaped corridor as the reference. Sensors, 18(12):4371, 2018.

[1] Shoubin Chen, Jingbin Liu, Teng Wu, Wenchao Huang, Keke Liu, Deyu Yin, Xinlian Liang, Juha Hyypp¨a, and Ruizhi Chen. Extrinsic calibration of 2d laser rangefinders based on a mobile sphere. Remote Sensing, 10(8):1176, 2018.

[12] Jesse Sol Levinson. Automatic laser calibration, mapping, and localization for autonomous vehicles. Stanford University, 2011

【13】Jesse Levinson and Sebastian Thrun. Automatic online calibration of cameras and lasers. In Robotics: Science and Systems, volume 2, 2013.

[14] A. Dhall, K. Chelani, V. Radhakrishnan, and K. M. Krishna. LiDARCamera Calibration using 3D-3D Point correspondences. ArXiv eprints, May 2017.

[15] Nick Schneider, Florian Piewak, Christoph Stiller, and Uwe Franke. Regnet: Multimodal sensor registration using deep neural networks. In 2017 IEEE intelligent vehicles symposium (IV), pages 1803–1810. IEEE, 2017.

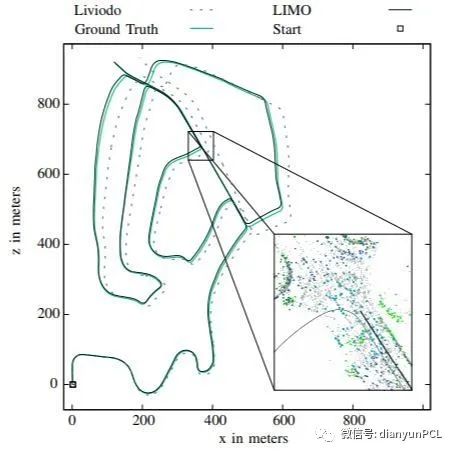

[16] Johannes Graeter, Alexander Wilczynski, and Martin Lauer. Limo: Lidar-monocular visual odometry. 2018.

[17] Ganesh Iyer, J Krishna Murthy, K Madhava Krishna, et al. Calibnet: self-supervised extrinsic calibration using 3d spatial transformer networks. arXiv preprint arXiv:1803.08181, 2018.

【18】Jason Ku, Ali Harakeh, and Steven L Waslander. In defense of classical image processing: Fast depth completion on the cpu. In 2018 15th Conference on Computer and Robot Vision (CRV), pages 16–22. IEEE, 2018

【19】Fangchang Mal and Sertac Karaman. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In 2018 IEEE International Conference on Robotics and Automation (ICRA), pages 1–8. IEEE, 2018.

[20] Jonas Uhrig, Nick Schneider, Lukas Schneider, Uwe Franke, Thomas Brox, and Andreas Geiger. Sparsity invariant cnns. In 2017 International Conference on 3D Vision (3DV), pages 11–20. IEEE, 2017.

[21] Shreyas S Shivakumar, Ty Nguyen, Steven W Chen, and Camillo J Taylor. Dfusenet: Deep fusion of rgb and sparse depth information for image guided dense depth completion. arXiv preprint arXiv:1902.00761, 2019.

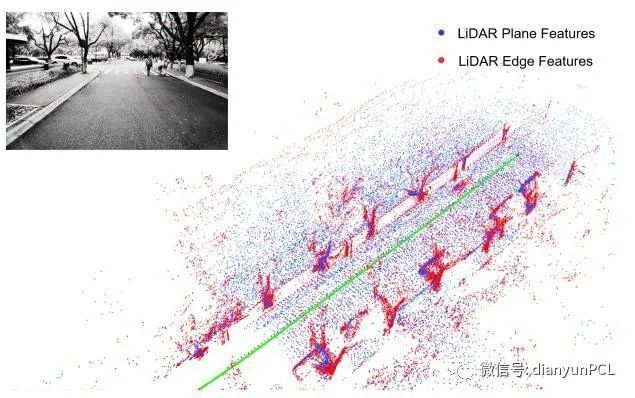

【22】Xingxing Zuo, Patrick Geneva, Woosik Lee, Yong Liu, and Guoquan Huang. Lic-fusion: Lidar-inertial-camera odometry. arXiv preprint arXiv:1909.04102, 2019.

【23】Olivier Aycard, Qadeer Baig, Siviu Bota, Fawzi Nashashibi, Sergiu Nedevschi, Cosmin Pantilie, Michel Parent, Paulo Resende, and TrungDung Vu. Intersection safety using lidar and stereo vision sensors. In 2011 IEEE Intelligent Vehicles Symposium (IV), pages 863–869. IEEE, 2011.

【24】Ricardo Omar Chavez-Garcia and Olivier Aycard. Multiple sensor fusion and classification for moving object detection and tracking IEEE Transactions on Intelligent Transportation Systems, 17(2):525– 534, 2015.

【25】Ji Zhang, Michael Kaess, and Sanjiv Singh. Real-time depth enhanced monocular odometry. In 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 4973–4980. IEEE, 2014.

【26】Ji Zhang and Sanjiv Singh. Visual-lidar odometry and mapping: Lowdrift, robust, and fast. In 2015 IEEE International Conference on Robotics and Automation (ICRA), pages 2174–2181. IEEE, 2015.

【27】Yoshua Nava. Visual-LiDAR SLAM with loop closure. PhD thesis, Masters thesis, KTH Royal Institute of Technology, 2018.

【28】Weizhao Shao, Srinivasan Vijayarangan, Cong Li, and George Kantor. Stereo visual inertial lidar simultaneous localization and mapping. arXiv preprint arXiv:1902.10741, 2019.

[29] Franz Andert, Nikolaus Ammann, and Bolko Maass. Lidar-aided camera feature tracking and visual slam for spacecraft low-orbit navigation and planetary landing. In Advances in Aerospace Guidance, Navigation and Control, pages 605–623. Springer, 2015.

[30] Danfei Xu, Dragomir Anguelov, and Ashesh Jain. Pointfusion: Deep sensor fusion for 3d bounding box estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 244–253, 2018

【31】Kiwoo Shin, Youngwook Paul Kwon, and Masayoshi Tomizuka. Roarnet: A robust 3d object detection based on region approximation refinement. arXiv preprint arXiv:1811.03818, 2018.

[32] Jason Ku, Melissa Mozifian, Jungwook Lee, Ali Harakeh, and Steven Waslander. Joint 3d proposal generation and object detection from view aggregation. IROS, 2018.

[33] Caner Hazirbas, Lingni Ma, Csaba Domokos, and Daniel Cremers. Fusenet: Incorporating depth into semantic segmentation via fusionbased cnn architecture. In Asian conference on computer vision, pages 213–228. Springer, 2016

【34】 Ming Liang, Bin Yang, Shenlong Wang, and Raquel Urtasun. Deep continuous fusion for multi-sensor 3d object detection. In Proceedings of the European Conference on Computer Vision (ECCV), pages 641– 656, 2018.