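[Paper Recommendations] Seven Recent Papers on Adversarial Autoencoders: Population Anomaly Detection, Image-to-Image Translation, Face Attributes, Prostate Cancer Detection, Sentiment Transfer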

[Overview] The Zhuanzhi content team has compiled seven recent papers on adversarial autoencoders and introduces them below.

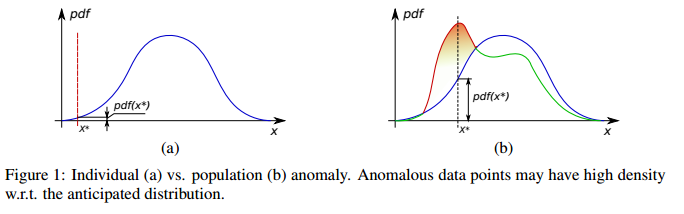

1. Population Anomaly Detection through Deep Gaussianization

Author: David Tolpin

Abstract: We introduce an algorithmic method for population anomaly detection based on gaussianization through an adversarial autoencoder. This method is applicable to detection of 'soft' anomalies in arbitrarily distributed high-dimensional data. A soft, or population, anomaly is characterized by a shift in the distribution of the data set, where certain elements appear with higher probability than anticipated. Such anomalies must be detected by considering a sufficiently large sample set rather than a single sample. Applications include, but are not limited to, payment fraud trends, data exfiltration, disease clusters and epidemics, and social unrest. We evaluate the method on several domains and obtain both quantitative results and qualitative insights.

Venue: arXiv, May 6, 2018

URL:

http://www.zhuanzhi.ai/document/8322da3f14ea3a7616f9429cd790ac65
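
The abstract's point that a population anomaly only becomes visible across a sufficiently large sample can be illustrated with a minimal sketch. It assumes that an encoder has already mapped the data to one-dimensional latent codes that follow N(0, 1) under normal conditions; the batch-mean z-test, the function names, and the threshold of 4.0 are illustrative assumptions, not the statistic used in the paper.

```python
import math
import random


def batch_mean_z_score(latents):
    """Z-score of the batch mean, assuming i.i.d. N(0, 1) latents under normal conditions."""
    n = len(latents)
    return math.sqrt(n) * (sum(latents) / n)


def is_population_anomaly(latents, threshold=4.0):
    """Flag the batch when its mean is implausibly far from zero (hypothetical threshold)."""
    return abs(batch_mean_z_score(latents)) > threshold


rng = random.Random(0)
# Simulated latent codes: a baseline batch and a batch with a small shift in every sample.
normal_batch = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shifted_batch = [rng.gauss(0.3, 1.0) for _ in range(2000)]

print(is_population_anomaly([0.3]))  # a single shifted sample is not flagged
print(is_population_anomaly(normal_batch))
print(is_population_anomaly(shifted_batch))
```

A shift of 0.3 is unremarkable for any single sample, but over 2000 samples the batch mean moves many standard errors away from zero, which is why the test operates on the whole batch.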

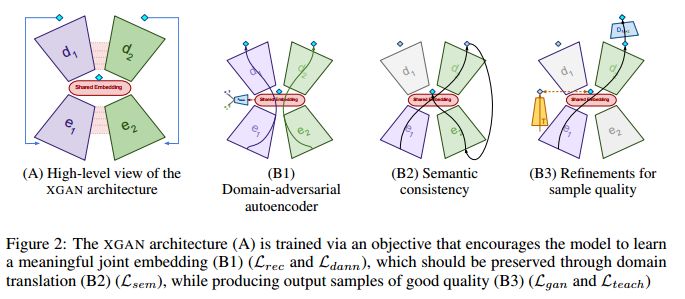

2. XGAN: Unsupervised Image-to-Image Translation for Many-to-Many Mappings

Authors: Amélie Royer, Konstantinos Bousmalis, Stephan Gouws, Fred Bertsch, Inbar Mosseri, Forrester Cole, Kevin Murphy

Affiliation: Google

Abstract: Style transfer usually refers to the task of applying color and texture information from a specific style image to a given content image while preserving the structure of the latter. Here we tackle the more generic problem of semantic style transfer: given two unpaired collections of images, we aim to learn a mapping between the corpus-level style of each collection, while preserving semantic content shared across the two domains. We introduce XGAN ("Cross-GAN"), a dual adversarial autoencoder, which captures a shared representation of the common domain semantic content in an unsupervised way, while jointly learning the domain-to-domain image translations in both directions. We exploit ideas from the domain adaptation literature and define a semantic consistency loss which encourages the model to preserve semantics in the learned embedding space. We report promising qualitative results for the task of face-to-cartoon translation. The cartoon dataset we collected for this purpose is in the process of being released as a new benchmark for semantic style transfer.

Venue: arXiv, April 25, 2018

URL:

http://www.zhuanzhi.ai/document/0b3b1e8f1bd5d9dd0d7bdd8f06c63e54
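
The semantic consistency loss mentioned in the abstract can be sketched as follows: embed a source image, translate it to the other domain, re-embed the translation, and penalize the distance between the two embeddings. The Euclidean distance and the toy scaling functions standing in for the encoder and decoder networks are assumptions made for illustration only.

```python
import math


def semantic_consistency_loss(x, encode_src, decode_tgt, encode_tgt):
    """Distance between the source embedding and the embedding of its translation."""
    z = encode_src(x)                      # embed the source-domain sample
    translated = decode_tgt(z)             # decode it into the target domain
    z_back = encode_tgt(translated)        # re-embed the translated sample
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_back)))


# Toy stand-ins for networks (hypothetical): simple element-wise scalings.
def encode_src(x):
    return [2.0 * v for v in x]


def decode_tgt(z):
    return [v / 4.0 for v in z]


def consistent_encode_tgt(y):
    return [4.0 * v for v in y]


def inconsistent_encode_tgt(y):
    return [2.0 * v for v in y]


x = [1.0, -2.0]
print(semantic_consistency_loss(x, encode_src, decode_tgt, consistent_encode_tgt))
print(semantic_consistency_loss(x, encode_src, decode_tgt, inconsistent_encode_tgt))
```

The loss is zero only when the target-domain encoder maps the translated sample back to the same point in the shared embedding space, which is the behavior the abstract describes as preserving semantics.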

3. Mask-aware Photorealistic Face Attribute Manipulation

Authors: Ruoqi Sun, Chen Huang, Jianping Shi, Lizhuang Ma

Affiliation: Carnegie Mellon University

Abstract: The task of face attribute manipulation has found increasing applications, but still remains challenging with the requirement of editing the attributes of a face image while preserving its unique details. In this paper, we choose to combine the Variational AutoEncoder (VAE) and Generative Adversarial Network (GAN) for photorealistic image generation. We propose an effective method to modify a modest amount of pixels in the feature maps of an encoder, changing the attribute strength continuously without hindering global information. Our training objectives of VAE and GAN are reinforced by the supervision of face recognition loss and cycle consistency loss for faithful preservation of face details. Moreover, we generate facial masks to enforce background consistency, which allows our training to focus on manipulating the foreground face rather than background. Experimental results demonstrate our method, called Mask-Adversarial AutoEncoder (M-AAE), can generate high-quality images with changing attributes and outperforms prior methods in detail preservation.

Venue: arXiv, April 24, 2018

URL:

http://www.zhuanzhi.ai/document/ca132b9a22a613f8351fed75986d0659

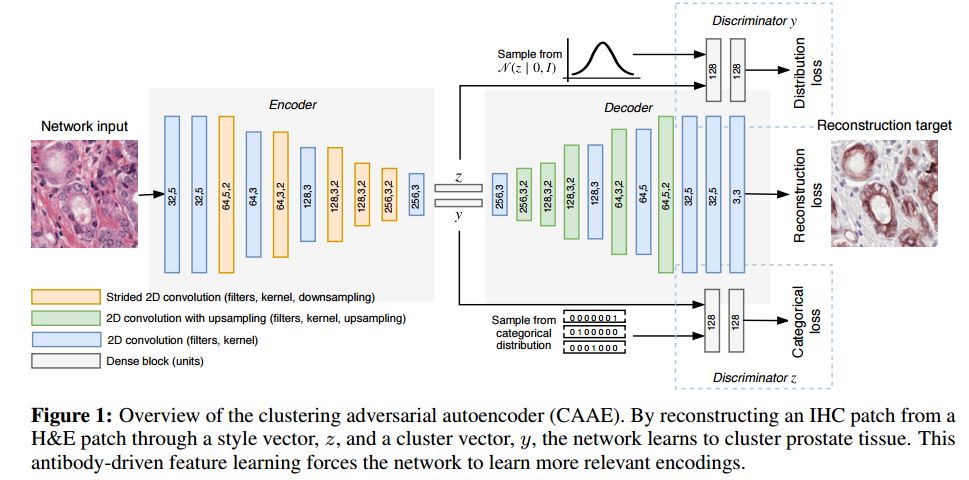

4. Unsupervised Prostate Cancer Detection on H&E using Convolutional Adversarial Autoencoders

Authors: Wouter Bulten, Geert Litjens

Affiliation: Radboud University

Abstract: We propose an unsupervised method using self-clustering convolutional adversarial autoencoders to classify prostate tissue as tumor or non-tumor without any labeled training data. The clustering method is integrated into the training of the autoencoder and requires little post-processing. Our network trains on hematoxylin and eosin (H&E) input patches and we tested two different reconstruction targets, H&E and immunohistochemistry (IHC). We show that antibody-driven feature learning using IHC helps the network to learn relevant features for the clustering task. Our network achieves an F1 score of 0.62 using only a small set of validation labels to assign classes to clusters.

Venue: arXiv, April 19, 2018

URL:

http://www.zhuanzhi.ai/document/673e5d9d3c6ec6245151cdb077a6bae0
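
The last step in the abstract, assigning classes to clusters from a small labeled validation set and then reporting an F1 score, might look like the sketch below. The abstract does not say how the assignment is made; majority voting per cluster, the function names, and the toy data are all assumptions.

```python
from collections import Counter, defaultdict


def assign_classes_to_clusters(cluster_ids, labels):
    """Map each cluster to the most common validation label inside it (assumed rule)."""
    votes = defaultdict(Counter)
    for cluster, label in zip(cluster_ids, labels):
        votes[cluster][label] += 1
    return {cluster: counts.most_common(1)[0][0] for cluster, counts in votes.items()}


def f1_score(y_true, y_pred, positive="tumor"):
    """F1 for the positive class: harmonic mean of precision and recall."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Small labeled validation set: cluster index of each patch and its true label.
val_clusters = [0, 0, 0, 1, 1, 2]
val_labels = ["tumor", "tumor", "non-tumor", "non-tumor", "non-tumor", "tumor"]
cluster_to_class = assign_classes_to_clusters(val_clusters, val_labels)

# Held-out patches: predict the class of each patch from its cluster.
test_clusters = [0, 1, 2, 0, 1]
test_labels = ["tumor", "non-tumor", "non-tumor", "non-tumor", "tumor"]
predictions = [cluster_to_class[c] for c in test_clusters]
print(cluster_to_class, f1_score(test_labels, predictions))
```

Only the mapping from clusters to classes uses labels here; the clustering itself is assumed to come from the unsupervised model.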

5. Sentiment Transfer using Seq2Seq Adversarial Autoencoders

Authors: Ayush Singh, Ritu Palod

Affiliation: Northeastern University

Abstract: Expressing in language is subjective. Everyone has a different style of reading and writing; apparently it all boils down to the way their mind understands things (in a specific format). Language style transfer is a way to preserve the meaning of a text and change the way it is expressed. Progress in language style transfer has lagged behind other domains, such as computer vision, mainly because of the lack of parallel data, use cases, and reliable evaluation metrics. In response to the challenge of lacking parallel data, we explore learning style transfer from non-parallel data. We propose a model combining seq2seq, autoencoders, and adversarial loss to achieve this goal. The key idea behind the proposed models is to learn separate content representations and style representations using adversarial networks. Considering the problem of evaluating style transfer tasks, we frame the problem as sentiment transfer and evaluate using a sentiment classifier to calculate how many sentiments the model was able to transfer. We report our results on several kinds of models.

Venue: arXiv, April 11, 2018

URL:

http://www.zhuanzhi.ai/document/80fb663d9ab16f887ddf0a7fd60ae3df
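
The evaluation idea in the abstract, counting how many outputs a sentiment classifier assigns to the target sentiment, can be sketched as a simple success rate. The keyword-based classifier below is a hypothetical stand-in for a trained model, and the word lists and example sentences are invented for illustration.

```python
POSITIVE_WORDS = {"good", "great", "love"}
NEGATIVE_WORDS = {"bad", "terrible", "hate"}


def toy_sentiment_classifier(text):
    """Hypothetical stand-in for a trained classifier: counts sentiment keywords."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    return "positive" if score > 0 else "negative"


def transfer_success_rate(outputs, target_sentiment, classifier):
    """Fraction of transferred sentences that the classifier labels with the target sentiment."""
    hits = sum(classifier(text) == target_sentiment for text in outputs)
    return hits / len(outputs)


# Model outputs after an attempted negative-to-positive transfer (made-up examples).
transferred = [
    "the food was great",
    "i love this place",
    "service was terrible",
]
print(transfer_success_rate(transferred, "positive", toy_sentiment_classifier))
```

This metric only checks the style side of the task; it says nothing about whether the content of the original sentence was preserved.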

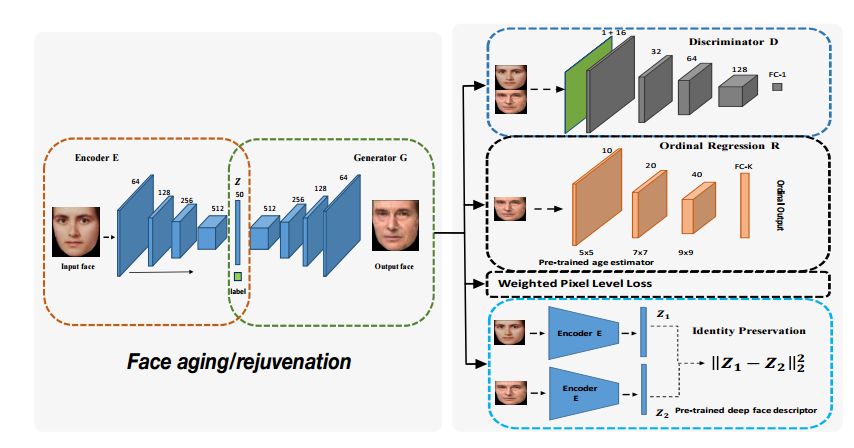

6. Facial Aging and Rejuvenation by Conditional Multi-Adversarial Autoencoder with Ordinal Regression

Authors: Haiping Zhu, Qi Zhou, Junping Zhang, James Z. Wang

Affiliations: Fudan University; The Pennsylvania State University, University Park

Abstract: Facial aging and facial rejuvenation analyze a given face photograph to predict a future look or estimate a past look of the person. To achieve this, it is critical to preserve human identity and the corresponding aging progression and regression with high accuracy. However, existing methods cannot simultaneously handle these two objectives well. We propose a novel generative adversarial network based approach, named the Conditional Multi-Adversarial AutoEncoder with Ordinal Regression (CMAAE-OR). It utilizes an age estimation technique to control the aging accuracy and takes a high-level feature representation to preserve personalized identity. Specifically, the face is first mapped to a latent vector through a convolutional encoder. The latent vector is then projected onto the face manifold conditional on the age through a deconvolutional generator. The latent vector preserves personalized face features and the age controls facial aging and rejuvenation. A discriminator and an ordinal regression are imposed on the encoder and the generator in tandem, making the generated face images more photorealistic while simultaneously exhibiting desirable aging effects. Besides, a high-level feature representation is utilized to preserve personalized identity of the generated face. Experiments on two benchmark datasets demonstrate appealing performance of the proposed method over the state-of-the-art.

Venue: arXiv, April 9, 2018

URL:

http://www.zhuanzhi.ai/document/14867e85f4b73a782a09d89cc5ac8f57
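
Ordinal regression treats age groups as ordered ranks rather than unrelated classes. One common formulation, assumed here and not confirmed by the abstract, turns a rank into a series of binary "is the rank greater than k?" targets and decodes a prediction by counting the thresholds it exceeds. The function names and the five-group example are illustrative.

```python
def ordinal_encode(rank, num_ranks):
    """Encode rank r in [0, num_ranks) as num_ranks - 1 binary targets: 1 where r > k."""
    return [1 if rank > k else 0 for k in range(num_ranks - 1)]


def ordinal_decode(probabilities, threshold=0.5):
    """Predicted rank is the number of 'greater than k' outputs above the threshold."""
    return sum(p > threshold for p in probabilities)


# Five hypothetical age groups, ranked 0 (youngest) to 4 (oldest).
targets = ordinal_encode(2, 5)
predicted_rank = ordinal_decode([0.9, 0.8, 0.3, 0.1])
print(targets, predicted_rank)
```

With this encoding, predicting a neighboring age group gets most of the binary targets right, so the penalty grows with how far the predicted group is from the true one.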

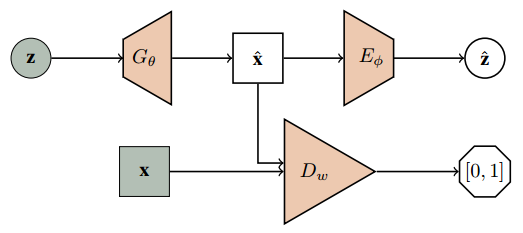

7. Flipped-Adversarial AutoEncoders

Authors: Jiyi Zhang, Hung Dang, Hwee Kuan Lee, Ee-Chien Chang

Abstract: We propose a flipped-Adversarial AutoEncoder (FAAE) that simultaneously trains a generative model G that maps an arbitrary latent code distribution to a data distribution and an encoder E that embodies an "inverse mapping" that encodes a data sample into a latent code vector. Unlike previous hybrid approaches that leverage an adversarial training criterion in constructing autoencoders, FAAE minimizes re-encoding errors in the latent space and exploits an adversarial criterion in the data space. Experimental evaluations demonstrate that the proposed framework produces sharper reconstructed images while at the same time enabling inference that captures rich semantic representation of data.

Venue: arXiv, April 4, 2018

URL:

http://www.zhuanzhi.ai/document/fd75b74d0a5388d45cac1ab8521d806c
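
The "flipped" part of the abstract is that the reconstruction-style error is measured on latent codes rather than on data samples: a code z is passed through the generator G and then the encoder E, and the result is compared with z. The sketch below shows only that term; the squared-error distance and the toy linear maps standing in for G and E are assumptions, and the adversarial criterion in data space is not shown.

```python
def squared_error(a, b):
    """Sum of squared element-wise differences between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def latent_reencoding_error(z, generator, encoder):
    """Error in latent space: compare z with E(G(z))."""
    return squared_error(z, encoder(generator(z)))


# Toy stand-ins for networks (hypothetical): 2-D latent code, 3-D data sample.
def generator(z):
    return [z[0], z[1], z[0] + z[1]]


def exact_encoder(x):
    return [x[0], x[1]]          # inverts the toy generator exactly


def imperfect_encoder(x):
    return [x[0], 0.5 * x[2]]    # recovers the second coordinate incorrectly


z = [1.0, 3.0]
print(latent_reencoding_error(z, generator, exact_encoder))
print(latent_reencoding_error(z, generator, imperfect_encoder))
```

A conventional autoencoder would instead compare a data sample x with G(E(x)); here the roles are swapped so that sample quality can be left to the adversarial criterion in data space.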
