Taking a Scalpel to Convolutional Neural Networks: Exploring AdderNet

adder.py

main.py

resnet20.py

resnet50.py

test.py

    import torch
    import torch.nn as nn


    class adder2d(nn.Module):
        """2-D adder layer: filter responses are negative L1 distances (see adder2d_function)."""

        def __init__(self, input_channel, output_channel, kernel_size, stride=1, padding=0, bias=False):
            super(adder2d, self).__init__()
            self.stride = stride
            self.padding = padding
            self.input_channel = input_channel
            self.output_channel = output_channel
            self.kernel_size = kernel_size
            # Filter weights, shape (output_channel, input_channel, kernel_size, kernel_size)
            self.adder = torch.nn.Parameter(nn.init.normal_(torch.randn(output_channel, input_channel, kernel_size, kernel_size)))
            self.bias = bias
            if bias:
                self.b = torch.nn.Parameter(nn.init.uniform_(torch.zeros(output_channel)))

        def forward(self, x):
            output = adder2d_function(x, self.adder, self.stride, self.padding)
            if self.bias:
                # Broadcast the per-channel bias over the batch and spatial dimensions
                output += self.b.unsqueeze(0).unsqueeze(2).unsqueeze(3)
            return output

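As shown, the `adder2d` class defines the adder operator and inherits from `nn.Module`, so it can be used directly as a layer when defining a network. For example, `resnet20.py` defines an adder layer with a 3×3 kernel as follows: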

    def conv3x3(in_planes, out_planes, stride=1):
        " 3x3 convolution with padding "
        return adder.adder2d(in_planes, out_planes, kernel_size=3, stride=stride, padding=1, bias=False)
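As a quick sanity check on the `kernel_size=3, padding=1` choice, the output-size formula used by `adder2d_function` can be evaluated on its own. This is a minimal sketch: the helper name `out_size` and the 32-pixel input size are illustrative assumptions, not taken from the repository.

```python
def out_size(in_size, kernel_size, stride=1, padding=0):
    """Spatial output size, using the same formula as adder2d_function."""
    return int((in_size - kernel_size + 2 * padding) / stride + 1)


# A 3x3 kernel with padding=1 keeps the spatial size at stride 1 ...
same = out_size(32, kernel_size=3, stride=1, padding=1)
# ... and halves it at stride 2.
halved = out_size(32, kernel_size=3, stride=2, padding=1)
print(same, halved)
```

With these settings the layer should behave like a padded 3×3 convolution as far as tensor shapes are concerned.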


    def adder2d_function(X, W, stride=1, padding=0):
        n_filters, d_filter, h_filter, w_filter = W.size()
        n_x, d_x, h_x, w_x = X.size()

        # Output spatial size, same formula as a standard convolution
        h_out = (h_x - h_filter + 2 * padding) / stride + 1
        w_out = (w_x - w_filter + 2 * padding) / stride + 1
        h_out, w_out = int(h_out), int(w_out)

        # Unfold input patches into columns: shape (d_x * h_filter * w_filter, h_out * w_out * n_x)
        X_col = torch.nn.functional.unfold(X.view(1, -1, h_x, w_x), h_filter, dilation=1, padding=padding, stride=stride).view(n_x, -1, h_out*w_out)
        X_col = X_col.permute(1,2,0).contiguous().view(X_col.size(1),-1)
        # Flatten each filter into one row: shape (n_filters, d_filter * h_filter * w_filter)
        W_col = W.view(n_filters, -1)

        out = adder.apply(W_col,X_col)

        # Reshape back to (n_x, n_filters, h_out, w_out)
        out = out.view(n_filters, h_out, w_out, n_x)
        out = out.permute(3, 0, 1, 2).contiguous()
        return out
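To make the operation behind `adder2d_function` concrete, here is a dependency-free sketch that slides a filter over a single-channel image and returns the negative L1 distance at each position. The function name `adder_naive` and the nested-list representation are assumptions made for illustration; the repository computes the same quantity in batched form with `unfold` and tensor broadcasting.

```python
def adder_naive(image, kernel, stride=1, padding=0):
    """Slide a square `kernel` over a single-channel 2-D `image` (nested lists)
    and return -sum(|patch - kernel|) at every position."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    # Zero-pad the image on all four sides.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    h_out = (h - k + 2 * padding) // stride + 1
    w_out = (w - k + 2 * padding) // stride + 1
    out = []
    for oi in range(h_out):
        row = []
        for oj in range(w_out):
            dist = 0.0
            for ki in range(k):
                for kj in range(k):
                    dist += abs(padded[oi * stride + ki][oj * stride + kj] - kernel[ki][kj])
            row.append(-dist)  # response is the negative L1 distance
        out.append(row)
    return out


image = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0],
         [7.0, 8.0, 9.0]]
kernel = [[1.0, 2.0],
          [4.0, 5.0]]
result = adder_naive(image, kernel)
print(result)
```

The top-left patch matches the kernel exactly, so its response is zero, the largest value the operation can produce; every other response is negative.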


class adder(Function):

@staticmethod

def forward(ctx, W_col, X_col):

ctx.save_for_backward(W_col,X_col)

output = -(W_col.unsqueeze(2)-X_col.unsqueeze(0)).abs().sum(1)

return output

@staticmethod

def backward(ctx,grad_output):

W_col,X_col = ctx.saved_tensors

grad_W_col = ((X_col.unsqueeze(0)-W_col.unsqueeze(2))*grad_output.unsqueeze(1)).sum(2)

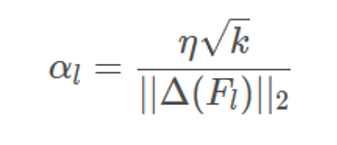

grad_W_col = grad_W_col/grad_W_col.norm(p=2).clamp(min=1e-12)*math.sqrt(W_col.size(1)*W_col.size(0))/5

grad_X_col = (-(X_col.unsqueeze(0)-W_col.unsqueeze(2)).clamp(-1,1)*grad_output.unsqueeze(1)).sum(0)

return grad_W_col, grad_X_col
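For reference, the forward and backward passes of the `adder` Function can be written in closed form. The notation is chosen here for illustration and read off the code, not quoted from the paper: $W \in \mathbb{R}^{F \times K}$ is `W_col`, $X \in \mathbb{R}^{K \times N}$ is `X_col`, $Y$ is the output, $G = \partial L / \partial Y$ is `grad_output`, and $\eta = 1/5$ is the constant hard-coded in the normalisation line.

```latex
\begin{aligned}
Y_{f,n} &= -\sum_{k} \left| W_{f,k} - X_{k,n} \right| \\
\frac{\partial L}{\partial W_{f,k}} &= \sum_{n} \left( X_{k,n} - W_{f,k} \right) G_{f,n} \\
\widetilde{\frac{\partial L}{\partial W}} &= \frac{\eta \sqrt{F K}}{\max\left( \lVert \partial L / \partial W \rVert_2,\ 10^{-12} \right)} \, \frac{\partial L}{\partial W}, \qquad \eta = \tfrac{1}{5} \\
\frac{\partial L}{\partial X_{k,n}} &= \sum_{f} \mathrm{HT}\left( W_{f,k} - X_{k,n} \right) G_{f,n}, \qquad \mathrm{HT}(z) = \max(-1, \min(1, z))
\end{aligned}
```

Note that the exact derivative of $-\lvert W_{f,k} - X_{k,n} \rvert$ with respect to $W_{f,k}$ would be $\operatorname{sgn}(X_{k,n} - W_{f,k})$; the code substitutes the unclipped difference for the weights and the clipped difference for the inputs.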

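The normalisation line in `adder.backward` rescales the weight gradient so that its L2 norm depends only on the number of weights, not on the magnitude of the raw gradient. The sketch below reproduces that step, and the `clamp(-1, 1)` used for the input gradient, with the standard library only. The names `scale_weight_grad` and `hard_tanh` are hypothetical helpers, and `eta=0.2` stands in for the `/5` in the code.

```python
import math


def scale_weight_grad(grad_w, eta=0.2, eps=1e-12):
    """Rescale a 2-D gradient (list of rows) to L2 norm eta * sqrt(numel),
    mirroring the normalisation line in adder.backward (eta = 1/5 there)."""
    numel = len(grad_w) * len(grad_w[0])
    norm = math.sqrt(sum(g * g for row in grad_w for g in row))
    factor = eta * math.sqrt(numel) / max(norm, eps)
    return [[g * factor for g in row] for row in grad_w]


def hard_tanh(z):
    """Clip z to [-1, 1], the scalar analogue of clamp(-1, 1)."""
    return max(-1.0, min(1.0, z))


# Two gradients with the same direction but very different magnitudes ...
small = scale_weight_grad([[3.0, 4.0], [0.0, 0.0]])
large = scale_weight_grad([[300.0, 400.0], [0.0, 0.0]])
# ... end up (approximately) identical after rescaling.
print(small, large)
print(hard_tanh(3.5), hard_tanh(-0.25))
```

After rescaling, only the direction of the raw gradient and the layer's weight count influence the update, which is one way to read the purpose of this line.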

The paper has been accepted to CVPR 2020.

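First author:

Hanting Chen (陈汉亭) is a third-year student in the combined master's and PhD program at the Department of Machine Intelligence, Peking University. He holds a bachelor's degree from Tongji University, is advised by Professor Chao Xu (许超) of Peking University, and is an intern at Huawei Noah's Ark Lab. His research interests include computer vision, machine learning, and deep learning. He has published several papers at conferences such as ICCV, AAAI, and CVPR, and his current research focuses on making neural network models smaller.

Second author:

Yunhe Wang (王云鹤) works on algorithm development and engineering deployment for edge computing at Huawei Noah's Ark Lab. His research covers pruning, quantization, distillation, and automated search for deep neural networks. Dr. Wang received his PhD from Peking University and has published more than 40 papers in related fields, at venues including NeurIPS, ICML, CVPR, ICCV, TPAMI, AAAI, and IJCAI.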

Paper: https://arxiv.org/pdf/1912.13200.pdf

GitHub code: https://github.com/huawei-noah/AdderNet