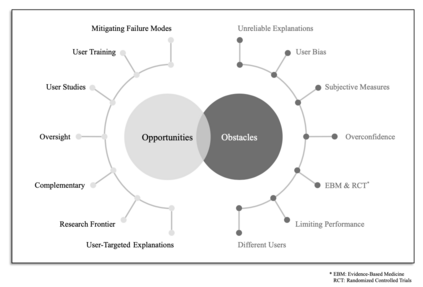

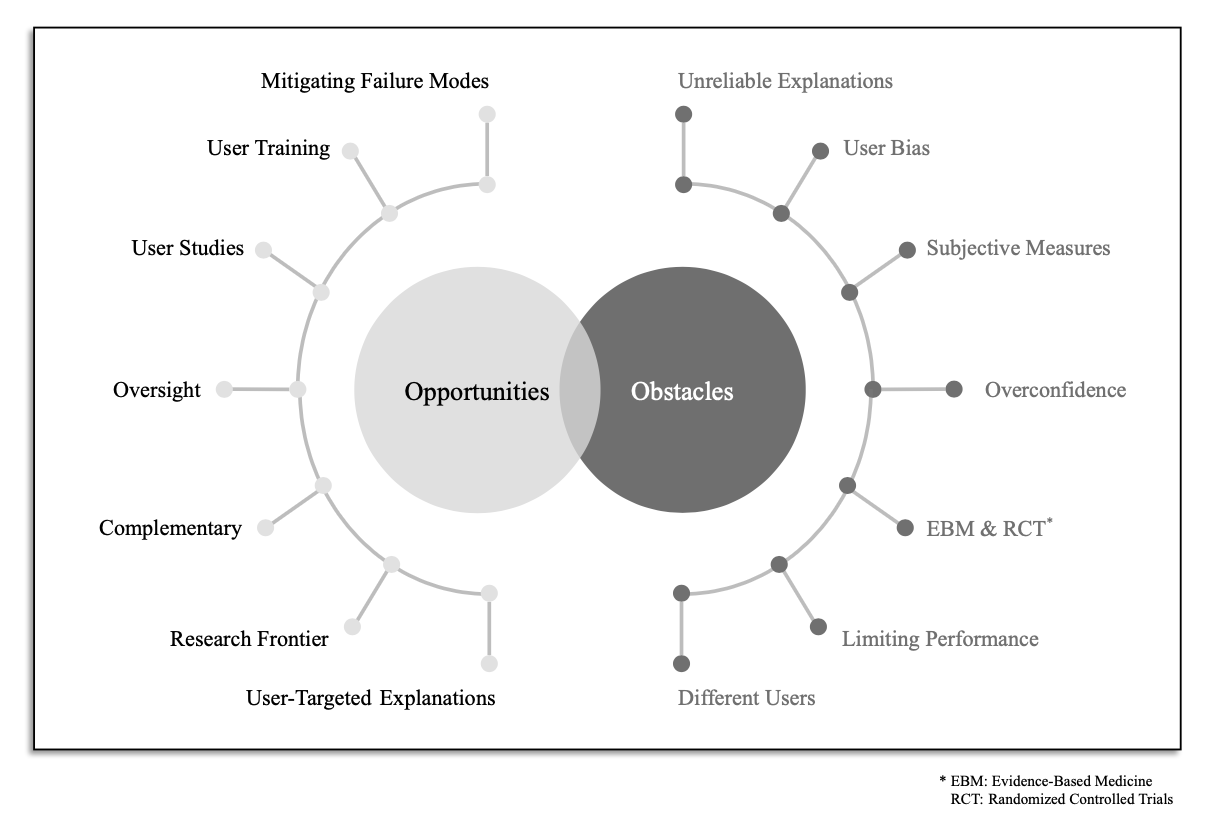

The recent spike in certified Artificial Intelligence (AI) tools for healthcare has renewed the debate around the adoption of this technology. One thread of this debate concerns Explainable AI and its promise to make AI devices more transparent and trustworthy. A few voices active in the medical AI space have expressed concerns about the reliability of Explainable AI techniques, questioning their use and their inclusion in guidelines and standards. Revisiting these criticisms, this article offers a balanced and comprehensive perspective on the utility of Explainable AI, focusing on the specificity of clinical applications of AI and placing them in the context of healthcare interventions. Against its detractors, and despite valid concerns, we argue that the Explainable AI research program remains central to human-machine interaction and is ultimately our main tool against loss of control, a danger that cannot be prevented by rigorous clinical validation alone.
