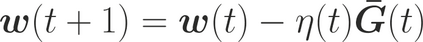

Communication efficiency is one of the main concerns in federated learning (FL), since a large number of participating edge devices send their updates to the edge server in each round of model training. Existing works reconstruct each model update from the edge devices and implicitly assume that the local model updates are independent across edge devices. In FL, however, the model update is an indirect multiterminal source coding problem: each edge device cannot directly observe the source that is to be reconstructed at the decoder, and is instead provided only with a noisy version of it. Existing works do not exploit the redundancy in the information transmitted by different edge devices. This paper studies the rate region for the indirect multiterminal source coding problem in FL. The goal is to obtain the minimum achievable rate subject to a given upper bound on the gradient variance. We obtain the rate region for multiple edge devices in the general case and derive an explicit formula for the sum-rate distortion function in the special case where the gradients are identical across edge devices and dimensions. Finally, we analyze the communication efficiency of convex mini-batched SGD and non-convex mini-batched SGD, respectively, based on the sum-rate distortion function.
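To make the notion of redundancy across edge devices concrete, the following is a minimal sketch and not the coding scheme studied in the paper. It assumes, purely for illustration, that each device observes the common source (a "true" gradient) corrupted by independent additive Gaussian noise; the function names, the noise model, and all parameter values are hypothetical. Under that assumption the local updates are correlated through the shared source, and even a plain average at the server has a much lower error than any single noisy observation.

```python
import random


def simulate_noisy_gradients(num_devices, dim, noise_std, seed=0):
    """Draw a common source and one noisy observation of it per device.

    Assumption (not from the paper): independent additive Gaussian noise.
    """
    rng = random.Random(seed)
    true_grad = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    local_grads = [
        [g + rng.gauss(0.0, noise_std) for g in true_grad]
        for _ in range(num_devices)
    ]
    return true_grad, local_grads


def mean_squared_error(estimate, target):
    """Average squared difference between two equal-length vectors."""
    return sum((e - t) ** 2 for e, t in zip(estimate, target)) / len(target)


def correlation(a, b):
    """Sample Pearson correlation between two equal-length vectors."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


true_grad, local_grads = simulate_noisy_gradients(
    num_devices=20, dim=5000, noise_std=1.0
)

# Server-side estimate: coordinate-wise average over devices.
averaged = [sum(column) / len(column) for column in zip(*local_grads)]

single_mse = mean_squared_error(local_grads[0], true_grad)
averaged_mse = mean_squared_error(averaged, true_grad)
pairwise_corr = correlation(local_grads[0], local_grads[1])

print(f"MSE of one device's update:   {single_mse:.3f}")
print(f"MSE of the averaged update:   {averaged_mse:.3f}")
print(f"Correlation between devices:  {pairwise_corr:.3f}")
```

The clearly positive correlation between two devices' updates is the redundancy that a scheme treating the updates as independent would leave unused; how many bits it can save at a given gradient variance is the question the rate region addresses.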
