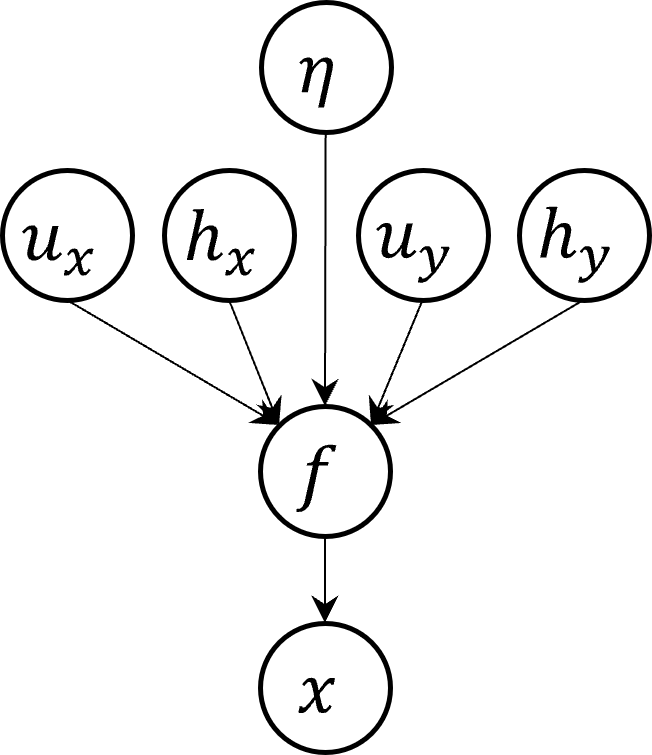

Gaussian processes are the leading method for non-parametric regression on small to medium datasets. One main challenge is the choice of kernel and the optimization of its hyperparameters. We propose a novel regression method that requires no specification of a kernel, length scale, variance, or prior mean. Its only hyperparameter is the assumed regularity (degree of differentiability) of the true function. We achieve this with a novel non-Gaussian stochastic process that we construct from the minimal assumptions of translation and scale invariance. The process can be thought of as a hierarchical Gaussian process model in which the hyperparameters have been incorporated into the process itself. To perform inference with this process, we develop the required mathematical tools. For interpolation, the posterior turns out to be a t-process with a polyharmonic spline as its mean. For regression, we state the exact posterior, find its mean (again a polyharmonic spline), and approximate its variance with a sampling method. Experiments show performance equal to that of Gaussian processes with optimized hyperparameters. The most important insight is that a working machine learning method can be derived by assuming nothing but regularity and scale and translation invariance, without any other model assumptions.
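
Since the interpolation posterior mean is a polyharmonic spline, a short sketch of plain polyharmonic spline interpolation may help make that object concrete. The NumPy code below is a minimal illustration under stated assumptions, not the authors' implementation: it uses the standard radial basis φ(r) = r^k (odd k) or r^k log r (even k) with a degree ≤ 1 polynomial tail, restricts the exponent to k ∈ {1, 2, 3}, and leaves k as a free parameter because the abstract does not say how it maps to the assumed regularity. The names `polyharmonic_kernel`, `PolyharmonicSpline`, and `fit_polyharmonic_spline` are hypothetical. It computes only the mean, not the t-process variance or the regression posterior.

```python
import numpy as np
from dataclasses import dataclass


def _as_points(X) -> np.ndarray:
    """Return inputs as an (n, d) float array; a 1-D array is read as n scalar points."""
    X = np.asarray(X, dtype=float)
    return X[:, None] if X.ndim == 1 else X


def polyharmonic_kernel(r: np.ndarray, k: int) -> np.ndarray:
    """phi(r) = r^k for odd k, r^k * log(r) for even k, with phi(0) = 0."""
    if k % 2 == 1:
        return r**k
    out = np.zeros_like(r)
    pos = r > 0  # r^k log r -> 0 as r -> 0, so skip log(0)
    out[pos] = r[pos] ** k * np.log(r[pos])
    return out


@dataclass
class PolyharmonicSpline:
    centers: np.ndarray    # training inputs, shape (n, d)
    weights: np.ndarray    # radial-basis weights w, shape (n,)
    poly_coef: np.ndarray  # coefficients of the degree <= 1 polynomial, shape (d + 1,)
    k: int

    def __call__(self, Xq) -> np.ndarray:
        """Evaluate the spline at query points Xq of shape (m, d)."""
        Xq = _as_points(Xq)
        r = np.linalg.norm(Xq[:, None, :] - self.centers[None, :, :], axis=-1)
        Pq = np.hstack([np.ones((len(Xq), 1)), Xq])
        return polyharmonic_kernel(r, self.k) @ self.weights + Pq @ self.poly_coef


def fit_polyharmonic_spline(X, y, k: int = 3) -> PolyharmonicSpline:
    """Interpolate (X, y) with s(x) = sum_i w_i phi(|x - x_i|) + a + b.x."""
    # Assumption of this sketch: a linear polynomial tail suffices only for k in {1, 2, 3}.
    if k not in (1, 2, 3):
        raise ValueError("this sketch supports k = 1, 2, 3 only")
    X = _as_points(X)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    r = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    A = polyharmonic_kernel(r, k)
    P = np.hstack([np.ones((n, 1)), X])
    # Saddle-point system [[A, P], [P^T, 0]] [w; v] = [y; 0]. The lower block enforces
    # P^T w = 0, which makes the system uniquely solvable when the inputs are not
    # all on one hyperplane.
    M = np.block([[A, P], [P.T, np.zeros((d + 1, d + 1))]])
    coef = np.linalg.solve(M, np.concatenate([y, np.zeros(d + 1)]))
    return PolyharmonicSpline(X, coef[:n], coef[n:], k)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(size=(20, 2))
    y = np.sin(3 * X[:, 0]) + np.cos(2 * X[:, 1])
    s = fit_polyharmonic_spline(X, y, k=2)  # thin-plate spline in 2-D
    print("max interpolation error:", np.max(np.abs(s(X) - y)))
    # Rescale and shift the inputs: the fitted function should move with them.
    shift = np.array([5.0, -2.0])
    s2 = fit_polyharmonic_spline(3.0 * X + shift, y, k=2)
    Xq = rng.uniform(size=(5, 2))
    print("max invariance gap:", np.max(np.abs(s(Xq) - s2(3.0 * Xq + shift))))
```

The demo checks the interpolation property and compares a spline fitted on rescaled and shifted inputs with the original one. Because the polynomial tail absorbs the extra r^k log c term that scaling introduces for even k, the two should agree up to rounding, which mirrors the scale and translation invariance the abstract takes as its starting assumption.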

翻译:高斯进程是中小数据集非参数回归的主要方法。 一个主要的挑战就是选择内核和优化超参数。 我们提出一个新的回归方法, 不需要对内核、 长度尺度、 差异或先前平均值进行规格说明。 它唯一的超参数是假设真实函数的规律性( 差异度) 。 我们用一个新颖的非加西西亚的随机过程来实现这一目标, 我们从最起码的翻译假设和规模变差中构建这个过程。 这个过程可以被看作高斯级进程模型, 将超参数纳入进程本身。 要用这个过程进行推断, 我们开发所需的数学工具。 它唯一的超度参数是假设真实函数的规律性( 差异度) 。 对于回归, 我们用一个新颖的、 非加固度和比例差的假设来完成这个过程。 实验显示一个和高斯进程一样的性能, 而没有优化的超标准, 假设任何最精确的机器变异性, 也是最有可能的。