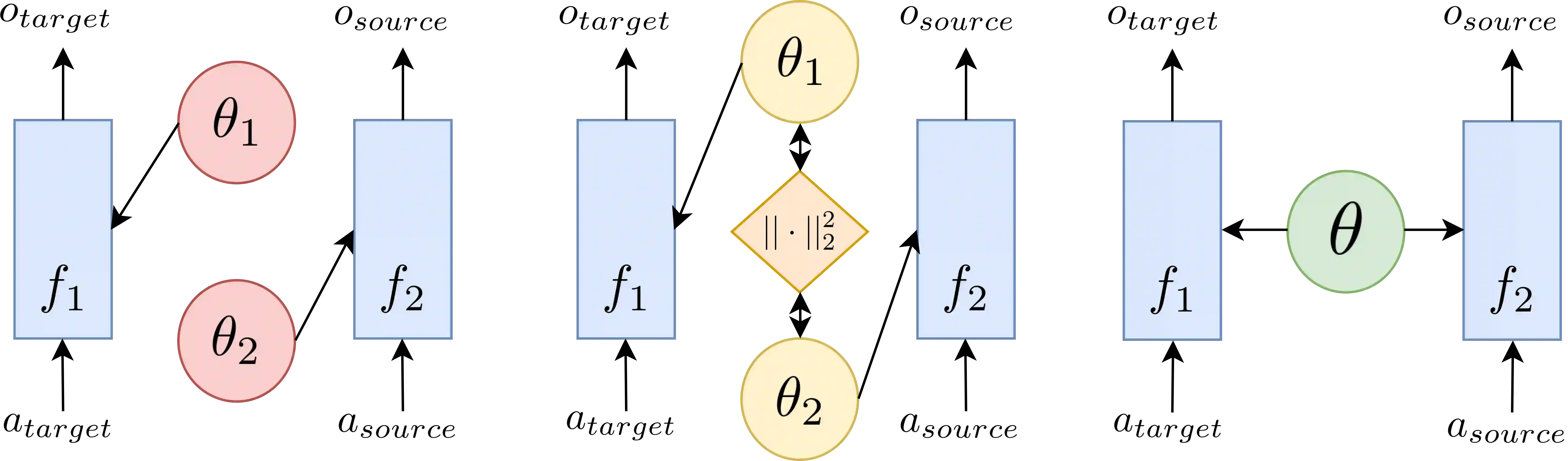

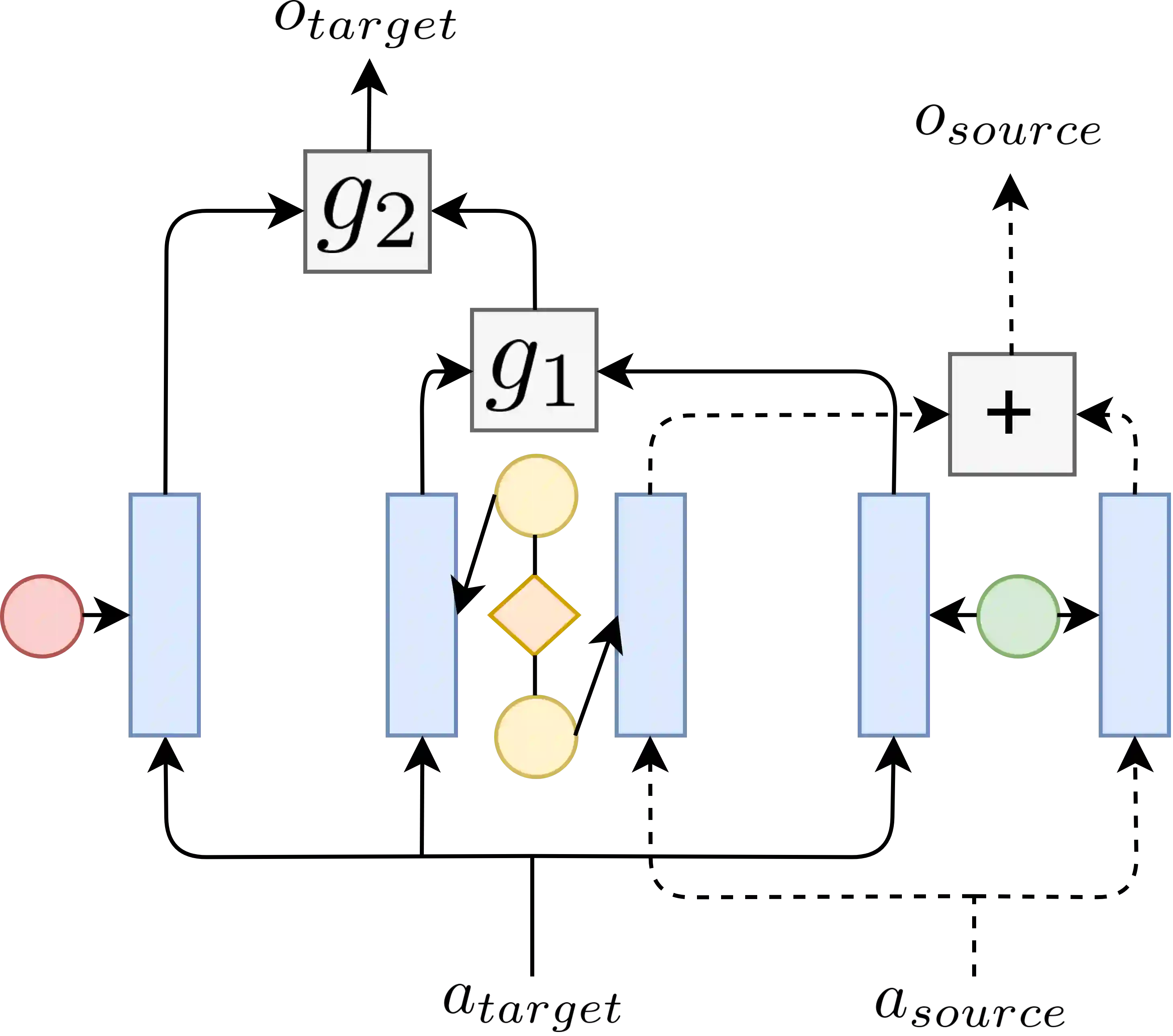

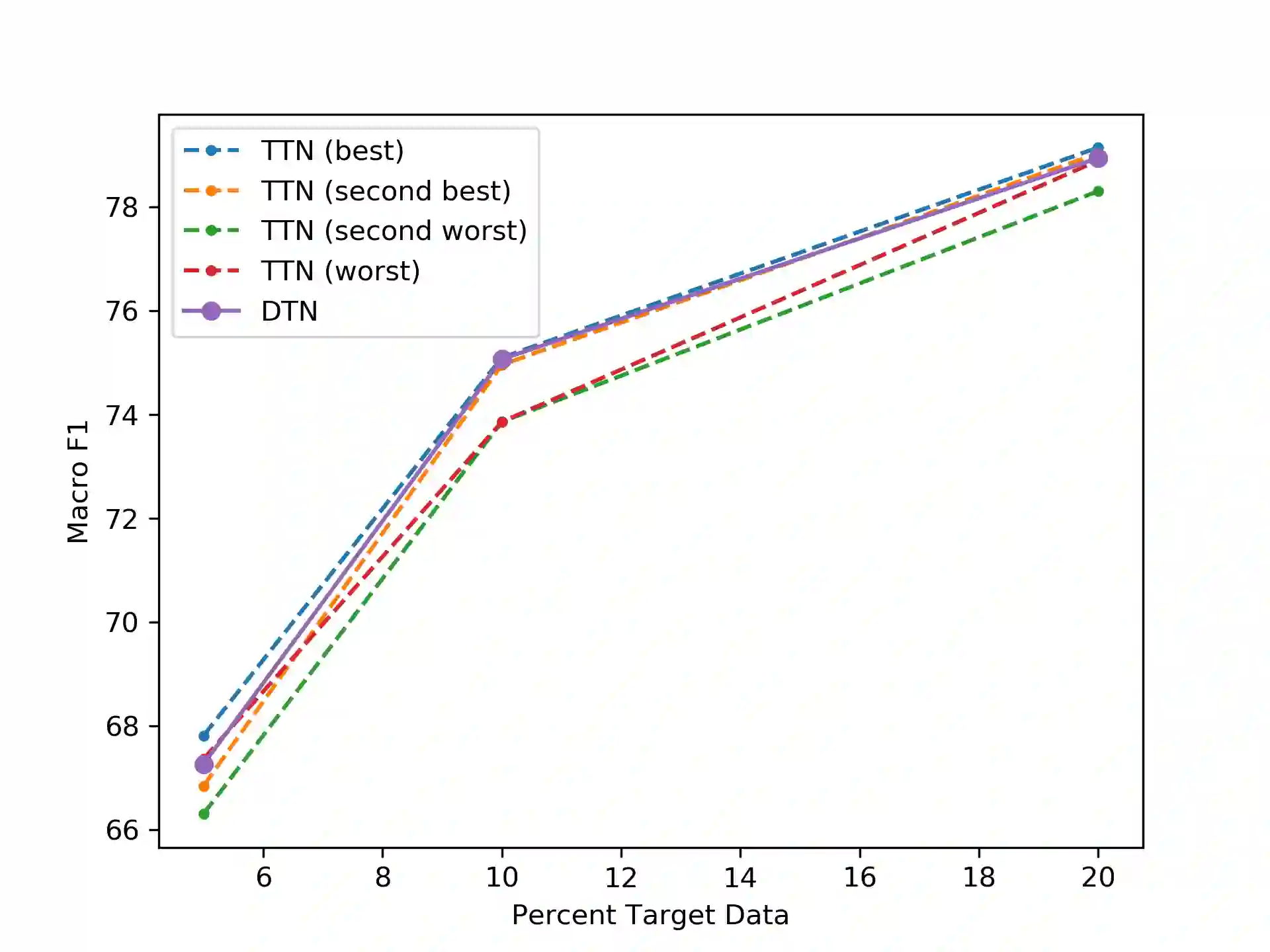

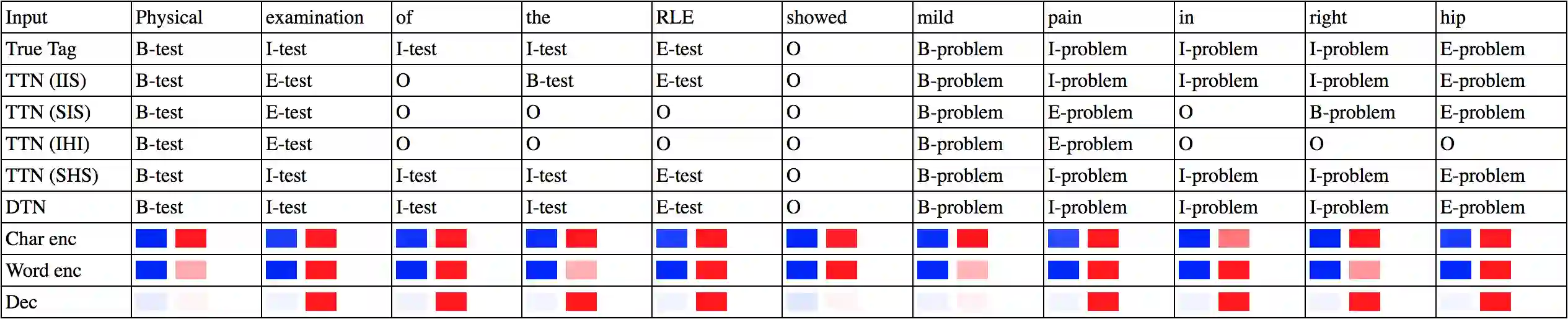

State-of-the-art named entity recognition (NER) systems have improved steadily over the past several years through neural architectures. However, many tasks, NER included, require large annotated datasets to reach this level of performance. We focus on NER in clinical notes, one of the most fundamental and critical problems in medical text analysis. Our work centers on adapting these neural architectures to low-resource settings using parameter transfer methods. We complement a standard hierarchical NER model with a general transfer learning framework based on parameter sharing between the source and target tasks, and obtain scores significantly above the baseline architecture. However, these sharing schemes require an exponential search over sets of tied parameters to find an optimal configuration. To avoid this exhaustive search, we propose Dynamic Transfer Networks (DTN), a gated architecture that learns the appropriate parameter sharing scheme between the source and target datasets. DTN matches the improvements of the optimized transfer learning framework with a single training setting, removing the need for an exponential search.
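The cost of the exhaustive search and the idea of a learned gate can be illustrated with a minimal sketch. It assumes a hierarchical NER model split into three hypothetical parameter groups (`char_encoder`, `word_encoder`, `crf_layer`). Each group is either tied to the source task or kept private to the target task, so k groups yield 2^k configurations, each needing its own training run. The hypothetical `gated_mix` function shows a generic elementwise gate that blends a shared path with a private path. This is an illustration of gated parameter sharing in general, not the exact DTN formulation, which the abstract does not specify.

```python
import math
from itertools import product

# Hypothetical parameter groups of a hierarchical NER model.
# These names are illustrative assumptions, not taken from the paper.
PARAM_GROUPS = ["char_encoder", "word_encoder", "crf_layer"]


def sharing_configurations(groups):
    """Enumerate every tie/untie choice: True means the group is shared
    with the source task, False means it is private to the target task."""
    return [dict(zip(groups, choice))
            for choice in product([True, False], repeat=len(groups))]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gated_mix(shared_out, private_out, gate_logits):
    """Elementwise blend g * shared + (1 - g) * private, with g = sigmoid(logit).
    In a trained model the gate logits would be learned parameters, so the
    sharing decision is made by gradient descent instead of by search."""
    out = []
    for s, p, z in zip(shared_out, private_out, gate_logits):
        g = sigmoid(z)
        out.append(g * s + (1.0 - g) * p)
    return out


if __name__ == "__main__":
    configs = sharing_configurations(PARAM_GROUPS)
    # 2 ** 3 = 8 separate training runs for an exhaustive search.
    print(len(configs))

    # A gate logit of 0 gives g = 0.5, an equal blend of both paths.
    print(gated_mix([1.0, 2.0, 3.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]))
```

A large positive gate logit drives the blend toward the shared path and a large negative one toward the private path, so a single training run can settle on a per-component sharing choice that the exhaustive approach would otherwise find by training every one of the 2^k configurations.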

翻译:过去几年来,使用神经结构不断改进了最先进的实体识别(NER)系统,但包括NER在内的许多任务需要大量附加说明的数据才能取得这种性能。特别是,我们注重临床笔记中的NER,这是医学文本分析的最根本和最关键问题之一。我们的工作中心是利用参数传输方法,使这些神经结构有效地适应低资源环境。我们用一个由源和目标任务之间参数共享构成的一般传输学习框架来补充标准级NER模型,并展示比基线结构高得多的分数。这些共享计划需要对捆绑的参数组进行指数搜索,以产生最佳配置。为了减轻对模型优化进行彻底搜索的问题,我们提议了动态传输网络(DTN),这是一个封闭的架构,以学习源和目标数据集之间的适当参数共享计划。DTN用一个单一的培训设置来改进优化的传输学习框架,有效地消除了指数搜索的需要。