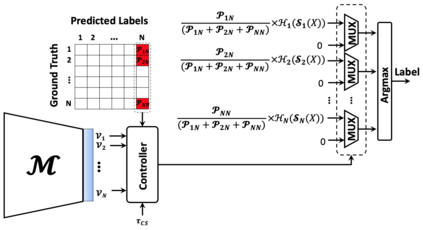

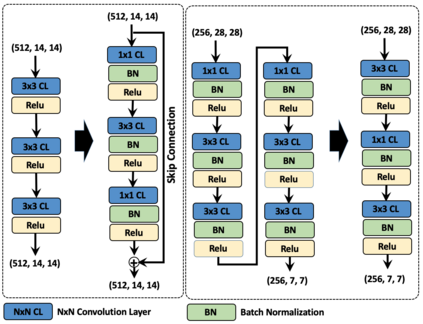

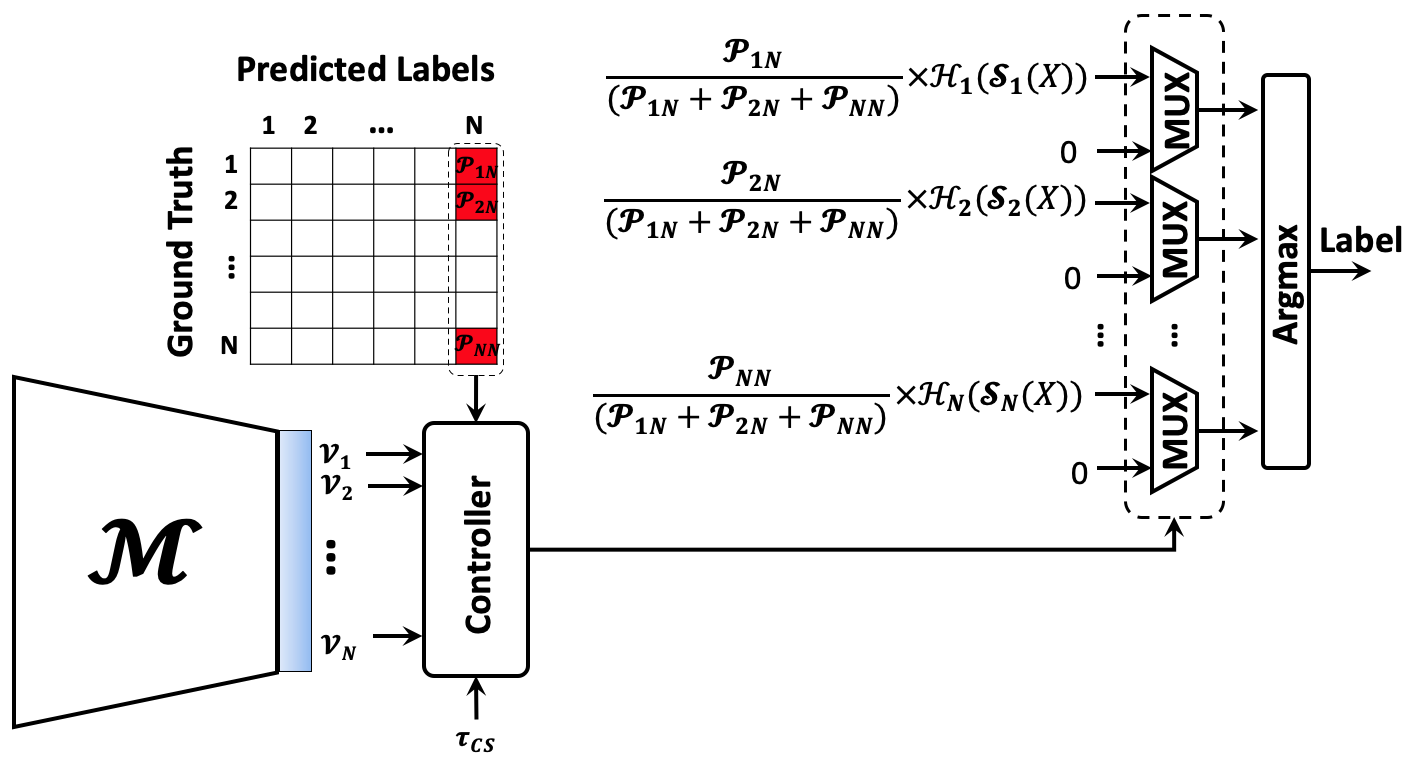

Deep convolutional neural networks have proven highly effective in computer vision and other applications. However, as networks grow deeper, their computational complexity grows exponentially. In this paper, we propose a novel solution to reduce the computational complexity of convolutional neural network models used for many-class image classification. Our proposed technique breaks the classification task into two steps: 1) coarse-grained classification, in which input samples are classified into a set of hyper-classes, and 2) fine-grained classification, in which the final labels are predicted from among the classes belonging to the hyper-classes detected in the first step. We show that our proposed classifier can reach the accuracy reported by best-in-class classification models at lower computational complexity (FLOP count), by activating only the parts of the model needed to classify each image.
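
The two-step scheme amounts to conditional computation. A coarse head picks a hyper-class, and only the fine head for that hyper-class is evaluated. The following is a minimal sketch under loudly stated assumptions. Single dense layers stand in for the CNN stages. Routing is top-1, whereas the abstract allows several detected hyper-classes. All names (`HierarchicalClassifier`, `coarse`, `fine`, `fine_labels`) and all weights are hypothetical and are not the authors' model.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]


def linear(weights: Matrix, x: Sequence[float]) -> Tuple[List[float], int]:
    """Dense layer y = W x. Returns the outputs and a FLOP estimate,
    counting each multiply-accumulate as 2 FLOPs (a common convention)."""
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    flops = 2 * len(weights) * len(x)
    return out, flops


def argmax(values: Sequence[float]) -> int:
    # Index of the largest score (first one wins on ties).
    return max(range(len(values)), key=lambda i: values[i])


@dataclass
class HierarchicalClassifier:
    coarse: Matrix                     # hypothetical: one row per hyper-class
    fine: Dict[int, Matrix]            # hypothetical: one fine head per hyper-class
    fine_labels: Dict[int, List[str]]  # class labels covered by each fine head

    def predict(self, x: Sequence[float]) -> Tuple[str, int]:
        # Step 1: coarse-grained classification over hyper-classes.
        hyper_scores, flops = linear(self.coarse, x)
        h = argmax(hyper_scores)
        # Step 2: fine-grained classification, running ONLY the selected head.
        fine_scores, fine_flops = linear(self.fine[h], x)
        return self.fine_labels[h][argmax(fine_scores)], flops + fine_flops

    def flat_flops(self, dim: int) -> int:
        # Cost of a single flat head scoring every class at once, for comparison.
        n_classes = sum(len(head) for head in self.fine.values())
        return 2 * n_classes * dim


# Toy example: 3 hyper-classes x 3 classes each, 2 input features (made-up weights).
head = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
model = HierarchicalClassifier(
    coarse=[[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
    fine={0: head, 1: head, 2: head},
    fine_labels={
        0: ["cat", "dog", "horse"],
        1: ["car", "truck", "bus"],
        2: ["apple", "pear", "plum"],
    },
)

label, cost = model.predict([2.0, 1.0])
print(label, cost, model.flat_flops(2))  # cat 24 36
```

In this toy setting, with N classes split evenly into H hyper-classes over d input features, the routed model costs 2(H + N/H)d FLOPs per input, while a flat head costs 2Nd. Here that is 2(3 + 3)(2) = 24 versus 2(9)(2) = 36. The saving comes only from skipping the unselected fine heads. Real savings depend on how large the fine-grained stages are relative to the coarse one.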

翻译:深相神经网络在计算机视觉和其他应用方面显示出很高的效率。 但是,随着网络深度的提高,计算的复杂性正在成倍增长。 在本文中,我们提出了一个新的解决方案来降低用于许多类图像分类的共进神经网络模型的计算复杂性。我们提出的技术将分类任务分成两步:(1) 粗重分解,输入样本分为一套超级分类;(2) 细重分解,在第一步检测到的超高级中预测最终标签。我们说明,我们提议的分类师可以达到最佳分类模型报告的准确程度,而计算复杂性较低(Flop Count),只需激活图像分类所需的模型部分。