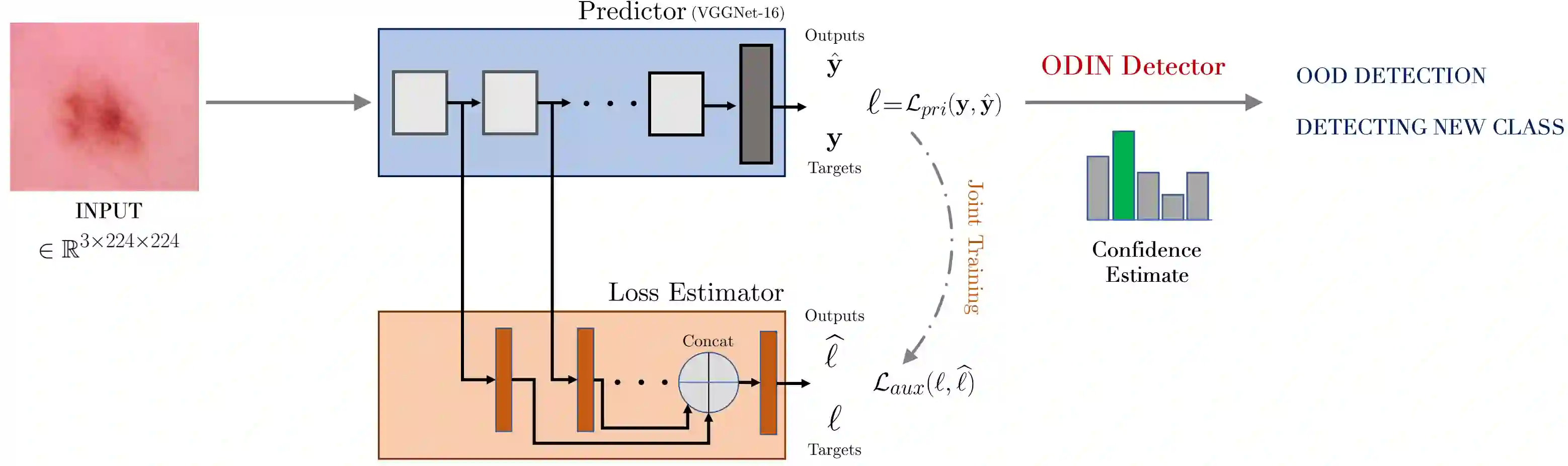

With growing interest in adopting AI methods for clinical diagnosis, a vital step towards the safe deployment of such tools is to ensure that models not only produce accurate predictions but also do not generalize to data regimes where the training data provide no meaningful evidence. Existing approaches for keeping the distribution of model predictions close to the true distribution rely on explicit uncertainty estimators that are inherently hard to calibrate. In this paper, we propose to train a loss estimator alongside the predictive model, using a contrastive training objective, to directly estimate the prediction uncertainties. Interestingly, we find that, in addition to producing well-calibrated uncertainties, this approach improves the generalization behavior of the predictor. Using a dermatology use case, we show the impact of loss estimators on model generalization, in terms of both fidelity on in-distribution data and the ability to detect out-of-distribution samples or new classes unseen during training.
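
The idea of training a loss estimator with a contrastive objective can be illustrated with a small sketch. The abstract does not specify the exact form of the objective, so the pairwise margin-ranking loss below is an assumption, as are the function names `cross_entropy` and `pairwise_ranking_objective` and the `margin` parameter. The sketch only scores a set of loss estimates against the predictor's true per-sample losses; joint training of the two models is not shown.

```python
import math


def cross_entropy(probs, label):
    """True per-sample loss of the predictor: negative log-probability of the label."""
    return -math.log(probs[label])


def pairwise_ranking_objective(est_losses, true_losses, margin=1.0):
    """Hypothetical contrastive objective for a loss estimator.

    For every pair (i, j) in which sample i has the higher true loss,
    apply a hinge penalty unless the estimated loss of i exceeds the
    estimated loss of j by at least `margin`.
    """
    total, pairs = 0.0, 0
    n = len(true_losses)
    for i in range(n):
        for j in range(n):
            if true_losses[i] > true_losses[j]:
                gap = est_losses[i] - est_losses[j]
                total += max(0.0, margin - gap)
                pairs += 1
    # With no comparable pairs (all true losses tied) there is nothing to penalize.
    return total / pairs if pairs else 0.0


# Toy batch: predicted class probabilities; the correct label is 0 for every sample.
batch_probs = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8]]
true_losses = [cross_entropy(p, 0) for p in batch_probs]

# Estimates that preserve the ordering of the true losses versus ones that invert it.
well_ordered = pairwise_ranking_objective([0.0, 2.0, 4.0], true_losses)
inverted = pairwise_ranking_objective([4.0, 2.0, 0.0], true_losses)
```

Under this assumed objective, estimates that rank samples in the same order as their true losses incur a lower penalty than estimates that invert the order. That ranking property is what would let the estimated loss serve as an uncertainty score.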